(This is a follow-up to my post from a little over a year ago, but with some experimental results.)

I sometimes see the claim (usually in comments on a blog post) that infrared radiation cannot warm a water body, because IR only affects the skin surface of the water, and any extra heating would be lost through evaporation.

I have tried to point out that evaporation, too, only occurs at the skin of a water surface, yet it is a major source of heat loss for water bodies. It may be that sunlight is more efficient, Joule for Joule, than infrared because of its greater depth of penetration (many meters rather than microns). But I would say it is pretty clear that any heat source (or heat sink, like evaporation) that only affects the skin is going to affect the entire water body as well, especially one that is continually being mixed by the wind.

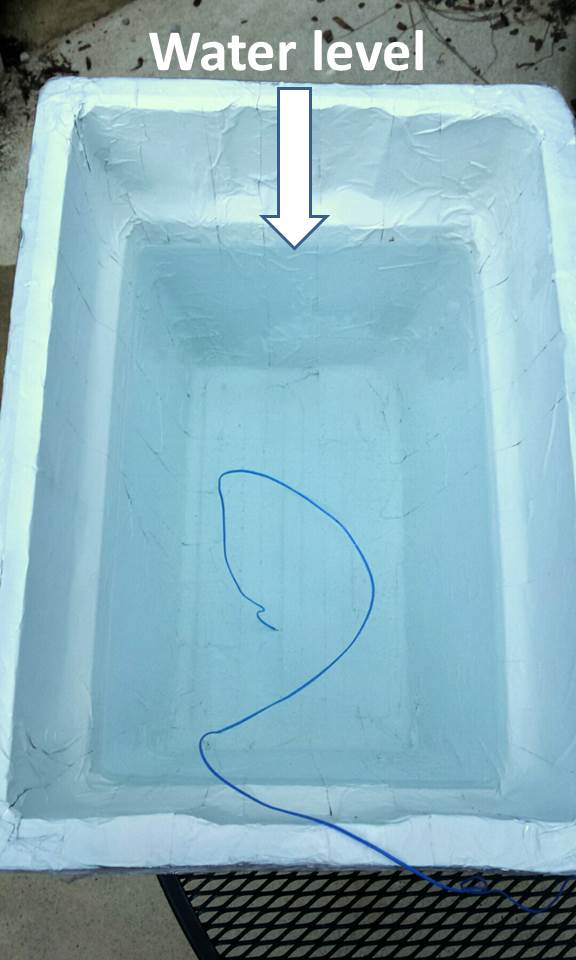

As seen in the second photo, over one of the coolers I placed a piece of aluminum flashing painted with high-IR-emissivity white paint to block the water's view of the “coldest” part of the sky (directly overhead). The sheet was small enough not to restrict airflow around it, so evaporation (and sensible heat transfer while the water was warmer than the air) cooled the water in both containers:

Two partially water-filled styrofoam coolers, with one partially shielded with aluminum flashing painted with high-emissivity white paint.

My FLIR i7 IR imager indicated that the sky “effective temperature” directly overhead was about 7 deg. F, while the aluminum sheet (viewed from below) was close to 80 deg. F. This means the sheet should reduce the radiative energy loss from the water, keeping the water warmer than if the sheet were not there (which is what the greenhouse effect does to the Earth’s surface).

Of course, these IR imagers do not provide a good estimate of the broadband IR effective emitting temperature of the sky because they are tuned to the 7.5 to 14 micron wavelength range, which avoids some of the greenhouse gas emission/absorption from the sky. So the true broadband-IR emission from the sky would be at a higher effective temperature.

For example, the most recent ground-based radiometer data from Goodwin Creek, MS, from the day before my experiment (June 28) showed early nighttime downwelling IR fluxes of about 360 W/m2, which corresponds to an effective emitting temperature of about 48 deg. F, considerably warmer than the 7 deg. F atmospheric-window measurement I made with my imager.
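The conversion from flux to effective emitting temperature is the Stefan-Boltzmann law solved for temperature, T = (F / (εσ))^(1/4). Here is a small Python sketch of that arithmetic, assuming a blackbody emitter (emissivity 1) unless another value is passed; the function names are just illustrative:

```python
# Invert the Stefan-Boltzmann law: what temperature must a blackbody have
# to emit a given broadband IR flux?
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def effective_temperature_k(flux_w_m2: float, emissivity: float = 1.0) -> float:
    """Return the emitting temperature (K) for a broadband flux (W/m^2)."""
    return (flux_w_m2 / (emissivity * SIGMA)) ** 0.25


def kelvin_to_fahrenheit(t_k: float) -> float:
    """Convert an absolute temperature from kelvin to deg. F."""
    return (t_k - 273.15) * 9.0 / 5.0 + 32.0


t_k = effective_temperature_k(360.0)  # downwelling IR flux quoted above
print(f"{t_k:.1f} K = {kelvin_to_fahrenheit(t_k):.1f} deg. F")  # 282.3 K = 48.4 deg. F
```

Because the flux goes as the fourth power of temperature, the 7.5 to 14 micron imager reading cannot be plugged into this formula directly; it only applies to the broadband flux.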

Also, numerous trees (and my house) block the lower elevations of the sky in my backyard, so only about 30-40% of the sky is visible.

Nevertheless, the metal sheet in the above photo still blocks the warmer water's view of a portion of the colder sky, so it should keep the water in the cooler on the right a little warmer than the unshielded water on the left.

So, let’s see what happened to the temperatures:

Overnight temperature data (taken every 5 mins) for water in the coolers, and air temperature between the coolers. A 1-hour trailing average is also shown in the second graph.
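With readings every 5 minutes, a 1-hour trailing average is the mean of each reading and the 11 before it. A minimal Python sketch, assuming evenly spaced samples with no gaps; the post doesn't say how the first hour (fewer than 12 samples) was handled, so here those early points simply average whatever samples exist so far:

```python
def trailing_average(samples, window=12):
    """Trailing moving average: each output is the mean of the current sample
    and up to window-1 preceding samples (12 x 5-min samples = 1 hour)."""
    out = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)  # shorter window during the first hour
        chunk = samples[start:i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


# Hypothetical readings (deg. F), one every 5 minutes; not the post's data.
readings = [80.0, 79.8, 79.7, 79.5, 79.4, 79.2]
print(trailing_average(readings))
```

A trailing (rather than centered) window means the smoothed curve lags the raw data by about half an hour.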

As can be seen in the 5-minute temperature data overnight, the cooler with the IR shield stayed a little warmer. The relatively faster cooling of the unshielded cooler slowed when high-level clouds moved in around 1:30 a.m. (as deduced from GOES satellite imagery).

I used this simple energy balance model to deduce that the shielded cooler had about 6 W/m2 less cooling than the unshielded one, which accounts for its temperature drop being 0.4 deg. F smaller over about 8 hours.
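As a rough stand-in for that kind of calculation (not necessarily the model used here), one can treat each cooler as a single well-mixed water layer, so a flux difference ΔF sustained for time Δt changes its temperature by ΔT = ΔF Δt / (ρ c_p h). This Python sketch solves that for ΔF. It ignores conduction through the Styrofoam walls and the cooler's own heat capacity, and the water depth `depth_m` is a hypothetical input, since the post does not report it:

```python
RHO_WATER = 1000.0  # kg/m^3, approximate density of water
CP_WATER = 4186.0   # J/(kg K), approximate specific heat of water


def flux_difference_w_m2(delta_t_f: float, hours: float, depth_m: float) -> float:
    """Flux difference (W/m^2) that would produce a temperature difference of
    delta_t_f (deg. F) over `hours` in a well-mixed water layer depth_m deep."""
    delta_t_k = delta_t_f * 5.0 / 9.0  # a temperature *difference*: no 32 offset
    seconds = hours * 3600.0
    heat_per_area = RHO_WATER * CP_WATER * depth_m * delta_t_k  # J/m^2
    return heat_per_area / seconds


# depth_m = 0.19 is a hypothetical value, chosen only for illustration.
print(f"{flux_difference_w_m2(0.4, 8.0, 0.19):.1f} W/m2")
```

The result scales linearly with depth, so the actual water depth in the coolers is needed before comparing against the 6 W/m2 figure.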

I’d like to try this again when the air mass is not so humid (surface dewpoints were in the mid to upper 60s F). Also, putting Saran wrap over the water surfaces would eliminate the primary source of heat loss: evaporation.

Note that the temperature effect in such an experiment is small because the atmosphere is already mostly opaque in the infrared, so adding a shield whose emitting temperature is only a little higher than what the sky is already producing won’t have a dramatic effect. But under the right conditions (warm water covered with Saran wrap, a very dry atmosphere, and an unobstructed view of the sky) much larger differences in water temperature should be possible.
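To see why a drier sky should make a bigger difference, one can estimate the extra IR the water receives when the shield replaces part of its sky view: f σ (T_shield⁴ − T_sky⁴), where f is the fraction of the water's view the shield occupies. This Python sketch treats both shield and sky as blackbodies with a single effective sky temperature; the blocked fraction (0.1) and the 20 deg. F "dry sky" value are hypothetical, not measurements from this experiment:

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def f_to_k(t_f: float) -> float:
    """Convert deg. F to kelvin."""
    return (t_f - 32.0) * 5.0 / 9.0 + 273.15


def extra_downwelling_w_m2(t_shield_f: float, t_sky_f: float,
                           blocked_fraction: float) -> float:
    """Extra IR (W/m^2) reaching the water when a blackbody shield at t_shield_f
    replaces sky of effective temperature t_sky_f over blocked_fraction (0..1)
    of the water's view."""
    return blocked_fraction * SIGMA * (f_to_k(t_shield_f) ** 4 - f_to_k(t_sky_f) ** 4)


# 48 deg. F: broadband sky estimate from the radiometer; 20 deg. F: hypothetical dry sky.
for t_sky in (48.0, 20.0):
    print(t_sky, round(extra_downwelling_w_m2(80.0, t_sky, 0.1), 1))
```

The shield's emission depends only on its own temperature, so its benefit grows as the sky's effective temperature drops, which is the dry-atmosphere case.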
