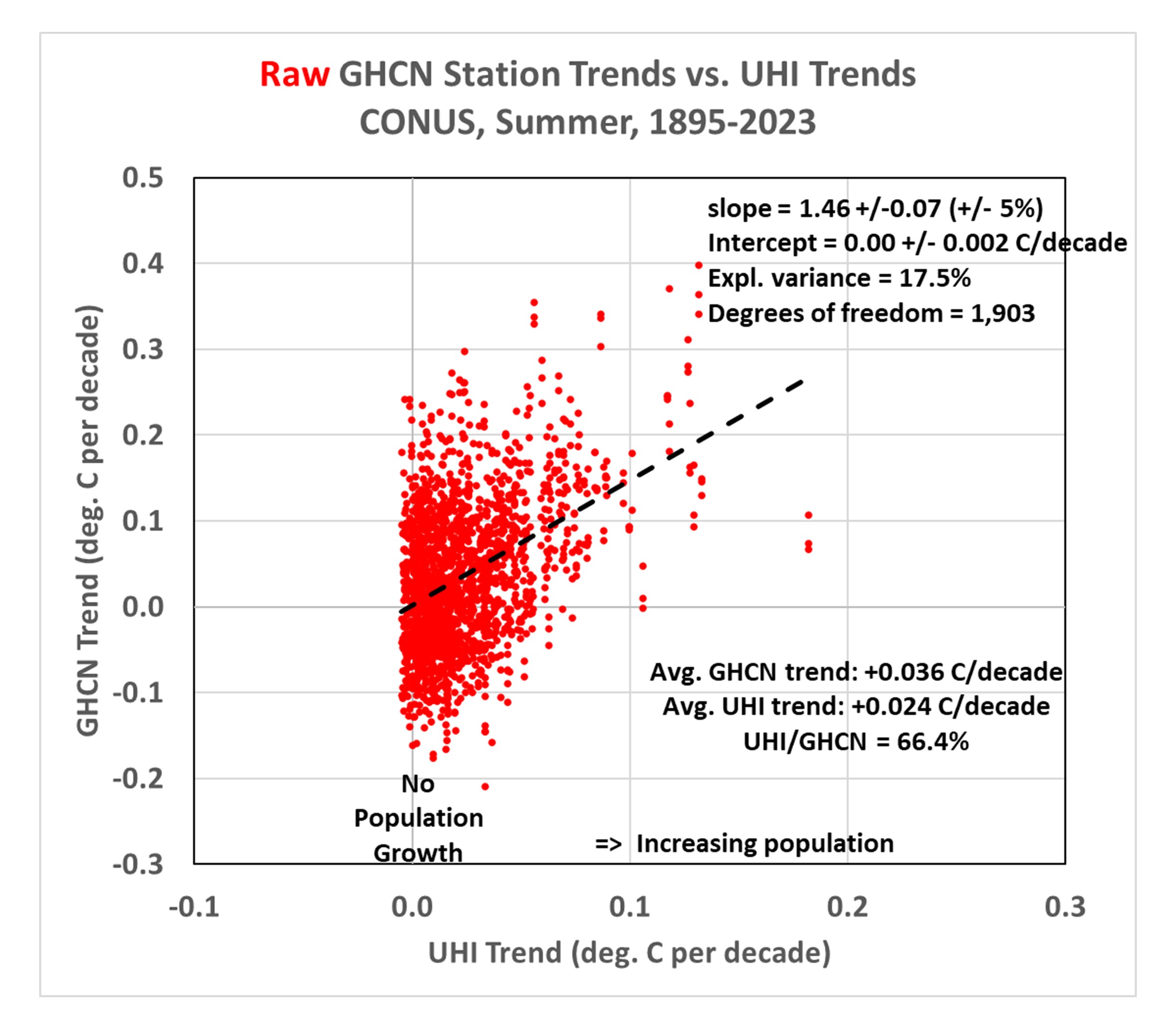

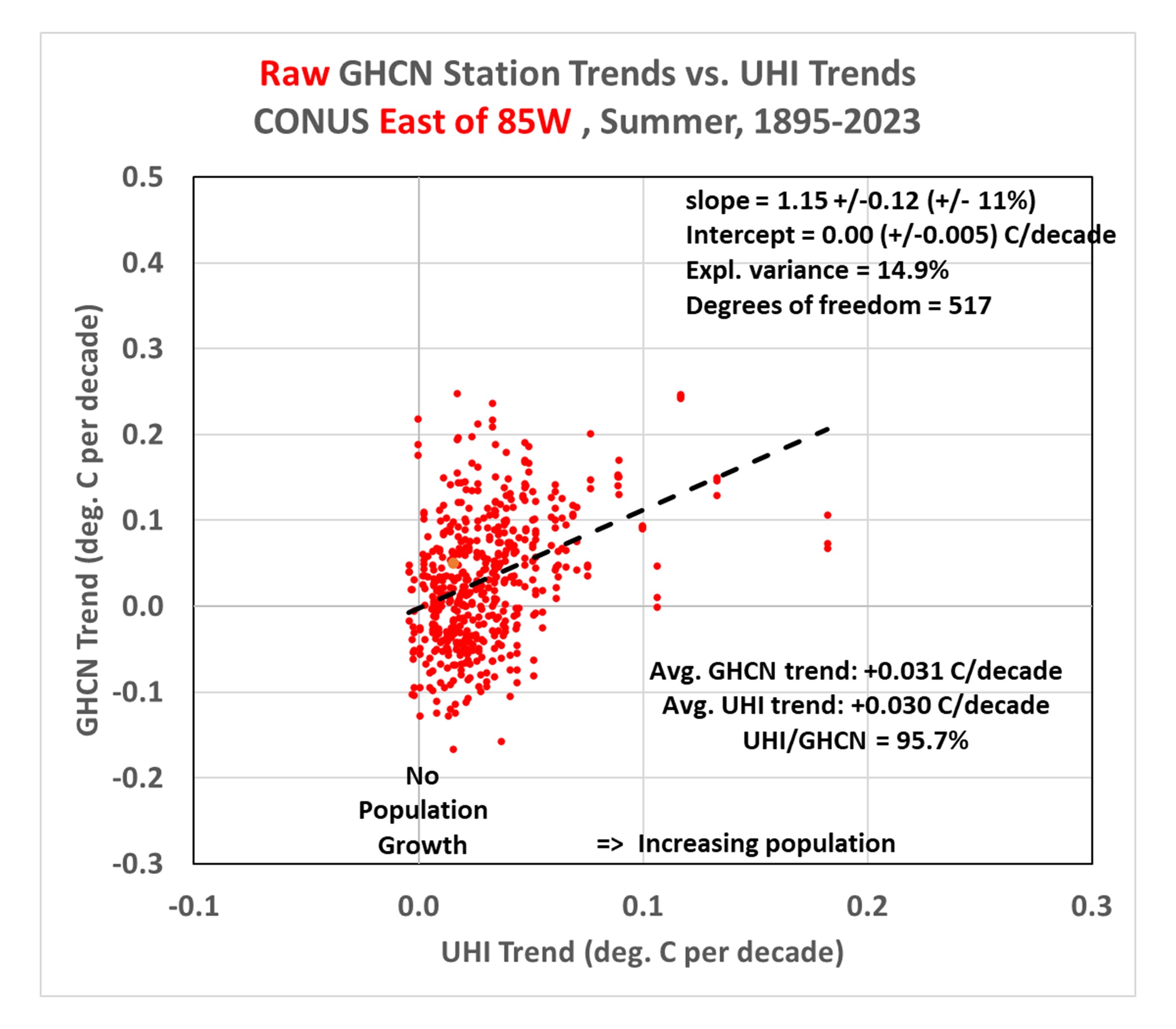

In a recent post I used our new Urban Heat Island (UHI) warming estimates at individual U.S. GHCN stations having at least 120 years of data to demonstrate that the homogenized (adjusted) GHCN data still contain substantial UHI effects. Therefore, spurious warming from UHI effects is inflating reported U.S. warming trends.

The data plots I presented had considerable scatter, though, leading to concerns that there is large uncertainty in my quantitative estimates of how much UHI warming remains in the GHCN data. So, I updated that post to include additional statistics of the regressions.

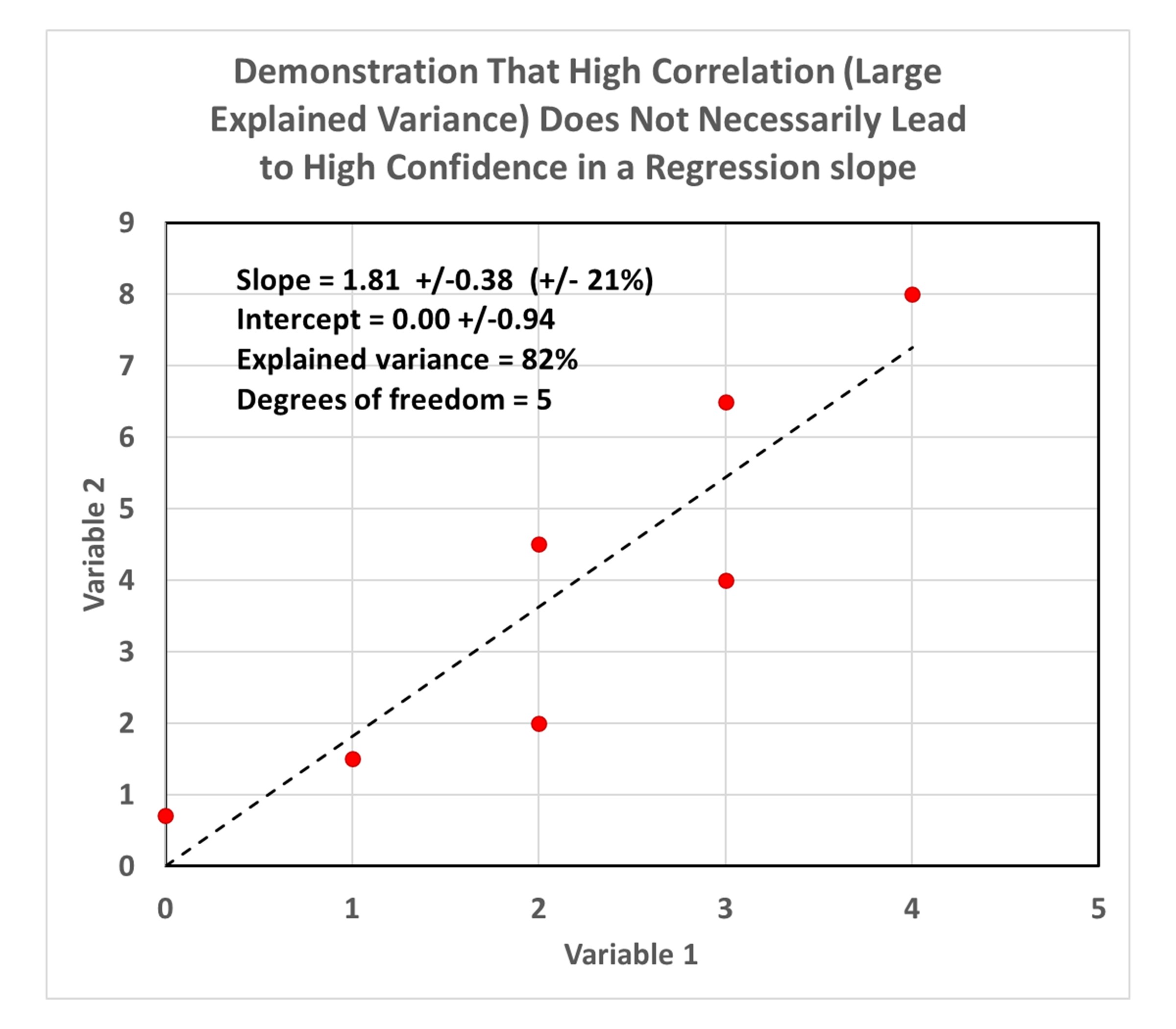

A Simple Example: High Correlation, But Low Confidence In the Regression Slope

The following plot of a small dataset I created shows what looks like a pretty strong linear relationship between two variables, with a regression explained variance of 82% (correlation coefficient of 0.91).

But because there are so few data points, there is large statistical uncertainty in the diagnosed regression slope (+/- 21%), as well as in the regression intercept (which is diagnosed as 0.0, but with an uncertainty of +/- 0.94).

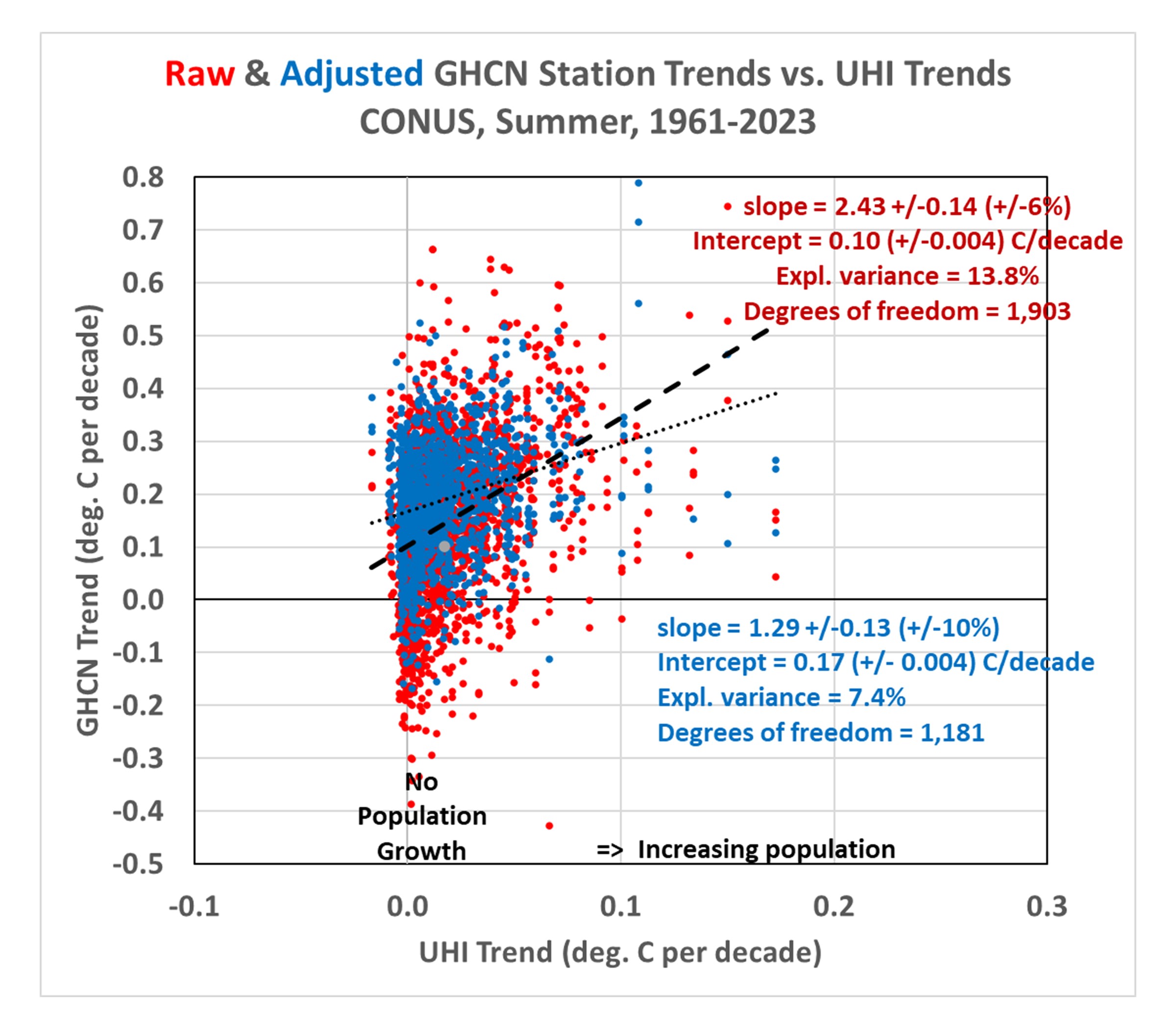

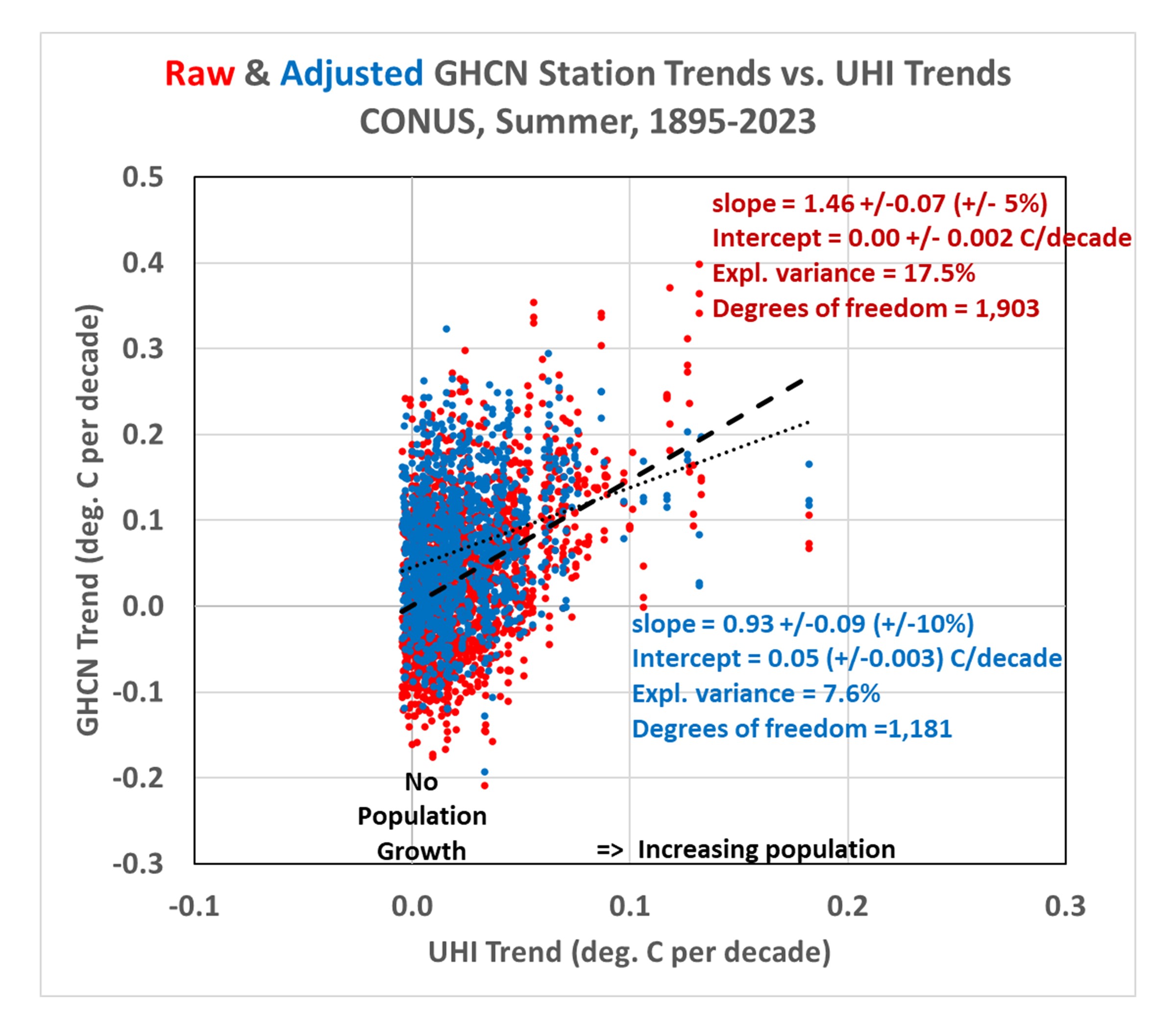

Now let’s look at the third data plot from my previous blog post, which demonstrated that there is UHI warming in not only the raw GHCN data, but in the homogenized data as well:

Importantly, even though the regression explained variances are rather low (17.5% for the raw data, 7.6% for the adjusted data), the confidence in the regression slopes is quite high (+/- 5% for the raw GHCN regressions, and +/- 10% for the homogenized GHCN regressions). Confidence is also high in the regression intercepts (+/- 0.002 C/decade for the raw GHCN data, +/- 0.003 C/decade for the homogenized GHCN data).

Compare these to the first plot above containing very few data points, which had a very high explained variance (82%) but a rather uncertain regression slope (+/- 21%).
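To make that comparison concrete, here is a minimal Python sketch of the same effect. The numbers are made up purely for illustration: they are not the GHCN station data, and they are not the small dataset plotted above. The helper names `fit_line` and `noisy_line` are hypothetical. The sketch reports the one-sigma standard error of the slope; the confidence convention behind the percentages quoted above is not stated here, so the outputs should not be compared digit for digit with those figures.

```python
import math
import random


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r2, slope_se), where r2 is the explained
    variance and slope_se is the one-sigma standard error of the slope.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = syy - slope * sxy              # residual sum of squares
    r2 = 1.0 - sse / syy                 # explained variance
    slope_se = math.sqrt(sse / (n - 2) / sxx)
    return slope, intercept, r2, slope_se


def noisy_line(n, noise_sd, rng):
    """Hypothetical generator: y = x plus Gaussian noise, x uniform on [0, 1]."""
    x = [rng.uniform(0.0, 1.0) for _ in range(n)]
    y = [xi + rng.gauss(0.0, noise_sd) for xi in x]
    return x, y


# Case 1: seven hand-picked points that hug a straight line fairly closely.
x_small = [0, 1, 2, 3, 4, 5, 6]
y_small = [1.0, 0.0, 2.8, 2.1, 5.1, 4.0, 6.0]
small = fit_line(x_small, y_small)

# Case 2: 5000 points with the same underlying slope buried in heavy noise.
rng = random.Random(0)
large = fit_line(*noisy_line(5000, 0.9, rng))

for label, (slope, intercept, r2, slope_se) in (
    ("few points, tight fit", small),
    ("many points, noisy fit", large),
):
    pct = 100.0 * slope_se / abs(slope)
    print(f"{label}: explained variance = {100 * r2:.0f}%, "
          f"slope = {slope:.2f} +/- {pct:.0f}%")
```

The seven-point case has the higher explained variance but the wider slope uncertainty, while the 5000-point case has a low explained variance and a much tighter slope. That is the same pattern as in the comparison above.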

The points I was making in my previous blog post depended upon both the regression slopes and the regression intercepts. The positive slopes demonstrated that the greater the population growth at GHCN stations, the greater the warming trend… not only in the raw data, but in the homogenized data as well. The regression intercepts of zero indicated that the data, taken as a whole, suggested zero warming trend (1895-2023) if the stations had not experienced population growth.

But it must be emphasized that these are all-station averages for the U.S., not area averages. It is “possible” that there has (by chance) actually been more climate warming at the locations where there has been more population growth. So it would be premature to claim there has been no warming trend in the U.S. after taking into account spurious UHI warming effects. I also showed that there has been warming if we look at more recent data (1961-2023).

But the main point of this post is to demonstrate that a low correlation between two variables does not necessarily mean low confidence in the regression slope (or intercept). The confidence intervals also depend upon how many data points the dataset contains.
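For readers who want the dependence on sample size written out, the standard textbook relation for ordinary least squares with a single predictor is sketched below. The symbols are introduced here for illustration and do not appear in the original analysis: $b$ is the fitted slope, $\sigma_b$ its one-sigma standard error, $r^2$ the explained variance, and $n$ the number of data points.

$$
\frac{\sigma_b}{|b|} = \sqrt{\frac{1 - r^2}{r^2\,(n - 2)}}
$$

For a fixed $r^2$, the fractional uncertainty in the slope shrinks roughly as $1/\sqrt{n}$, so a weak correlation can still pin down the slope well when there are many data points. Note that the relation assumes independent, identically distributed residuals; if the residuals are correlated between data points, the effective $n$ is smaller and the uncertainty is larger.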
