See, I told you so.

One of the most fundamental requirements of any physics-based model of climate change is that it must conserve mass and energy. This is partly why I (along with Danny Braswell and John Christy) have been using simple 1-dimensional climate models that have simplified calculations and where conservation is not a problem.

Changes in the global energy budget associated with increasing atmospheric CO2 are small, roughly 1% of the average radiative energy fluxes in and out of the climate system. So, you would think that climate models are constructed carefully enough that, without any global radiative energy imbalance imposed on them (no “external forcing”), they would not produce any temperature change.

It turns out, this isn’t true.

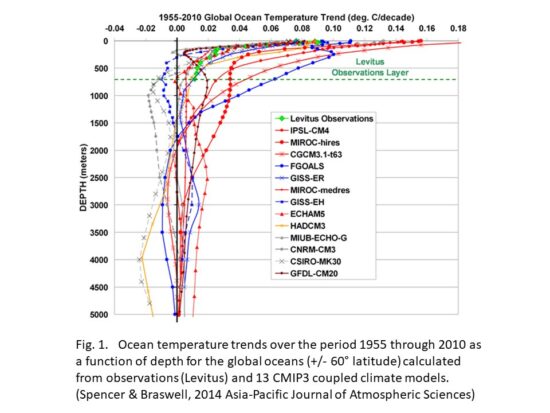

Back in 2014 our 1D model paper showed evidence that CMIP3 models don’t conserve energy, as evidenced by the wide range of deep-ocean warming (and even cooling) that occurred in those models despite the imposed positive energy imbalance the models were forced with to mimic the effects of increasing atmospheric CO2.

Now, I just stumbled upon a paper from 2021 (Irving et al., A Mass and Energy Conservation Analysis of Drift in the CMIP6 Ensemble) which describes significant problems in the latest (CMIP5 and CMIP6) models regarding not only energy conservation in the ocean but also at the top-of-atmosphere (TOA, thus affecting global warming rates) and even the water vapor budget of the atmosphere (which represents the largest component of the global greenhouse effect).

These represent potentially serious problems when it comes to our reliance on climate models to guide energy policy. It boggles my mind that conservation of mass and energy were not requirements of all models before their results were released decades ago.

One possible source of problems is the model “numerics”… the mathematical formulas (often “finite-difference” formulas) used to compute changes in all quantities between gridpoints in the horizontal, levels in the vertical, and from one time step to the next. Minuscule errors in these calculations can accumulate over time, especially if physically impossible negative mass values are set to zero, causing “leakage” of mass. We don’t worry about such things in weather forecast models that are run for only days or weeks. But climate models are run for decades or hundreds of years of model time, so tiny errors that don’t average out to zero keep adding up.
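To make the negative-mass point concrete, here is a minimal sketch (a toy, not anything taken from an actual climate model). It assumes a 1D periodic grid, a square pulse of tracer mass, and the textbook Lax-Wendroff finite-difference scheme, which conserves total mass on a periodic domain but undershoots below zero near sharp edges. The function names, grid size, and Courant number are all made up for illustration. Resetting the negative values to zero after each step breaks conservation; in this toy setup the error shows up as a spurious gain in total mass rather than a loss.

```python
def lax_wendroff_step(q, c):
    """Advance tracer q one time step on a periodic 1D grid.

    c is the Courant number (u * dt / dx); the scheme is stable for |c| <= 1.
    """
    n = len(q)
    new_q = []
    for i in range(n):
        left = q[i - 1]           # index -1 wraps around (periodic domain)
        right = q[(i + 1) % n]
        new_q.append(
            q[i]
            - 0.5 * c * (right - left)
            + 0.5 * c * c * (right - 2.0 * q[i] + left)
        )
    return new_q


def total_mass_after(n_steps, clip_negatives):
    """Return total tracer mass after n_steps, optionally zeroing negatives."""
    # Hypothetical setup: 100 gridpoints, a 10-point-wide pulse of mass 1.0 each.
    q = [1.0 if 20 <= i < 30 else 0.0 for i in range(100)]
    for _ in range(n_steps):
        q = lax_wendroff_step(q, 0.5)
        if clip_negatives:
            # The "fix" for impossible negative mass: set it to zero.
            q = [max(value, 0.0) for value in q]
    return sum(q)


initial_mass = 10.0
print("no clipping  :", total_mass_after(200, False) - initial_mass)
print("with clipping:", total_mass_after(200, True) - initial_mass)
```

Without clipping, the total stays at the initial value to within round-off. With clipping, every step that removes a negative value adds a little mass, and those additions never cancel, which is exactly the kind of one-sided error that matters over a long model run.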

The 2021 paper describes one of the CMIP6 models where one of the surface energy flux calculations was found to have missing terms (essentially, a programming error). When that was found and corrected, the spurious ocean temperature drift was removed. The authors suggest that, given the number of models (over 30 now) and the number of model processes involved, it would take a huge effort to track down and correct these model deficiencies.

I will close with some quotes from the 2021 J. of Climate paper in question.

“Our analysis suggests that when it comes to globally integrated OHC (ocean heat content), there has been little improvement from CMIP5 to CMIP6 (fewer outliers, but a similar ensemble median magnitude). This indicates that model drift still represents a nonnegligible fraction of historical forced trends in global, depth-integrated quantities…”

“We find that drift in OHC is typically much smaller than in time-integrated netTOA, indicating a leakage of energy in the simulated climate system. Most of this energy leakage occurs somewhere between the TOA and ocean surface and has improved (i.e., it has a reduced ensemble median magnitude) from CMIP5 to CMIP6 due to reduced drift in time-integrated netTOA. To put these drifts and leaks into perspective, the time-integrated netTOA and systemwide energy leakage approaches or exceeds the estimated current planetary imbalance for a number of models.”

“While drift in the global mass of atmospheric water vapor is negligible relative to estimated current trends, the drift in time-integrated moisture flux into the atmosphere (i.e., evaporation minus precipitation) and the consequent nonclosure of the atmospheric moisture budget is relatively large (and worse for CMIP6), approaching/exceeding the magnitude of current trends for many models.”
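The bookkeeping behind those quotes can be sketched in a few lines. This is my reading of the quoted passages, not the paper's actual code: take a control run with no external forcing, integrate the net TOA flux over time, fit a linear trend (the “drift”) to that and to the ocean heat content, and call the difference between the two trends the leakage. The function names and the numbers in the example are made up for illustration and are in arbitrary energy units per year.

```python
def linear_trend(values, dt=1.0):
    """Least-squares slope of a series sampled every dt time units."""
    n = len(values)
    times = [i * dt for i in range(n)]
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    den = sum((t - t_mean) ** 2 for t in times)
    return num / den


def energy_leakage(net_toa_flux, ohc, dt=1.0):
    """Drift in time-integrated netTOA minus drift in OHC.

    A result of zero would mean every unit of energy entering at the
    top of the atmosphere shows up as ocean heat content.
    """
    integrated = []
    running_total = 0.0
    for flux in net_toa_flux:
        running_total += flux * dt
        integrated.append(running_total)
    return linear_trend(integrated, dt) - linear_trend(ohc, dt)


# Made-up 50-year control run: 0.5 units/yr enter at the TOA,
# but the ocean only gains 0.2 units/yr.
net_toa = [0.5] * 50
ocean_heat = [0.2 * year for year in range(50)]
leak = energy_leakage(net_toa, ocean_heat)
print(leak)
```

In this made-up case the difference between the two trends is energy that the model neither stores in the ocean nor sends back to space, which is the “leakage” the authors describe.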
