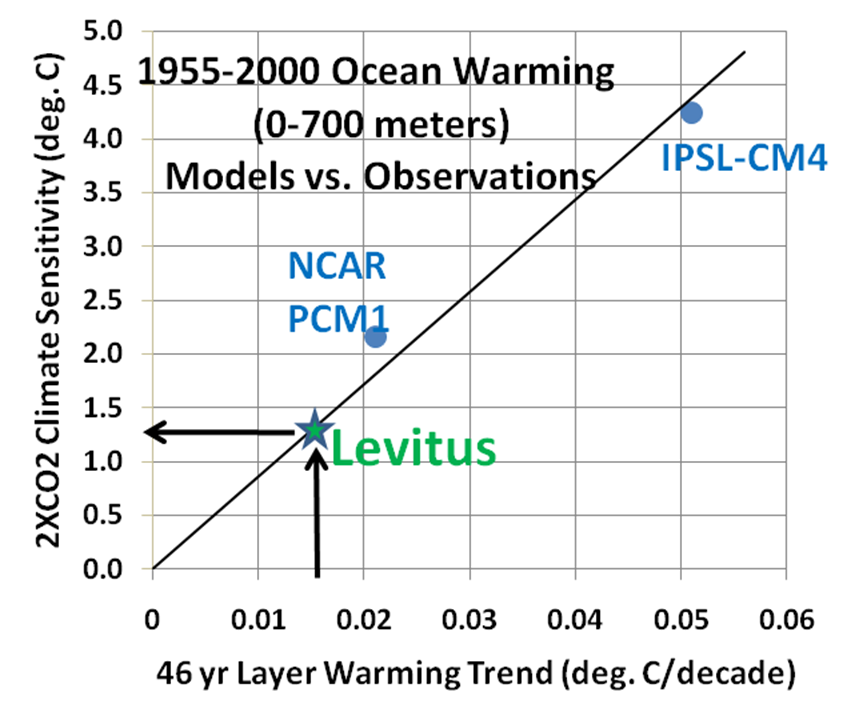

The following comparison between 20th Century runs from most (15) of the IPCC AR4 climate models and the Levitus observations of ocean warming during 1955-1999 further bolsters the case for a relatively low climate sensitivity: estimated here to be about 1.3 deg. C for a future doubling of atmospheric CO2. This is quite a bit lower than the IPCC’s best estimate of 3 deg. C warming.

But the behavior of the models’ temperatures in the deep ocean was not at all what I expected. They say “too many cooks spoil the broth”. Well, it looks like 15 climate modeling groups make spaghetti out of ocean temperature trends.

Deep Ocean Spaghetti

The deep-ocean temperature trends in the 15 models which had complete ocean data archived for the period 1955-1999 are surprising, because I expected to see warming at all depths. Instead, the models exhibit wildly different behaviors, with deep-ocean cooling just as likely as warming depending upon the model and ocean layer in question:

Three of the models actually produced average cooling of the full depth of the oceans while the surface warmed, which seems physically implausible to say the least. More on that in a minute.

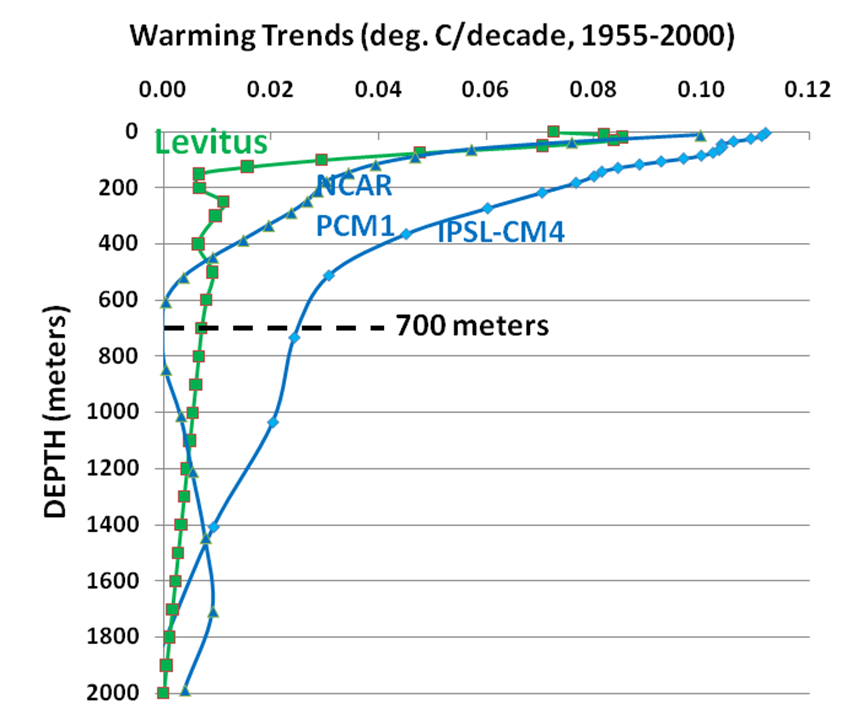

The most common difference between the models and the observations down to 700 m (the deepest level for which we have Levitus observations to compare to) is that the models tend to warm the ocean too much. Of those models that don’t, almost all produce unexpected cooling below the mixed layer (approximately the top 100 m of the ocean).

From what I understand, the differences between the various models’ temperature trends are due to some combination of at least 3 processes:

1) CLIMATE SENSITIVITY: More sensitive models should store more heat in the ocean over time; this is the relationship we want to exploit to estimate the sensitivity of the real climate system from the rates of observed warming compared to the rates of warming in the climate models.

2) CHANGES IN VERTICAL MIXING OVER TIME: The deep ocean is filled with cold, dense water formed at high latitudes, while the upper layers are warmed by the sun. Vertical mixing acts to reduce that temperature difference. Thus, if ocean mixing strengthens over time, the deep ocean would warm and the upper ocean would cool as the vertical temperature difference is reduced. Conversely, if mixing weakens over time, the deep ocean would cool and the upper ocean would warm. Because either change only moves heat vertically, the warming and cooling should cancel out over the full depth of the ocean. Both behaviors can be seen in a number of the models.

3) SPURIOUS TEMPERATURE TRENDS IN THE DEEP OCEAN: This is a problem that the models apparently have not fully addressed. Because it takes about 1,000 years for the ocean circulation to overturn, a climate model must be run for a very long time before its deep ocean settles into a stable temperature, say to within 0.1 deg. C or better. While some knowledgeable expert reading this might want to correct me, it appears to me that some of these models have spurious temperature trends, unrelated to the CO2 (and other) forcings imposed on the models during 1955-1999. This is likely due to insufficient “spin-up” time for the model to reach a stable deep-ocean temperature. Until this problem is fixed, I don’t see how models can address the extent to which the extra heat from “global warming” is (or isn’t) being mixed into the deep ocean. Maybe the latest versions of the climate models, which will be archived in the coming months, do a better job.
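Process (2) can be illustrated with a toy two-layer ocean. This is a minimal sketch, not anything taken from the IPCC models: the layer thicknesses, starting temperatures, and exchange coefficient `k` are all assumed values, chosen only to show that a change in mixing moves heat between the upper and deep layers while leaving the full-depth average unchanged.

```python
# Toy two-layer ocean (hypothetical parameters) illustrating process (2):
# changing the vertical mixing rate moves heat between layers but does not
# change the thickness-weighted full-depth mean temperature.

H_UPPER = 100.0   # assumed upper (mixed) layer thickness, m
H_DEEP = 3900.0   # assumed deep layer thickness, m


def mix_step(t_upper, t_deep, k):
    """One step of down-gradient exchange with an assumed coefficient k (m per step)."""
    flux = k * (t_upper - t_deep)  # heat moved downward, in deg C * m
    return t_upper - flux / H_UPPER, t_deep + flux / H_DEEP


def run(k, steps=50, t_upper=20.0, t_deep=2.0):
    """Integrate from a warm-over-cold starting state; returns (t_upper, t_deep)."""
    for _ in range(steps):
        t_upper, t_deep = mix_step(t_upper, t_deep, k)
    return t_upper, t_deep


def full_depth_mean(t_upper, t_deep):
    """Thickness-weighted mean temperature of the whole column."""
    return (t_upper * H_UPPER + t_deep * H_DEEP) / (H_UPPER + H_DEEP)


weak = run(k=0.5)    # weaker mixing
strong = run(k=2.0)  # stronger mixing
print("weak mixing:   upper %.2f, deep %.3f, mean %.3f" % (*weak, full_depth_mean(*weak)))
print("strong mixing: upper %.2f, deep %.3f, mean %.3f" % (*strong, full_depth_mean(*strong)))
```

Stronger mixing leaves a cooler upper layer and a warmer deep layer, yet both runs have the same full-depth mean, which is the sense in which mixing changes alone should cancel over the full depth.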

Of course, we would like to exploit process (1) to get an estimate of how sensitive the real climate system is, using the Levitus observations (green curve in the above plot). Unfortunately, processes (2) and (3), which cause temperature trends unrelated to upper-ocean warming, seem to be a real problem in most of these models.
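Process (3), spurious drift from insufficient spin-up, can be sketched with a single deep layer that relaxes exponentially toward equilibrium. The 1,000-year time scale comes from the text; treating it as an exponential e-folding time, the 1 deg. C initial offset, and the 45-year window (roughly 1955-1999) are assumptions made only for illustration.

```python
import math

# Hypothetical illustration of process (3): a deep-ocean layer that starts
# `offset0` deg C away from its equilibrium temperature and relaxes toward it
# exponentially with an assumed e-folding time `tau_years`. Any change left
# during the analysis window is drift unrelated to the imposed forcing.


def residual_drift(offset0, tau_years, spinup_years, window_years=45.0):
    """Temperature change (deg C) over a window that begins after `spinup_years`
    of spin-up, for an offset decaying as offset0 * exp(-t / tau_years)."""
    start = offset0 * math.exp(-spinup_years / tau_years)
    end = offset0 * math.exp(-(spinup_years + window_years) / tau_years)
    return end - start


for spinup in (200, 1000, 3000):
    drift = residual_drift(offset0=1.0, tau_years=1000.0, spinup_years=spinup)
    print(f"spin-up {spinup:5d} yr -> drift over window {drift:+.4f} deg C")
```

The shorter the spin-up relative to the overturning time, the larger the spurious trend that shows up during the analysis period.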

Choosing the Best Subset of Models

Obviously, models that produce a global net cooling of the oceans during a period of surface warming (1955-1999) cannot be expected to provide a useful comparison to observations. Maybe someone can correct me, but it appears those models do not even conserve energy.
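One simple way to flag models that cool the full ocean depth is a thickness-weighted mean of each model's trend profile. This is a sketch, not necessarily the procedure used here: the layer thicknesses, model names, and trend values are made up, and it ignores changes in ocean area and heat capacity with depth.

```python
# Hypothetical check for "net cooling" models: the thickness-weighted mean
# trend over the full depth. Assumes equal heat capacity per unit volume and
# equal horizontal area at every depth (both simplifications).


def full_depth_mean_trend(thicknesses_m, trends):
    """Thickness-weighted mean of per-layer temperature trends (deg C per unit time)."""
    total = sum(thicknesses_m)
    return sum(h * t for h, t in zip(thicknesses_m, trends)) / total


def net_cooling_models(profiles, thicknesses_m):
    """Sorted names of models whose full-depth mean trend is negative."""
    return sorted(
        name
        for name, trends in profiles.items()
        if full_depth_mean_trend(thicknesses_m, trends) < 0.0
    )


# Made-up layers (0-100, 100-700, 700-2000, 2000-4000 m) and trend profiles.
layers = [100.0, 600.0, 1300.0, 2000.0]
profiles = {
    "model_A": [0.5, 0.1, 0.02, 0.01],
    "model_B": [0.5, -0.1, -0.05, -0.02],
}
print(net_cooling_models(profiles, layers))
```

Both made-up models warm the top layer, but only one of them gains heat over the full column.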

For quantitative comparison to the Levitus observations, I decided to accept only those models whose monthly mixed layer temperature variations during 1955-1999 had at least a 0.7 correlation with the Levitus observations in the 0-50 m layer over the same period. In other words, I omitted the models that behaved least like the observations.
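The screening step can be sketched as a Pearson correlation between each model's 0-50 m temperature series and the Levitus series, keeping models at or above 0.7. The series below are short made-up examples rather than actual model or Levitus data, and the model names are hypothetical.

```python
import math

# Hypothetical screening sketch: keep models whose series correlates with the
# observed series at r >= threshold (0.7 in the text).


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("series must have equal length >= 2")
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def screen_models(model_series, obs_series, threshold=0.7):
    """Sorted names of models whose correlation with obs meets the threshold."""
    return sorted(
        name
        for name, series in model_series.items()
        if pearson_r(series, obs_series) >= threshold
    )


obs = [0.0, 0.1, 0.05, 0.2, 0.15, 0.3]  # made-up anomalies, deg C
models = {
    "good_model": [0.0, 0.12, 0.04, 0.22, 0.16, 0.33],
    "bad_model": [0.3, 0.1, 0.2, 0.0, 0.15, 0.05],
}
print(screen_models(models, obs))
```

In practice the series would be the full monthly records for 1955-1999; the threshold is the only tunable choice, and loosening it admits more models.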

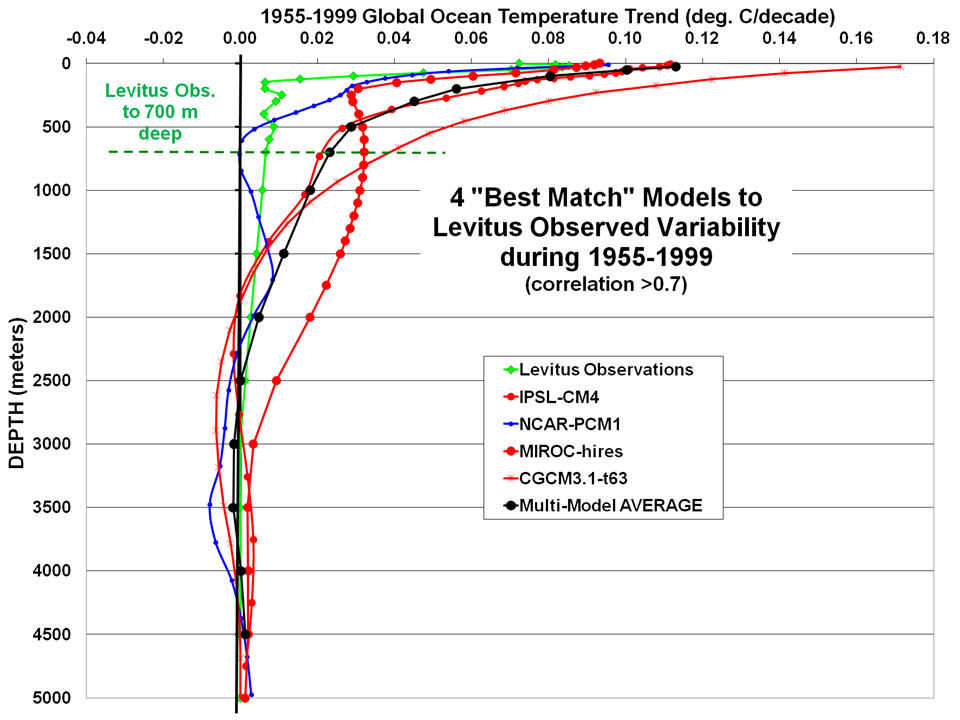

The remaining 4 models’ trends are shown in the next plot, along with their average:

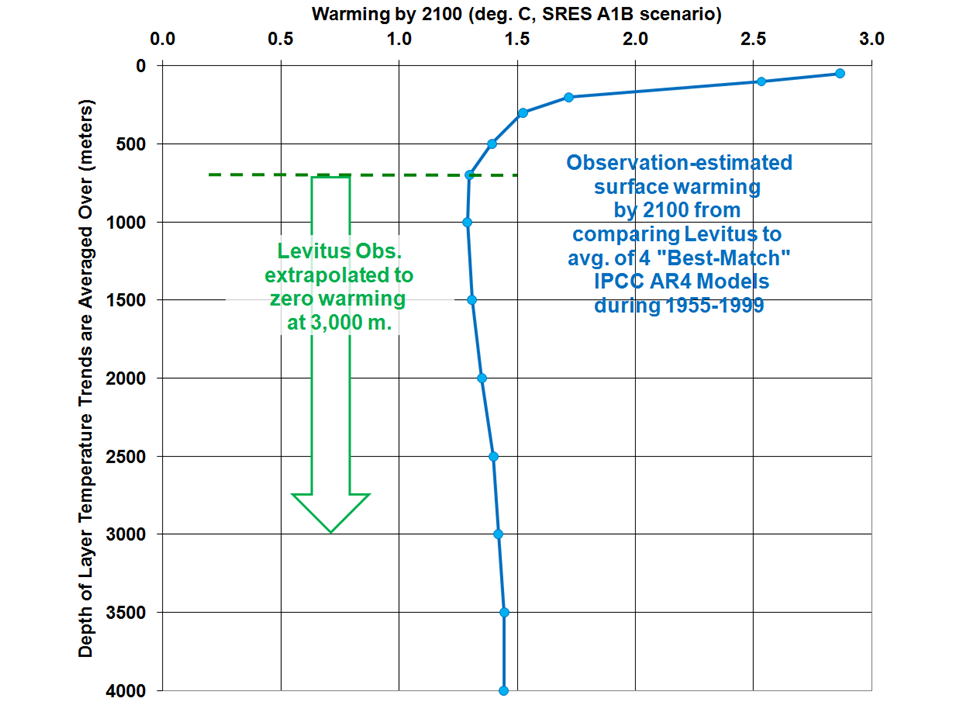

Note that the 4-model average (black curve) shows warming down to about 2,500 meters depth. Now that we have excluded the models that are the most unrealistic, we can make a more meaningful quantitative comparison between the Levitus observations (which I have extrapolated to zero warming at 3,000 m depth) and the models.
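The multi-model average and the extension of the observations below 700 m can be sketched as follows. The text does not say how the Levitus profile was extrapolated to zero at 3,000 m; a linear taper in depth is an assumption here, and all depths and trend values are made up.

```python
# Hypothetical sketch: depth-by-depth ensemble mean of model trend profiles,
# plus an observed profile extended (by assumed linear interpolation) from its
# deepest level down to zero trend at 3,000 m.


def ensemble_mean(profiles):
    """Mean of several trend profiles sampled at the same depths."""
    n = len(profiles)
    return [sum(values) / n for values in zip(*profiles)]


def interp(x, xs, ys):
    """Piecewise-linear interpolation; holds the end values outside [xs[0], xs[-1]]."""
    if x <= xs[0]:
        return ys[0]
    for x0, y0, x1, y1 in zip(xs, ys, xs[1:], ys[1:]):
        if x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return ys[-1]


def extend_obs_profile(depths_obs, trends_obs, depths_out, zero_depth=3000.0):
    """Observed trends at depths_out, assuming a linear taper to zero at zero_depth."""
    xs = list(depths_obs) + [zero_depth]
    ys = list(trends_obs) + [0.0]
    return [interp(d, xs, ys) for d in depths_out]


depths = [0.0, 700.0, 1850.0, 3000.0, 4000.0]
model_avg = ensemble_mean([[0.4, 0.2, 0.1, 0.05, 0.0], [0.6, 0.3, 0.05, 0.0, -0.02]])
obs_ext = extend_obs_profile([0.0, 700.0], [0.3, 0.1], depths)
print(model_avg)
print(obs_ext)
```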

Estimating Climate Sensitivity from the Levitus Observations

As reported by Forster & Taylor (2006 J. Climate), the average surface warming produced by 2100 in these 4 models (based upon the SRES A1B emissions scenario) is 3.9 deg. C. If we scale that by the smaller amount of warming seen in the observations, we get the following plot for estimated warming by 2100 based upon averaging the trends to various depths:

While the Levitus observations in the mixed layer are consistent with the model predictions of about 3 deg. C of warming by 2100, the much weaker warming in the entire 0-700 m layer suggests a much lower rate of future warming: about 1.3 deg. C or so.
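The scaling described above can be sketched as: estimated 2100 warming = 3.9 deg. C × (observed mean trend from the surface down to depth D) / (4-model mean trend over the same layer). This is a hedged reconstruction of the description, not the author's code; only the 3.9 deg. C figure comes from the text, and the layer trends are made up. Under this scaling, going from 3.9 to about 1.3 deg. C corresponds to observed 0-700 m warming roughly one third of the model average.

```python
# Hypothetical sketch of the scaling step. MODEL_WARMING_2100 is the 4-model
# A1B figure quoted in the text; all trend values below are made up.

MODEL_WARMING_2100 = 3.9  # deg C by 2100 (Forster & Taylor 2006, as quoted)


def cumulative_layer_means(thicknesses_m, trends):
    """Thickness-weighted mean trend from the surface to the bottom of each layer."""
    means, heat, depth = [], 0.0, 0.0
    for h, t in zip(thicknesses_m, trends):
        heat += h * t
        depth += h
        means.append(heat / depth)
    return means


def scaled_warming(obs_means, model_means, model_warming=MODEL_WARMING_2100):
    """Model warming scaled by the observed-to-model ratio for each layer.

    The ratio is unreliable wherever the model mean trend is near zero."""
    return [model_warming * o / m for o, m in zip(obs_means, model_means)]


layers = [50.0, 650.0]  # 0-50 m and 50-700 m (illustrative split)
obs = cumulative_layer_means(layers, [0.30, 0.05])
model = cumulative_layer_means(layers, [0.40, 0.15])
for d, w in zip((50, 700), scaled_warming(obs, model)):
    print(f"0-{d} m: estimated warming by 2100 = {w:.2f} deg C")
```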

If I loosen the requirements on the number of models accepted, and include 9 (rather than 4) out of 15, the predicted rate of warming increases a little, to 1.5 deg. C.

Discussion

Since this result uses the IPCC models themselves to “calibrate” the Levitus observations, it cannot be faulted for using a model that is “too simple”. To the extent the average IPCC model mimics nature, the models themselves suggest the relatively weak ocean warming observed during 1955-1999 indicates relatively low climate sensitivity.

Previous investigators (as well as the IPCC AR4 report) have claimed that warming of the oceans is “consistent with” anthropogenic forcing of the climate system. But they did not say exactly how consistent, in a quantitative sense.

The IPCC AR4 report admits that the climate models tend to warm the deep ocean too much, but the report puts error bars on both the models and observations which overlap each other, so then it can be claimed the overlap is evidence of “agreement” between models and observations.

The trouble with the ‘overlapping error bars’ argument – which seems to be a favorite statistical ploy of the IPCC – is that it cuts both ways: the other ends of the error bars, where there is no overlap, will suggest even larger disagreement between models and observations than if no error bars were used in the first place!
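The arithmetic behind this point can be sketched for two symmetric error bars: overlap only means the smallest possible difference is zero, while the far ends allow a difference larger than the one between the central values. The numbers below are arbitrary and the function name is hypothetical.

```python
# Hypothetical sketch: compare two values with symmetric error bars and report
# the central difference, whether the bars overlap, and the smallest and
# largest differences allowed by the bar ends.


def interval_comparison(center_a, err_a, center_b, err_b):
    lo_a, hi_a = center_a - err_a, center_a + err_a
    lo_b, hi_b = center_b - err_b, center_b + err_b
    overlap = lo_a <= hi_b and lo_b <= hi_a
    return {
        "central": abs(center_a - center_b),
        "overlap": overlap,
        # 0 when the bars overlap, otherwise the gap between the near ends
        "min_diff": 0.0 if overlap else max(lo_a - hi_b, lo_b - hi_a),
        # distance between the far ends
        "max_diff": max(hi_a - lo_b, hi_b - lo_a),
    }


print(interval_comparison(1.0, 0.4, 0.5, 0.2))
```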

Nothing is gained with the statistical obfuscation of using overlapping error bars…except to demonstrate that the models and observations do not necessarily disagree.

That is very different from saying they do agree.

THE BOTTOM LINE

It would be difficult to overstate the importance of the results, if they hold up to scrutiny: The observed rate of warming of the ocean has been too weak to be consistent with a sensitive climate system. This is demonstrated with the IPCC models themselves.

The resulting climate sensitivity (around 1.3 deg. C) just happens to be about the same sensitivity I have been getting using the simple forcing-feedback-diffusion model to match the Levitus observations of ocean warming during 1955-2010 directly.

Finally, it should be mentioned the above analysis assumes that there has been no significant natural source of warming during 1955-1999. If there has, then the diagnosed climate sensitivity would be even lower still.
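Under the same linear scaling, and assuming a fraction $f$ of the observed warming came from natural causes (a hypothetical parameter, not estimated here), only the remaining $(1-f)$ would be attributed to the forcing. Here $\overline{\Delta T}_{\mathrm{obs}}$ and $\overline{\Delta T}_{\mathrm{model}}$ are the observed and model-average warming of the same layer:

$$
\Delta T_{2100}^{\mathrm{est}} = \Delta T_{2100}^{\mathrm{model}} \, \frac{(1-f)\,\overline{\Delta T}_{\mathrm{obs}}}{\overline{\Delta T}_{\mathrm{model}}}, \qquad 0 \le f \le 1
$$

With $f = 0$ this reduces to the scaling described earlier; any $f > 0$ lowers the estimate.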

I would be surprised if none of the climate modelers has performed a basic analysis similar to the one above. But since the results would be so damaging to the IPCC’s claims of much greater climate sensitivity (greater future warming), I would expect those results never to see the light of day.
