“The American Geophysical Union plans to announce that 700 researchers have agreed to speak out on the issue. Other scientists plan a pushback against congressional conservatives who have vowed to kill regulations on greenhouse gas emissions.”

A new article in the LA Times says that the American Geophysical Union (AGU) is enlisting the help of 700 scientists to fight back against a new Congress that is viewed as a bunch of backwoods global warming deniers who are standing in the way of greenhouse gas regulations and laws required to save humanity from itself.

Scientific truth, after all, must prevail. And these scientists apparently believe they have been endowed with the truth of what has caused recent warming.

The message just hasn’t gotten across.

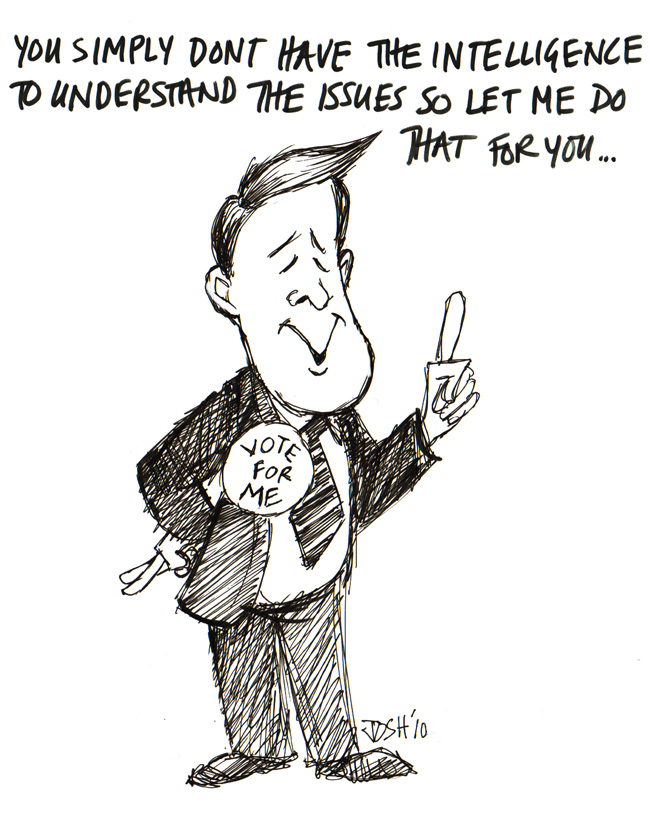

We skeptics are not smart enough to understand the science. We and the citizens of America, and the representatives we have just elected to go to Washington, just need to listen to them and let them tell us how we should be allowed to live.

OK, so, let me see if I understand this.

After 20 years, billions of dollars in scientific research and advertising campaigns, cooperation from the public schools, TV specials and concerts by a gaggle of entertainers, end-of-the-world movies, our ‘best’ politicians, heads of state, presidents, the United Nations, and complicity by most of the news media, it has been decided that the American public is not getting the message on global warming!?

Are they serious!?

Americans (hell, most of humanity) have already heard the 20 different ways we will all die miserable deaths from our emissions of that life-giving (er, I mean poisonous) gas, carbon dioxide, that we are adding to the atmosphere every day.

So, NOW it’s no more Mister Nice Guy? Give me a break.

Finally Time for a REAL Debate?

Actually, this announcement is a good thing. There has been a persistent refusal on the part of the elitist, group-think, left-leaning class of climate scientists to even debate the global warming issue in public. Maybe they have considered it beneath them to debate those of us who are clearly wrong on the global warming issue.

A complaint many of us skeptics have had for years is that those who constitute the “scientific consensus” (whatever that means) will not engage in public debates on global warming. Al Gore won’t even answer questions from the press.

This is why you will mostly hear only politicians and U.N. bureaucrats give pronouncements on the science. They are already adept at weaving a good story with carefully selected facts and figures.

Why has the global warming message been presented mostly by politicians and bureaucrats up until now? Probably because it is too dangerous to put their scientists out there.

Scientists might admit to something counterproductive — like uncertainty — which would jeopardize what the politicians have been trying to accomplish for decades — control over energy, which is necessary for everything that humans do.

Scientists Ready to Enter the Lion’s Den

The LA Times article goes on to explain how there will be “scientists prepared to go before what they consider potentially hostile audiences on conservative talk radio and television shows.”

Gee, how brave of them.

Kind of like when I went up against Henry Waxman? Or Barbara Boxer?

I can sympathize with Republicans’ desire to have hearings to investigate how your tax dollars have been spent on this issue. But I will guarantee that if such hearings are held, the news media will make it sound like Galileo is being tried all over again.

As if climate scientists are objective seekers of the truth. I hate to break it to you, but scientists are human. Well... most of us are, anyway.

Most have strong personal, quasi-religious views of the role of humans in the natural world, and this inevitably guides how they interpret measurements of the climate system. Especially the young ones who have been indoctrinated on the subject.

Those few of us who are publishing climate researchers and who are willing to take the risk of speaking out on the biased science on this issue are now late in our careers, and we have seen the climate research field be transformed from one where “climate change” used to necessarily imply natural climate change, to one where nature does not have the power to cause its own change — only mankind does.

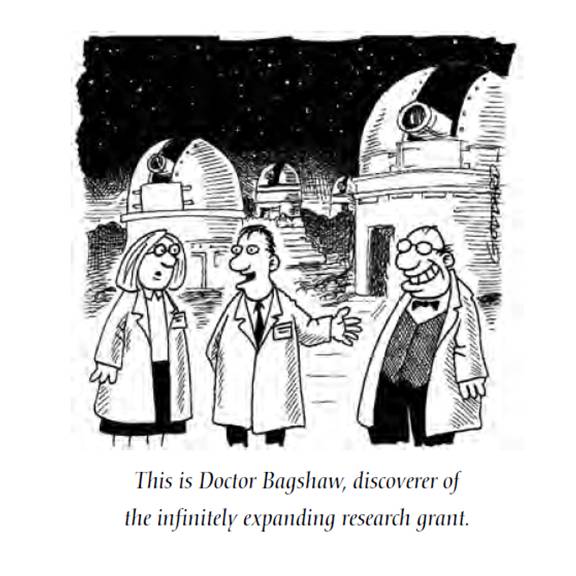

I have repeatedly pointed out how virtually all global warming research funds either (1) build the case for humanity as the primary cause of recent warming, or (2) simply assume humans are the cause.

Virtually NO funding has supported research into the possibility that warming might be mostly part of a natural climate cycle. And if you give scientists enough money to find something, they will do their best to find it.

Politicians have orchestrated and guided this effort from the outset, and scientists like to believe they are helping to Save the Earth when they participate in global warming research.

Anthropogenic Global Warming is a Hypothesis, Nothing More

What the big-government funded climate science community has come up with is a plausible hypothesis which is being passed off as a proven explanation.

Science advances primarily by searching for new and better explanations (hypotheses) for how nature works. Unfortunately, this basic task of science has been abandoned when it comes to explaining climate change.

About the only alternative explanation they have mostly ruled out is an increase in the total output of the sun.

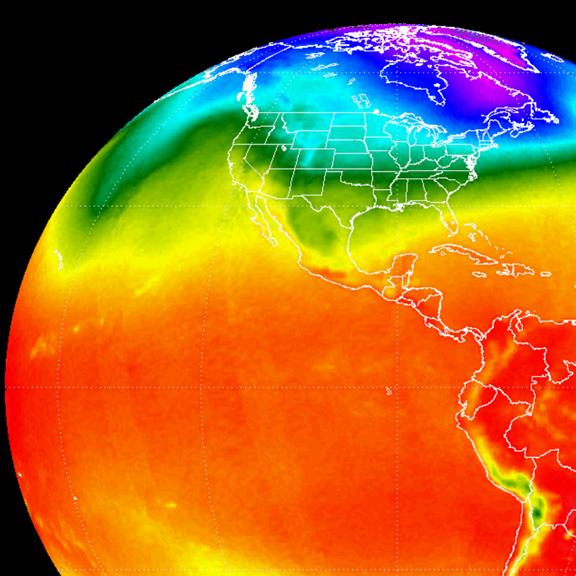

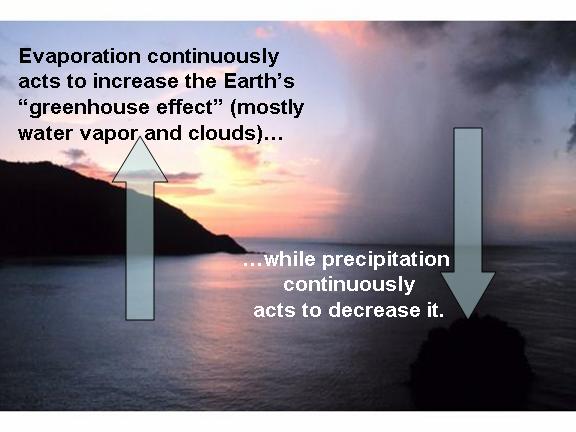

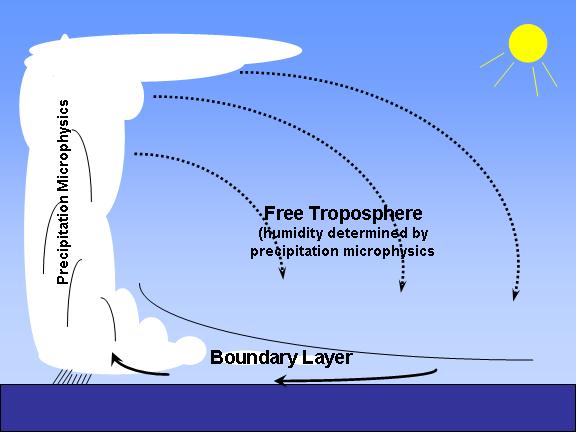

The possibility that small changes in ocean circulation have caused clouds to let in more sunlight is just one of many alternative explanations which are being ignored.

Not only have natural, internal climate cycles been ignored as a potential explanation, some researchers have done their best to revise climate history to do away with events such as the Medieval Warm Period and Little Ice Age. This is how the ‘hockey stick’ controversy got started.

If you can get rid of all evidence for natural climate change in Earth’s history, you can make it look like no climate changes happened until humans (and cows) came on the scene.

Bring It On

I look forward to the opportunity to debate a scientist from the other side who actually knows what they are talking about. I’ve gone one-on-one with some speakers who so mangled the consensus explanation of global warming that I had to use up half my speaking time cleaning up the mess they made.

Those few I have debated in a public forum who know what they are talking about are actually much more reserved in their judgment on the subject than those whom pop culture presents to us.

But for those newbies who want to enter the fray, I have a couple of pieces of advice on preparation.

First, we skeptics already know your arguments… it would do you well to study up a little on ours.

And second, those of us who have been at this a long time actually knew Galileo. Galileo was a good friend of ours. And you are no Galileo.
