[NOTE: What follows assumes the direct (no-feedback) infrared radiative effects of greenhouse gases (water vapor, CO2, methane, etc.) on the Earth’s radiative budget are reasonably well understood. If you want to challenge that assumption, your time might be better spent here.]

I was recently asked by a reader to comment on a new paper by Schmidt et al. that puts some numbers behind a common question: what fraction of the Earth’s greenhouse effect is due to carbon dioxide?

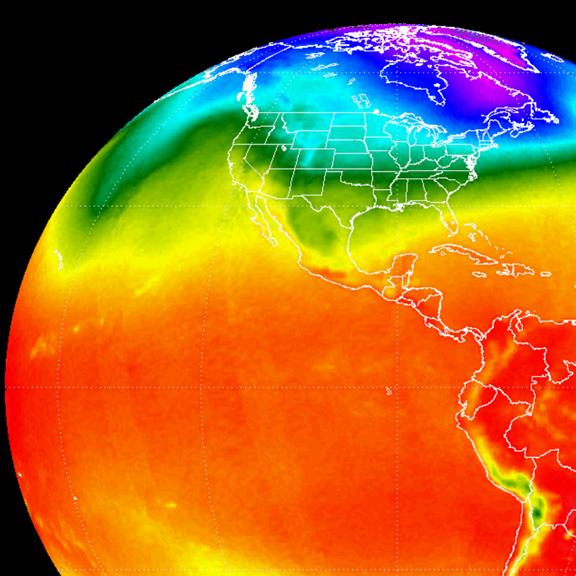

There is a wide variety of answers to this question, depending on how you define “greenhouse effect”, what your assumed baseline is, and so on. Conceptually, in any greenhouse atmosphere, greenhouse gases warm the lower layers and cool the upper layers compared to what would happen if those gases were not present. That never changes. What depends greatly upon your assumptions is how you compute the relative magnitude (say, in percent) of that warming.

Note that the greenhouse effect can only be calculated from theory. It isn’t a physical variable that you can measure, like temperature. It is a radiative process that affects the atmosphere’s energy budget at all altitudes and warms the surface, and its components must be calculated from radiative transfer theory and the IR absorption characteristics of greenhouse gases (and clouds).

The Wrong Question

I will argue that if what we are REALLY interested in is how much the Earth’s greenhouse effect will be enhanced by adding CO2 to the atmosphere (the only reason we are interested in the CO2 issue anyway, right?), then the above question is not very relevant.

In fact, the answer to it can badly mislead us. This is easy to show with two simple examples.

First, assume there was NO naturally occurring carbon dioxide in the atmosphere, and we added 300 ppm. In that case, the natural influence of CO2 on the Earth’s greenhouse effect would be zero, but the influence of adding 300 ppm would be quite significant.

Now, as the second example let’s assume the natural CO2 concentration is high, say 1,000 ppm, and THEN we added 300 ppm. In this second case, the natural role of CO2 in the Earth’s greenhouse effect would be very significant, but our addition of 300 ppm more would have a relatively small direct warming influence.

This is because the more CO2 there is in the atmosphere, the more “saturated” the CO2 portion of the greenhouse effect becomes, a well-known feature that is usually computed with a standard, simplified logarithmic formula.
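A minimal sketch of that logarithmic formula, assuming the widely used fit dF = 5.35 ln(C/C0) W/m² (Myhre et al., 1998), where C0 is the starting concentration and C the new one. It compares adding 300 ppm to a low baseline (280 ppm, roughly pre-industrial) with adding the same 300 ppm to the 1,000 ppm baseline of the second example. The fit is only an approximation to detailed radiative transfer calculations, and it is undefined for a zero baseline, so it cannot be used for the first (no-CO2) example.

```python
import math

# Assumed coefficient of the widely used simplified CO2 forcing fit, in W/m^2
# (Myhre et al., 1998). The fit is an approximation, not a radiative transfer model.
ALPHA = 5.35


def co2_forcing(c_new_ppm: float, c_base_ppm: float) -> float:
    """Direct (no-feedback) forcing, W/m^2, from raising CO2 from c_base_ppm to c_new_ppm."""
    if c_base_ppm <= 0:
        # ln(C/0) is undefined: the fit cannot describe adding CO2 to a CO2-free atmosphere.
        raise ValueError("logarithmic fit is undefined for a zero baseline")
    return ALPHA * math.log(c_new_ppm / c_base_ppm)


ADDED_PPM = 300.0
for base in (280.0, 1000.0):
    forcing = co2_forcing(base + ADDED_PPM, base)
    print(f"{base:.0f} -> {base + ADDED_PPM:.0f} ppm: {forcing:.2f} W/m^2")
```

Run as written, it reports roughly 3.9 W/m² for the low baseline versus roughly 1.4 W/m² for the high one: the same 300 ppm produces less than half the forcing when it is added on top of more CO2.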

Everyone already knows about this mostly saturated condition of carbon dioxide’s radiative effect, even the IPCC. Adding more and more CO2 causes incrementally less and less warming (again, assuming no feedback, which is a separate issue), but the radiative effect of CO2 in the atmosphere is not totally saturated.

And it never can be, for the same reason that you can keep dividing a number by two forever: the result gets extremely small, but it never reaches zero.
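The halving analogy can be made concrete with the same assumed logarithmic fit (coefficient 5.35 W/m², an assumption rather than anything computed in this post). Under that fit every doubling of CO2 adds the same forcing, so the extra forcing per additional ppm, the derivative 5.35/C, halves with each doubling and shrinks toward zero without ever reaching it. A minimal sketch, valid only over the range where the fit holds:

```python
# Assumed coefficient of the simplified logarithmic CO2 forcing fit, W/m^2.
ALPHA = 5.35


def marginal_forcing_per_ppm(c_ppm: float) -> float:
    """d/dC of ALPHA * ln(C / C0): extra forcing (W/m^2) per additional ppm at concentration C."""
    return ALPHA / c_ppm


concentration = 280.0
for _ in range(6):
    print(f"{concentration:7.0f} ppm: {marginal_forcing_per_ppm(concentration):.6f} W/m^2 per ppm")
    concentration *= 2  # each doubling halves the marginal effect, but it never reaches zero
```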

So what do these two examples tell us? If the natural contribution of CO2 to the greenhouse effect was ZERO, then the warming effect of our addition of 300 ppm would be relatively large. But if the natural contribution of CO2 to the greenhouse effect was already large, then the incremental warming effect of adding more will be small.

An extreme example would be Venus, which has 230,000 times as much CO2 in its atmosphere as Earth does. Our addition of CO2 to that atmosphere would have essentially no effect.

The point is that knowing what percentage of the Earth’s natural greenhouse effect comes from carbon dioxide alone tells us little of use in determining how much warming might result from adding more CO2 to the atmosphere.

How Much is the Earth’s Greenhouse Effect Enhanced by Adding More CO2?

This is the question we should be asking, and it can be easily answered with a couple of numbers quoted in the Schmidt et al. article.

Schmidt et al. assume the commonly quoted 33 deg. C as the amount of surface warming due to the Earth’s greenhouse effect, and for the time being I will assume the same. (In my next blog post, I will explain why this number is NOT a good measure of the Earth’s greenhouse effect.)
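The 33 deg. C figure is conventionally obtained as the difference between the global-mean surface temperature and the effective radiating temperature of a planet that absorbs the same sunlight but has no greenhouse effect. A minimal sketch of that bookkeeping, assuming a solar constant of 1361 W/m², a planetary albedo of 0.30, and a 288 K surface (all three are assumed inputs, not values from this post):

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
S0 = 1361.0             # assumed total solar irradiance, W/m^2
ALBEDO = 0.30           # assumed planetary albedo
T_SURFACE = 288.0       # assumed global-mean surface temperature, K


def effective_temperature(s0: float, albedo: float) -> float:
    """Temperature (K) of a blackbody emitting the absorbed sunlight,
    averaged over the whole sphere (hence the factor of 4)."""
    absorbed = s0 * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


t_e = effective_temperature(S0, ALBEDO)
print(f"T_e = {t_e:.1f} K, greenhouse warming = {T_SURFACE - t_e:.1f} K")
```

With these inputs T_e comes out near 255 K, and the difference from 288 K is about 33 deg. C.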

Thirteen years ago, Danny Braswell and I did our own calculations to explore the greenhouse effect with a built-from-scratch radiative transfer model, incorporating the IR radiative code developed by Ming Dah Chou at NASA Goddard. The Chou code has also been used in some global climate models.

We calculated, as others have, a direct (no feedback) surface warming of about 1 deg. C as a result of doubling CO2 (“2XCO2”).
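The radiative transfer model itself can’t be reproduced here, but a common zero-dimensional cross-check (not the Chou-code calculation described above) divides the logarithmic forcing for a doubling, 5.35 ln 2 W/m², by the no-feedback response of a blackbody radiating at the effective temperature, dF/dT = 4σT_e³. The 5.35 coefficient and T_e = 255 K are assumptions.

```python
import math

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
ALPHA = 5.35            # assumed log-fit coefficient for CO2 forcing, W/m^2
T_E = 255.0             # assumed effective radiating temperature, K

forcing_2x = ALPHA * math.log(2.0)        # forcing from doubling CO2, W/m^2
planck_response = 4.0 * SIGMA * T_E**3    # extra emission per K of warming, W m^-2 K^-1
delta_t = forcing_2x / planck_response    # no-feedback warming, K

print(f"F_2x = {forcing_2x:.2f} W/m^2, dF/dT = {planck_response:.2f} W/m^2/K, dT = {delta_t:.2f} K")
```

This crude estimate lands close to the ~1 deg. C figure; more complete treatments differ somewhat in the no-feedback response they use.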

So these two numbers immediately give us a percentage increase in the greenhouse effect: doubling atmospheric CO2 (which will probably happen late in this century) enhances the Earth’s greenhouse effect by about 1/33, or 3%.
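The arithmetic, written out so the two inputs can be varied; the values used are just the 33 deg. C and ~1 deg. C figures from the text, and the function name is illustrative:

```python
def enhancement_percent(added_warming_c: float, total_greenhouse_warming_c: float) -> float:
    """Added warming expressed as a percentage of the total greenhouse warming."""
    return 100.0 * added_warming_c / total_greenhouse_warming_c


# Values quoted in the text: ~1 deg. C direct warming from 2XCO2, 33 deg. C total greenhouse warming.
pct = enhancement_percent(added_warming_c=1.0, total_greenhouse_warming_c=33.0)
print(f"2XCO2 enhances the greenhouse effect by about {pct:.1f}%")  # about 3%
```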

This value (3%) for the enhancement of the Earth’s greenhouse effect from our addition of CO2 is much smaller than the 20% value that Schmidt et al. get, but remember that we are addressing two different issues. I claim that what we should be interested in is the relative size of our enhancement of the greenhouse effect, rather than how much of the Earth’s natural greenhouse effect is due to CO2. The latter question really proves nothing about how much effect adding MORE CO2 to the atmosphere will have.

Next Time: Why 33 deg. C is a Misleading Number

In my next post, I will discuss why the use of 33 deg. C for surface warming due to the greenhouse effect is very misleading. The issue is not new, as it has been known since the 1960s. I wasn’t aware of its central importance to the global warming debate until Dick Lindzen published his 1990 paper, Some Coolness Concerning Global Warming.
