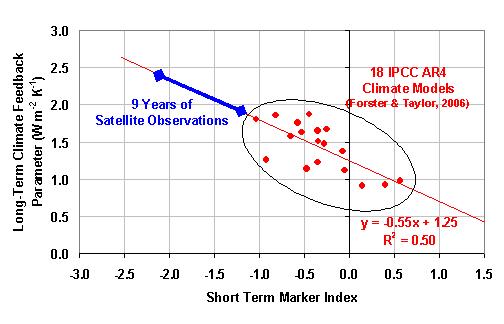

Despite the fact that the magnitude of anthropogenic global warming depends mostly upon the strengths of feedbacks in the climate system, there is no known way to actually measure those feedbacks from observational data.

The IPCC has admitted as much on p. 640 of its AR4 report, at the end of Section 8.6, which is entitled “Climate Sensitivity and Feedbacks”:

“A number of diagnostic tests have been proposed…but few of them have been applied to a majority of the models currently in use. Moreover, it is not yet clear which tests are critical for constraining future projections (of warming). Consequently, a set of model metrics that might be used to narrow the range of plausible climate change feedbacks and climate sensitivity has yet to be developed.”

This is a rather amazing admission. Of course, since these statements are lost in a sea of favorable (but likely superfluous) comparisons between the models and various aspects of today’s climate system, one gets the impression that the 99% of the IPCC’s statements that support the climate models far outweigh the 1% that might cast doubt.

But the central importance of feedbacks to projections of future climate makes them far more important to policy debates than all of the ways in which model behavior might resemble the current climate system. So, why has it been so difficult to measure feedbacks in the climate system? This question is not answered in the IPCC reports because, as far as I can tell, no one has bothered to dig into the reasons.

Rather unexpectedly, I have been asked to present our research results on this subject at a special session on feedbacks at the Fall AGU meeting in San Francisco in mid-December. In that short 15-minute presentation, I hope to bring some clarity to an issue that has remained muddied for too long.

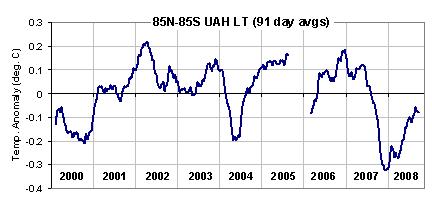

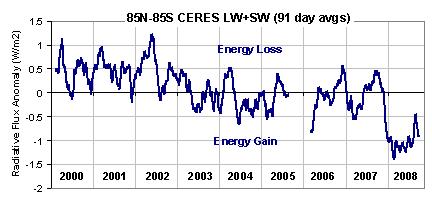

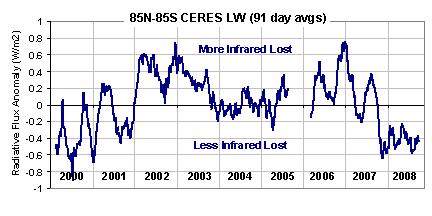

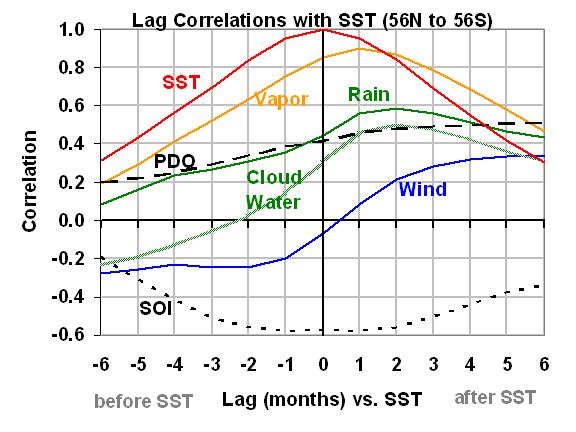

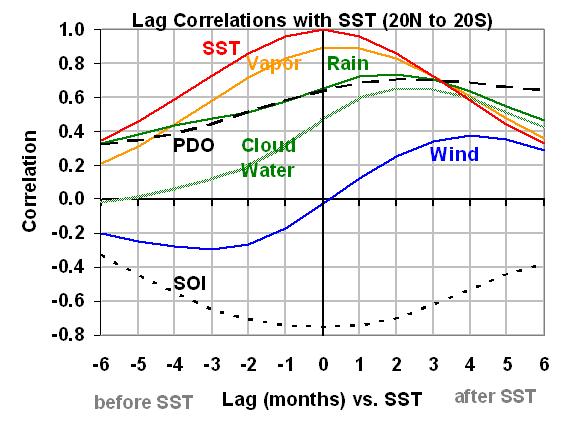

To review, the feedback we are after can be defined as the amount of global average radiative change caused by a temperature change. The main reason it is so difficult to diagnose the true feedbacks operating in the climate system is that this definition is NOT the same as what we can actually measure from satellites, which is the amount of radiative change that accompanies a temperature change.

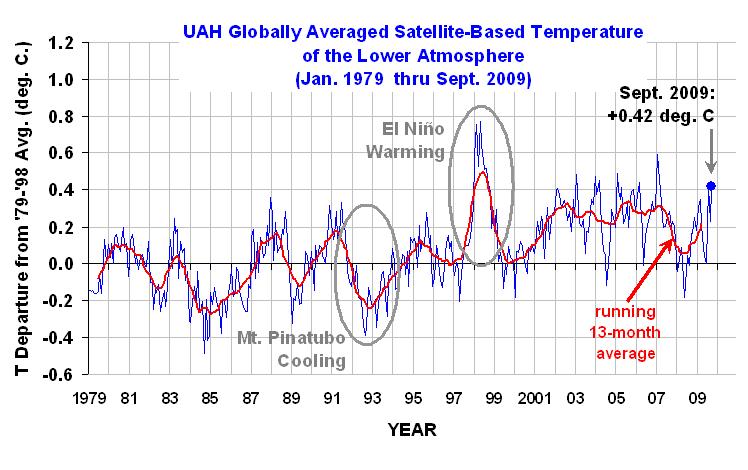

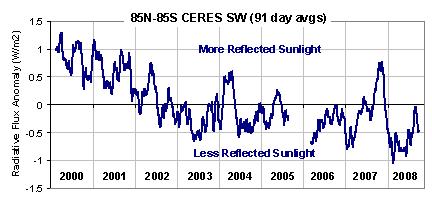

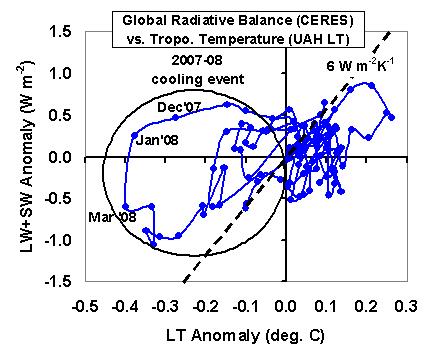

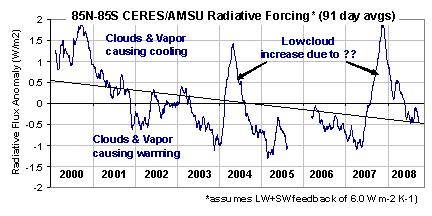

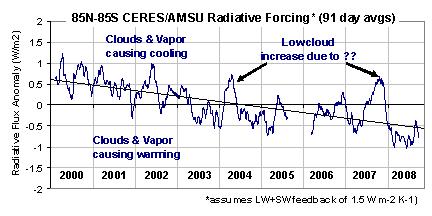

The distinction is that in the real world, the measurements also contain causation running in the direction opposite to feedback. Thus, a change in measured radiative flux results from some unknown combination of (1) temperature causing radiative changes (feedback), and (2) unforced natural radiative changes causing a temperature change (internal forcing).

The internal forcing does not merely add contaminating noise to the diagnosis of feedback – it causes a bias in the direction of positive feedback (high climate sensitivity). This bias exists primarily because forcing and net feedback (including the direct increase of IR radiation with temperature) always have opposite signs, so a misinterpretation of the sum of the two as feedback alone causes a bias.

For instance, for the global average climate system, a decrease in outgoing radiation causes an increase in global average temperature, whereas an increase in temperature must always do the opposite: cause an increase in outgoing radiation. As a result, the presence of forcing mutes the signature of net feedback. Similarly, the presence of feedback mutes the signature of forcing.

The effect of this partial cancellation is that diagnosed net feedbacks come out smaller than what is actually occurring in nature, unless any forcing present is removed from the data before feedbacks are estimated. Unfortunately, we do not know which portion of radiative variability is forcing versus feedback, and so researchers have simply ignored the issue (if they were even aware of it) and assumed that what they have been measuring is feedback alone. As a result, the climate system creates the illusion of being more sensitive than it really is.
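The bias described above can be illustrated with a toy model. What follows is only a sketch under stated assumptions, not the analysis behind our results: a zero-dimensional energy balance model in which temperature responds to a slowly varying internal radiative forcing `n` (standing in for unforced cloud changes), a non-radiative forcing `s`, and a known feedback parameter `lam`. The heat capacity, noise amplitudes, autocorrelation, and the value of 3.0 for the true feedback parameter are all illustrative choices, and the function names are hypothetical.

```python
import numpy as np


def simulate(lam, sigma_n, sigma_s, n_steps=20000, heat_capacity=10.0,
             rho=0.9, seed=0):
    """Toy energy balance model: C dT/dt = N + S - lam*T (all values assumed)."""
    rng = np.random.default_rng(seed)

    # N: internal radiative forcing, modeled here as AR(1) noise with
    # standard deviation sigma_n (an assumption, e.g. unforced cloud changes).
    shocks = sigma_n * np.sqrt(1.0 - rho**2) * rng.standard_normal(n_steps)
    n = np.zeros(n_steps)
    for t in range(1, n_steps):
        n[t] = rho * n[t - 1] + shocks[t]

    # S: non-radiative forcing, modeled as white noise (also an assumption).
    s = sigma_s * rng.standard_normal(n_steps)

    # Step the temperature anomaly forward in time (forward Euler, dt = 1).
    temp = np.zeros(n_steps)
    for t in range(n_steps - 1):
        temp[t + 1] = temp[t] + (n[t] + s[t] - lam * temp[t]) / heat_capacity

    # What a satellite would see: feedback response minus radiative forcing.
    flux = lam * temp - n
    return temp, flux


def diagnosed_feedback(temp, flux):
    """Regression slope of measured flux against temperature."""
    slope, _intercept = np.polyfit(temp, flux, 1)
    return slope


TRUE_LAMBDA = 3.0  # assumed true feedback parameter, W m^-2 K^-1

no_radiative = diagnosed_feedback(*simulate(TRUE_LAMBDA, sigma_n=0.0, sigma_s=3.0))
mixed = diagnosed_feedback(*simulate(TRUE_LAMBDA, sigma_n=1.0, sigma_s=3.0))
radiative_only = diagnosed_feedback(*simulate(TRUE_LAMBDA, sigma_n=1.0, sigma_s=0.0))

print(f"true feedback parameter:          {TRUE_LAMBDA:.2f}")
print(f"diagnosed, no radiative forcing:  {no_radiative:.2f}")
print(f"diagnosed, mixed forcing:         {mixed:.2f}")
print(f"diagnosed, radiative forcing only: {radiative_only:.2f}")
```

In this sketch the regression recovers the true feedback parameter only when the radiative forcing is switched off. As soon as internal radiative forcing is present, the diagnosed value falls below the true one, which would be read as higher climate sensitivity.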

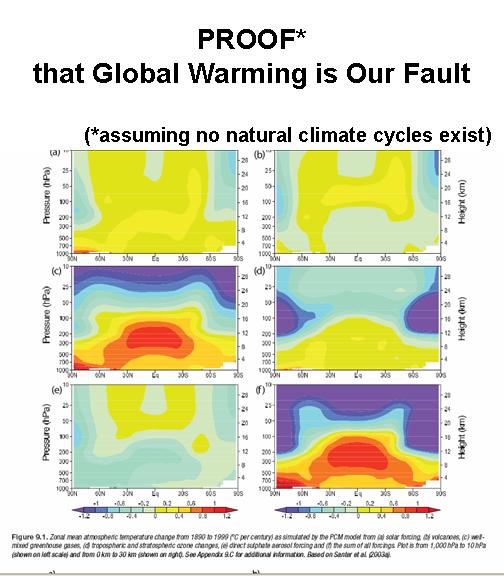

One implication of this is that it is not a sufficient test of the feedbacks in climate models to simply compare temperature changes to radiation changes. This is because the same relationship between temperature and radiation can be caused by either strong forcing accompanied by a large feedback parameter (which would be low climate sensitivity), or by weak forcing accompanied by a small feedback parameter (which would be high climate sensitivity).

Only in the case of radiative forcing being either zero or constant in time – situations that never happen in the real world – can feedback be accurately estimated with current methods.
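The argument of the last few paragraphs can be written compactly. This is a sketch that assumes a simple linear energy balance, with symbols introduced here purely for illustration: heat capacity $C_p$, temperature anomaly $T$, internal radiative forcing $N$, non-radiative forcing $S$, true feedback parameter $\lambda$, and measured outgoing radiative flux anomaly $R$.

$$
C_p \frac{dT}{dt} = N + S - \lambda T, \qquad R = \lambda T - N
$$

Regressing the measured flux on temperature then gives a diagnosed feedback parameter of

$$
\hat{\lambda} = \frac{\operatorname{cov}(R, T)}{\operatorname{var}(T)} = \lambda - \frac{\operatorname{cov}(N, T)}{\operatorname{var}(T)}
$$

Because radiative forcing that persists in time warms the system, $\operatorname{cov}(N, T)$ is generally positive, so $\hat{\lambda}$ falls below $\lambda$. The two agree only when $\operatorname{cov}(N, T) = 0$, which is the zero-or-constant forcing case. The same $\hat{\lambda}$ can also arise from different combinations of $\lambda$ and $N$, which is why the regression slope by itself cannot separate strong forcing with a large feedback parameter from weak forcing with a small one.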

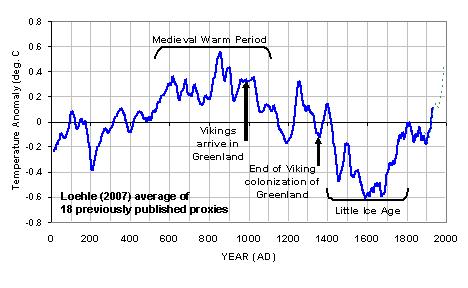

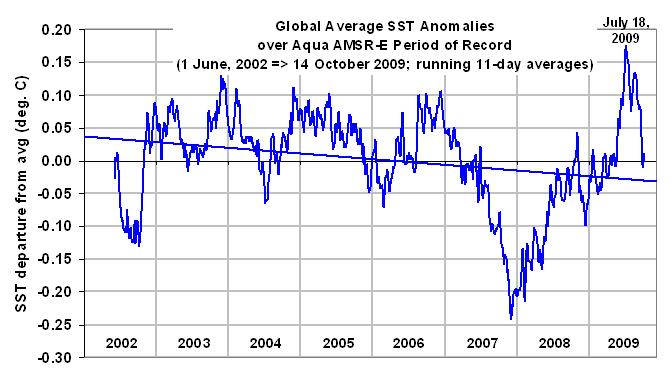

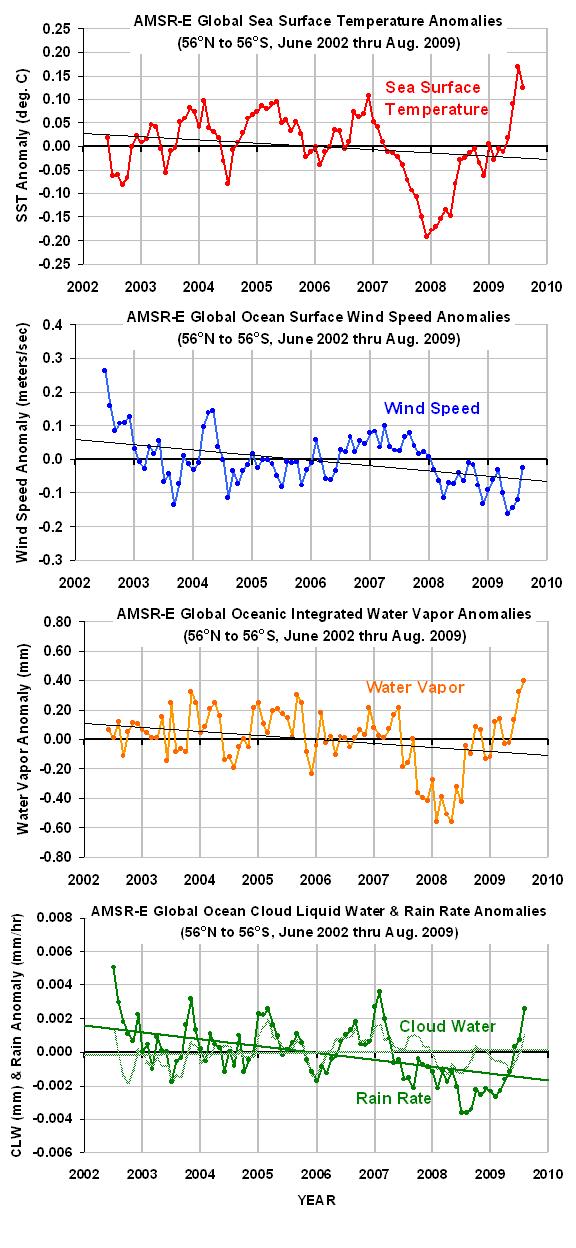

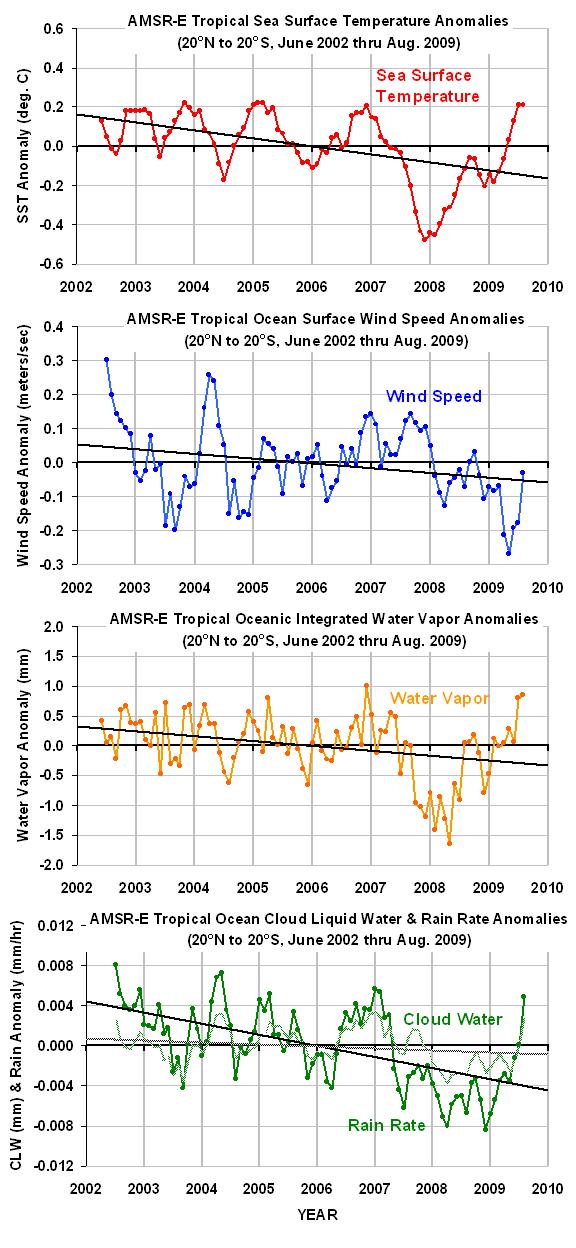

Our continuing analysis of satellite and climate model data has yet to yield a good solution to this problem. Unforced cloud changes in the climate system not only give the illusion of positive feedback, they might also offer a potential explanation for past warming (and cooling). [I believe these changes to be mostly chaotic in origin, but the possibility also opens the door to more obscure (and controversial) mechanisms such as the modulation of cloud cover by cosmic ray activity.]

But without accurate long-term measurements of global cloud cover changes, we might never know to what extent global warming is simply a manifestation of natural climate variability, or whether cloud feedbacks are positive or negative. And without direct evidence, the IPCC can conveniently point to carbon dioxide change as the culprit. But this explanation seems rather anthropocentric to me, since it is easier for humans to keep track of global carbon dioxide changes than cloud changes.

Also, the IPCC can conveniently (and truthfully) claim that the behavior of their models is broadly “consistent with” the observed behavior of the real climate system. Unfortunately, this is then misinterpreted by the public, politicians, and policymakers as a claim that the amount of warming those models produce (a direct result of feedback) has been tested, which is not true.

As the IPCC has admitted, no one has yet figured out how to perform such a test. And until such a test is devised, the warming estimates produced by the IPCC’s twenty-something climate models are little more than educated guesses. It verges on scientific malpractice that politicians and the media continue to portray the models as accurate in this regard, without any objections from the scientists who should know better.
