In a little over a week I will be giving an invited paper at the Fall meeting of the American Geophysical Union (AGU) in San Francisco, in a special session devoted to feedbacks in the climate system. If you don’t already know, feedbacks are what will determine whether anthropogenic global warming is strong or weak, with cloud feedbacks being the most uncertain of all.

In the 12 minutes I have for my presentation, I hope to convince as many scientists as possible of the futility of previous attempts to estimate cloud feedbacks in the climate system. And unless we can measure cloud feedbacks in nature, we cannot test the feedbacks operating in computerized climate models.

WHAT ARE FEEDBACKS?

To review, the main feedback issue is this: In response to the small direct warming effect of more CO2 in the atmosphere, will clouds change in ways that amplify the warming (e.g. a cloud reduction letting more sunlight in, which would be a positive feedback), or decrease the warming (e.g. a cloud increase causing less sunlight to be absorbed by the Earth, which would be a negative feedback)?

In the former case, we could be heading for a global warming catastrophe. In the latter case, manmade global warming might be barely measurable (and previous warming would be mostly the result of some natural cause). All climate models tracked by the IPCC now have positive cloud feedbacks, by varying amounts, which partly explains why the IPCC expects anthropogenic global warming to be so strong.

Obviously, we need to know what feedbacks operate in the climate system.

ESTIMATING FEEDBACKS: AN UNSOLVED PROBLEM

I am now quite convinced that most, if not all, previous estimates of feedback from our satellite observations of natural climate variability are in error. Furthermore, this error is usually in the direction of positive feedback, which will then give the illusion of a ‘sensitive’ climate system. More on that later.

The goal seems simple enough: to measure cloud feedbacks, we need to determine how much clouds change in response to a temperature change. But most researchers do not realize that this is not possible without accounting for causation in the opposite direction, i.e., the extent to which temperature changes are a response to cloud changes.

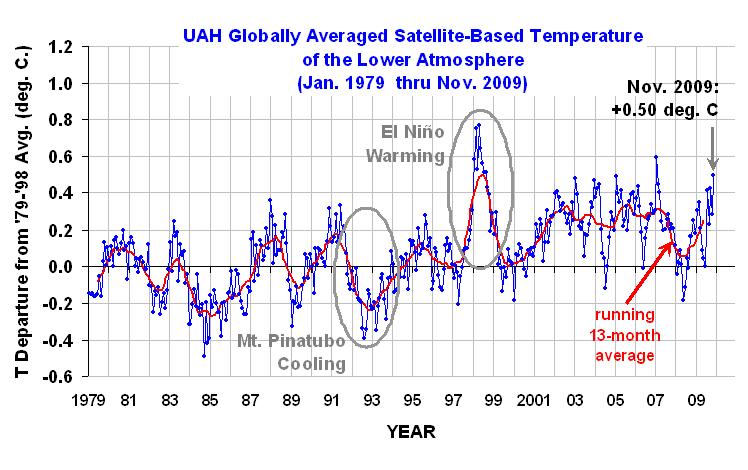

As I will demonstrate in my AGU talk on December 16, for all practical purposes it is not possible (at least not yet) to measure cloud feedbacks because the two directions of causation are intermingled in nature. As a result, it is not possible with current methods to measure feedbacks in response to a radiative forcing event such as a change in cloud cover, or even a major volcanic eruption such as that of Mt. Pinatubo in 1991.

The reason is that the size of the radiative forcing of a temperature change overwhelms the size of the radiative feedback upon that temperature change, and our satellite measurements cannot tell the difference. There are only two special situations where it can be done: (1) the theoretical case of an instantaneously imposed and then constant amount of radiative forcing…which never happens in the real world; and (2) the real world case where temperature changes are caused non-radiatively. While I will not go into the evidence here, satellite observations suggest that cloud feedbacks in the latter case are strongly negative.

Now, if you have an accurate estimate of the radiative forcing of temperature change, accurate estimates of radiative feedback can be made. But we do not have good estimates of this forcing during natural climate variations. Only in climate model simulations where a known amount of radiative forcing is imposed upon the model can this be done. (In another method, if you try to estimate feedback by measuring how fast the ocean responds, you also run into problems because your answer depends upon how fast and how deep in the ocean you assume the temperature change will extend.)
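One way to make this concrete is a simple, time-dependent energy budget. The notation below is assumed for illustration and is not taken from this post: $C_p$ is a heat capacity, $T$ a temperature anomaly, $N$ a radiative forcing, $S$ a non-radiative forcing, $\lambda$ the net feedback parameter, and $R$ the net outgoing radiative flux anomaly that a satellite would see.

```latex
C_p \frac{dT}{dt} = N(t) + S(t) - \lambda T(t),
\qquad
R(t) = \lambda T(t) - N(t)

\frac{\operatorname{cov}(R, T)}{\operatorname{var}(T)}
  = \lambda - \frac{\operatorname{cov}(N, T)}{\operatorname{var}(T)},
\qquad
\frac{\operatorname{cov}(R + N, T)}{\operatorname{var}(T)} = \lambda
```

Under these assumptions, regressing the measured flux on temperature returns $\lambda$ only if the forcing $N$ is known and can be added back, or if $N$ is uncorrelated with $T$ (for example, when the temperature change is caused non-radiatively).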

EXAMPLE 1: FEEDBACKS FROM THE CHANGE IN SEASONS

Once one realizes that clouds causing a temperature change (forcing) corrupts our estimates of temperature causing a cloud change (feedback), it becomes apparent that many of the previous attempts to estimate feedback will not work.

For instance, many researchers think that you can estimate feedbacks from the seasonal cycle in average solar illumination of the Earth and the resulting temperature response. There is about a 7% peak-to-peak variation in the amount of solar energy reaching the Earth during the year, with the maximum occurring in early January, when the Earth is closest to the sun, and the minimum in early July. So, one would think we could measure by how much this change in solar heating causes a change in temperature.
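As a rough check on the size of that annual variation, here is a short sketch that assumes it comes entirely from the Earth's slightly elliptical orbit. The eccentricity value of 0.0167 is my assumption (it is not stated above), and sunlight is taken to fall off as the inverse square of the Earth-sun distance.

```python
# Assumed value: Earth's orbital eccentricity (not given in the text above).
ECCENTRICITY = 0.0167


def peak_to_peak_fraction(e):
    """Fractional difference in sunlight between closest and farthest approach."""
    # Flux scales as 1/r^2, with r = a(1 - e) at perihelion
    # and r = a(1 + e) at aphelion, so the semi-major axis a cancels.
    return ((1.0 + e) / (1.0 - e)) ** 2 - 1.0


print(f"{100 * peak_to_peak_fraction(ECCENTRICITY):.1f}% peak-to-peak")
```

With that eccentricity the result comes out at roughly 7%, consistent with the figure quoted above.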

The trouble is that global circulation patterns also change dramatically with the seasons, mostly due to the large difference in land masses between the Northern and Southern Hemispheres. Since cloud formation is affected by a variety of circulation induced effects (fronts, temperature inversions, etc.), the cloud cover and thus the natural shading of the Earth by clouds also changes with the seasons, through these seasonal circulation changes.

These non-temperature effects on cloud cover will confound the estimation of feedbacks, because their magnitude is considerably larger than the magnitude of the feedbacks. If the Earth were 100% covered by ocean that had a constant depth everywhere, then it might be possible to estimate feedbacks in this way…but not in the real world.

EXAMPLE 2: FEEDBACKS FROM EL NINO & LA NINA

Researchers have also made feedback estimates from the anomalously warm conditions that exist during El Nino, and the cool conditions during La Nina. But this runs into a problem similar to that of estimating feedbacks from the change in seasons: there are substantial variations in global circulation patterns between El Nino and La Nina, especially in the tropics. These circulation changes can induce cloud changes – wholly apart from temperature-induced changes – and there is no known way to separate the circulation-induced cloud changes (forcing) from the feedback-induced changes.

THE ERRORS WHICH RESULT FROM PREVIOUS FEEDBACK ESTIMATES

So, how do these problems impact our estimates of feedback? Outside of certain special circumstances, they will cause a bias toward positive feedback. The reason is that radiative forcing and radiative feedback always work in opposition to each other. (Here I am speaking of the net feedback parameter, which also contains the increase in loss of infrared radiation by the Earth in direct response to warming.)

Since our satellites measure the two effects combined, if you assume only feedback is being measured when both feedback and forcing are occurring, then you will underestimate the feedback parameter, which is a bias in the direction of positive feedback.
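To illustrate the direction of this bias, here is a minimal sketch using a toy energy balance model. It is an illustration under stated assumptions, not the analysis behind the AGU talk: the 50 m ocean mixed layer, the monthly time step, the red-noise forcing, and all function names are hypothetical choices made for this example. The "satellite" flux is the feedback response minus the radiative forcing, and the feedback parameter is diagnosed as the regression slope of that flux against temperature.

```python
import numpy as np

SECONDS_PER_MONTH = 30 * 86400.0
# Assumed heat capacity of a 50 m ocean mixed layer, in J m^-2 K^-1.
HEAT_CAPACITY = 50.0 * 1000.0 * 4180.0


def red_noise(rng, n, std, persistence=0.9):
    """AR(1) noise, standing in for forcing that persists for several months."""
    x = np.zeros(n)
    shocks = rng.normal(0.0, std, n)
    for i in range(1, n):
        x[i] = persistence * x[i - 1] + shocks[i]
    return x


def run_model(feedback, radiative_std, nonradiative_std, n=50000, seed=0):
    """Integrate Cp dT/dt = N + S - feedback * T with monthly Euler steps."""
    rng = np.random.default_rng(seed)
    forcing_rad = red_noise(rng, n, radiative_std)        # N: e.g. cloud changes
    forcing_nonrad = red_noise(rng, n, nonradiative_std)  # S: e.g. ocean mixing
    temp = np.zeros(n)
    step = SECONDS_PER_MONTH / HEAT_CAPACITY
    for i in range(n - 1):
        net_heating = forcing_rad[i] + forcing_nonrad[i] - feedback * temp[i]
        temp[i + 1] = temp[i] + step * net_heating
    # What a satellite would see: feedback and forcing combined.
    flux = feedback * temp - forcing_rad
    return temp, flux, forcing_rad


def regression_slope(x, y):
    """Least-squares slope of y against x."""
    return np.polyfit(x, y, 1)[0]


true_feedback = 3.0  # W m^-2 K^-1, an assumed value
temp, flux, forcing = run_model(true_feedback, 1.0, 1.0)
print("diagnosed, forcing ignored:", regression_slope(temp, flux))
print("diagnosed, forcing removed:", regression_slope(temp, flux + forcing))
temp_s, flux_s, _ = run_model(true_feedback, 0.0, 1.0)
print("diagnosed, non-radiative only:", regression_slope(temp_s, flux_s))
```

In this toy setup the first slope falls below the true value, while the other two recover it, because the radiative forcing is positively correlated with the temperature it causes. How large the bias is depends entirely on the assumed forcing mix and persistence.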

THE IMPACT ON CLIMATE MODEL VALIDATION

I can predict that the climate modelers will claim that we really do not need to know the direction of causation…we can just measure the temperature/cloud relationships in nature, and then adjust the models until they produce the same temperature/cloud relationships.

While this might sound reasonable, it turns out that the radiative signature of forcing is much larger than that of feedback. As a result, one can get pretty good agreement between models and observations even when the model feedbacks are greatly in error. Another way of saying this is that you can get good agreement between the model behavior and observations whether the cloud feedbacks are positive OR negative. This is another fact I will be demonstrating on December 16.

WHERE DO WE GO FROM HERE?

My first task is to convince both observationalists and modelers that much of what they previously believed about atmospheric feedbacks operating in the real world can be tossed out the window. Obviously, this will be no small task when so many climate experts assume that nothing important could have been overlooked after 20 years and billions of dollars of climate research.

But even if I can get a number of mainstream climate scientists to understand that we still do not know whether cloud feedbacks are positive or negative, it is not obvious how to fix the problem. As I suggested a couple of blog postings ago, maybe we should quit trying to test whether a climate model that produces 3 deg. C of warming in response to a doubling of carbon dioxide is “true”, and instead test to see if we can falsify a climate model which only produces 0.5 deg. C of warming. As someone recently pointed out in an email to me, a climate model IS a hypothesis, and in science a hypothesis can only be falsified — not proved true.

From what I have seen in my analysis of output from 18 of the IPCC’s climate models, I’ll bet that we cannot falsify such a model with our current observations of the climate system. I suspect that the climate modeling groups have only publicized models that produce the amount of warming they believe “looks about right”, or “looks reasonable”. Through group-think (or maybe the political leanings of, and pressure from, the IPCC leadership?), they might well have tossed out any model experiments which produced very little warming.

In any event, I believe that the scientific community’s confidence that climate change is now mostly human-caused is seriously misplaced. It is time for an independent review of climate modeling, with experts from other physical (and even engineering) disciplines where computer models are widely used. The importance of the issue demands nothing less.

Furthermore, the computer codes for the climate models now being used by the IPCC should be made available to other researchers for independent testing and experimentation. For U.S.-supported models, the Data Quality Act already requires this, but the law is being largely ignored.

As a (simple) modeler and computer programmer myself, I know that the modeling groups will protest that the models are far too complex and finely tuned to let amateurs play with them. But that’s part of the problem. If the models are that complex and fragile, should we be basing multi-trillion dollar policy decisions on them?
