What is the atmospheric greenhouse effect? It is the warming of the surface and lower atmosphere caused by downward infrared emission by the atmosphere, primarily from water vapor, carbon dioxide, and clouds.

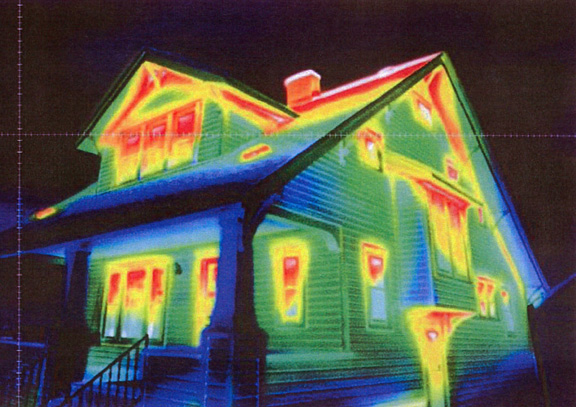

Greenhouse gases and clouds cause the lower atmosphere to be warmer, and the upper atmosphere to be cooler, than if they did not exist, just as thermal insulation in a heated house causes the inside to be warmer and the outside of the house to be cooler than if the insulation were not there. While the greenhouse effect involves energy transfer by infrared radiation and insulation involves conduction, the thermodynamic principle is the same.

Without greenhouse gases, the atmosphere would be unable to cool itself in response to solar heating. But because an IR emitter is also an IR absorber, a greenhouse atmosphere results in warmer lower layers — and cooler upper layers — than if those greenhouse gases were not present.

As discussed by Lindzen (1990, “Some Coolness Concerning Global Warming”), most of the surface warming from the greenhouse effect is “short-circuited” by evaporation and convective heat transfer to the middle and upper troposphere. Nevertheless, the surface is still warmer than if the greenhouse effect did not exist: the Earth’s surface emits an average of around 390 W/m² in the thermal infrared even though the Earth absorbs only 240 W/m² of solar energy.
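As a rough check on those two flux numbers, here is a minimal sketch that converts each one to an equivalent temperature with the Stefan-Boltzmann law. It assumes a perfect blackbody (emissivity of 1), which is my simplification and not something the figures above depend on; the function name is only illustrative.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_temperature(flux_w_m2, emissivity=1.0):
    """Temperature (K) of a surface emitting the given flux (W/m^2).

    Assumes a gray body with constant emissivity; 1.0 means a blackbody.
    """
    return (flux_w_m2 / (emissivity * SIGMA)) ** 0.25


# Average IR emission from the surface vs. absorbed solar energy
t_surface = blackbody_temperature(390.0)
t_no_greenhouse = blackbody_temperature(240.0)

print(f"Emitting 390 W/m^2: {t_surface:.1f} K")
print(f"Emitting 240 W/m^2: {t_no_greenhouse:.1f} K")
print(f"Difference:         {t_surface - t_no_greenhouse:.1f} K")
```

Under that blackbody assumption, the gap between the two temperatures is a simple way to put a number on how much warmer the surface is than it would be if it only had to emit the absorbed solar energy.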

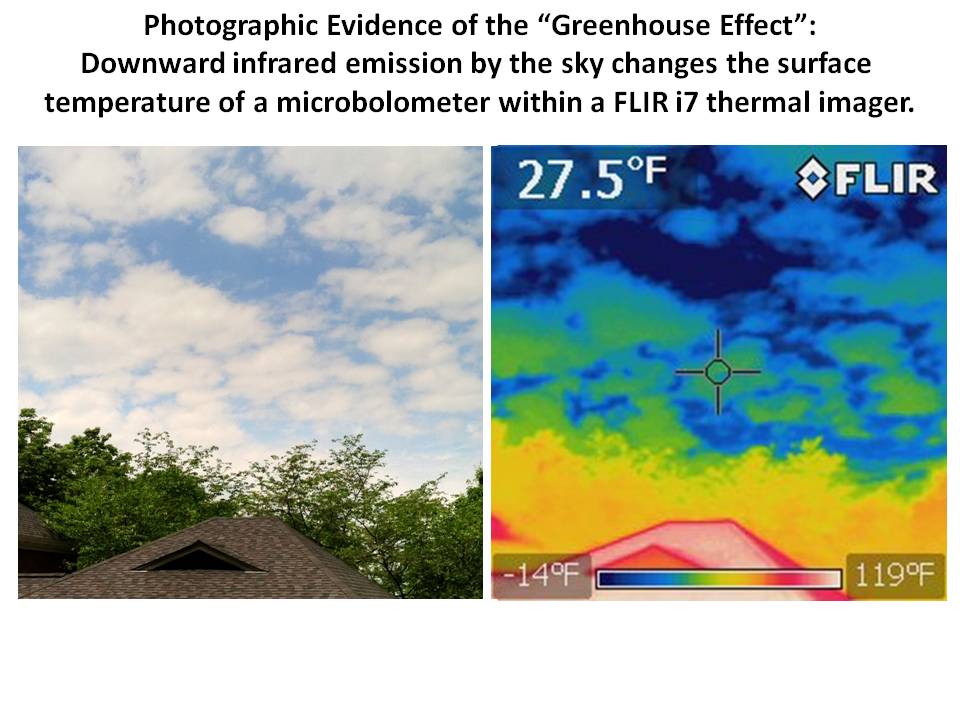

I have demonstrated before how you can directly measure the greenhouse effect with a handheld IR thermometer pointed at the sky. I say “directly measure” because an IR thermometer pointed at the sky measures the temperature change of a thermistor exposed to varying levels of IR radiation, just as the temperature of the Earth’s surface changes in response to varying levels of downwelling IR radiation.

I recently purchased a FLIR i7 thermal imager which, instead of measuring just one average temperature, uses a microbolometer sensor array (140 x 140 pixels) to make 19,600 temperature readings in an image format. These are amazing little devices, originally developed for military applications such as night vision, and they are very sensitive to small temperature differences, around 0.1 deg. C.

Because these handheld devices are meant to measure the temperature of objects, they are tuned to IR wavelengths where atmospheric absorption/emission is minimized. The FLIR i7 is sensitive to the broadband IR emission from about 7.5 to 13 microns. While the atmosphere in this spectral region is relatively transparent, there is still some absorption from water vapor, CO2, oxygen, and ozone. The amount of atmospheric emission will be negligible when viewing objects only a few feet away, but it is not negligible when the imager is pointed up at miles of atmosphere.

Everything around us has constantly changing temperatures in response to various mechanisms of energy gain and loss, things that are normally invisible to us, and these thermal imagers give us eyes to view this unseen world. I’ve spent a few days getting used to the i7, which has a very intuitive user interface. I’ve already used it to identify various features in the walls of my house; see which of my circuit breakers are carrying heavy loads; find a water leak in my wife’s car interior; and see how rain water flows on my too-flat back patio.

The above pair of images shows how clouds and clear sky appear. While the FLIR i7 is designed not to register temperatures below -40 deg. F/C (and is only calibrated to -4 deg. F), you can see that sky brightness temperatures well above this are measured.
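For readers who think in Celsius or Kelvin, a small sketch of the conversions behind those two limits. The helper names are mine, purely for illustration; it also shows why -40 can be written as "F/C".

```python
def fahrenheit_to_celsius(temp_f):
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def celsius_to_kelvin(temp_c):
    """Convert degrees Celsius to kelvins."""
    return temp_c + 273.15


# The imager's lower display limit and its lower calibration limit
for temp_f in (-40.0, -4.0):
    temp_c = fahrenheit_to_celsius(temp_f)
    temp_k = celsius_to_kelvin(temp_c)
    print(f"{temp_f:6.1f} deg. F = {temp_c:6.1f} deg. C = {temp_k:6.2f} K")
```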

This is direct evidence of the greenhouse effect: the surface temperature of the microbolometer within the thermal imager is being affected by downwelling IR radiation from the sky and clouds, which is exactly what the greenhouse effect is. If there were no downward emission, that is, if the atmosphere were totally transparent to IR radiation, a perfectly designed thermal imager pointed at the sky would measure only the cosmic background radiation and read close to absolute zero (-460 deg. F).
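To give a feel for the size of that contrast, here is a hedged sketch that integrates the Planck function over the imager's 7.5 to 13 micron band for a few blackbody temperatures. The blackbody assumption, the simple trapezoid integration, and the choice of example temperatures (2.725 K for the cosmic background, 233.15 K for the -40 deg. display limit, 288 K as a typical surface value) are mine. It does not model the i7's actual sensor response.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance in W m^-2 sr^-1 per metre of wavelength."""
    x = H * C / (wavelength_m * K_B * temp_k)
    if x > 700.0:
        # exp(x) would overflow a float; the radiance is effectively zero
        return 0.0
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def band_radiance(temp_k, lo_um=7.5, hi_um=13.0, steps=500):
    """In-band blackbody radiance (W m^-2 sr^-1) by trapezoid integration."""
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    dl = (hi - lo) / steps
    total = 0.5 * (planck_radiance(lo, temp_k) + planck_radiance(hi, temp_k))
    for i in range(1, steps):
        total += planck_radiance(lo + i * dl, temp_k)
    return total * dl


for label, temp_k in [("cosmic background", 2.725),
                      ("-40 deg. sky", 233.15),
                      ("typical surface", 288.0)]:
    print(f"{label:18s} {temp_k:7.3f} K -> {band_radiance(temp_k):.3e} W/m^2/sr")
```

The in-band radiance from a 2.725 K source is so many orders of magnitude below that of a sky at -40 deg. that an idealized imager would see essentially nothing, which is the point of the thought experiment above.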

Just so there is no confusion: I am not talking about why the indicated temperatures are what they are…I am talking about the fact that the surface temperature of the microbolometer is being changed by IR emission from the sky. THAT IS the greenhouse effect.
