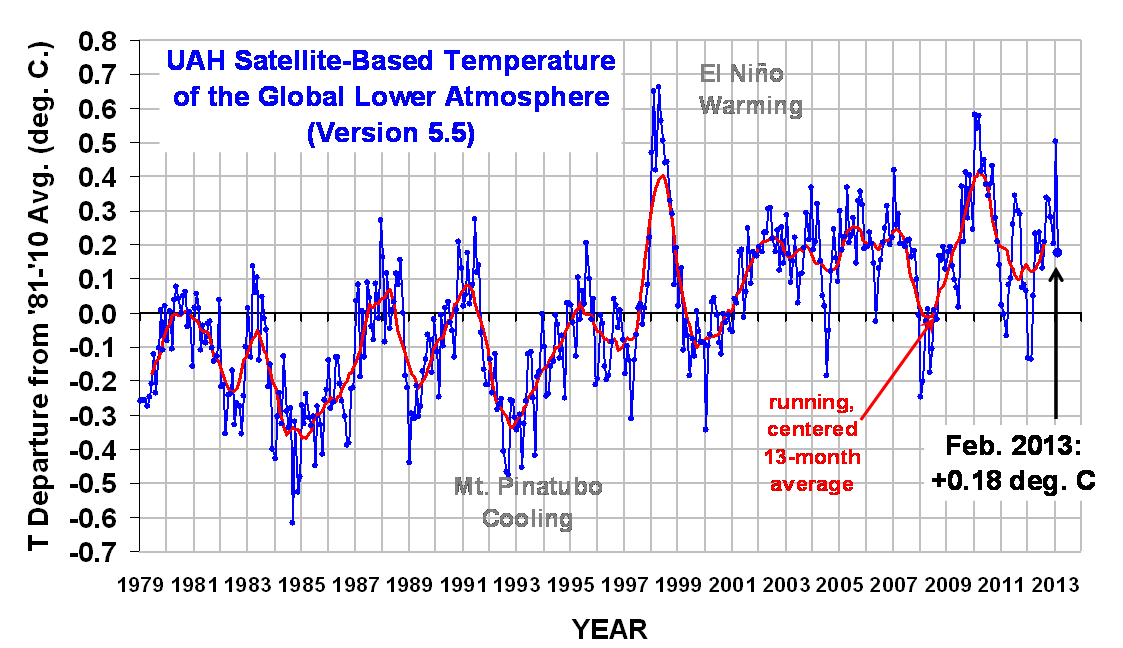

Following up on yesterday’s post, I’d like to address the more general question of tropical sea surface temperatures since 1998. Why haven’t they warmed? Of course, much has been made by some people about the fact that even global average temperatures have not warmed significantly since the 1997/98 El Nino event.

Using the Tropical Rainfall Measuring Mission (TRMM) Microwave Imager (TMI) SSTs available from Remote Sensing Systems (all 15 GB worth), here I will statistically adjust tropical SSTs for El Nino and La Nina activity, and see how the resulting trend since 1998 compares to the latest crop of IPCC CMIP5 model runs. We will restrict the analysis to the 20°N to 20°S latitude band, which is the usual latitudinal definition of “tropical”.

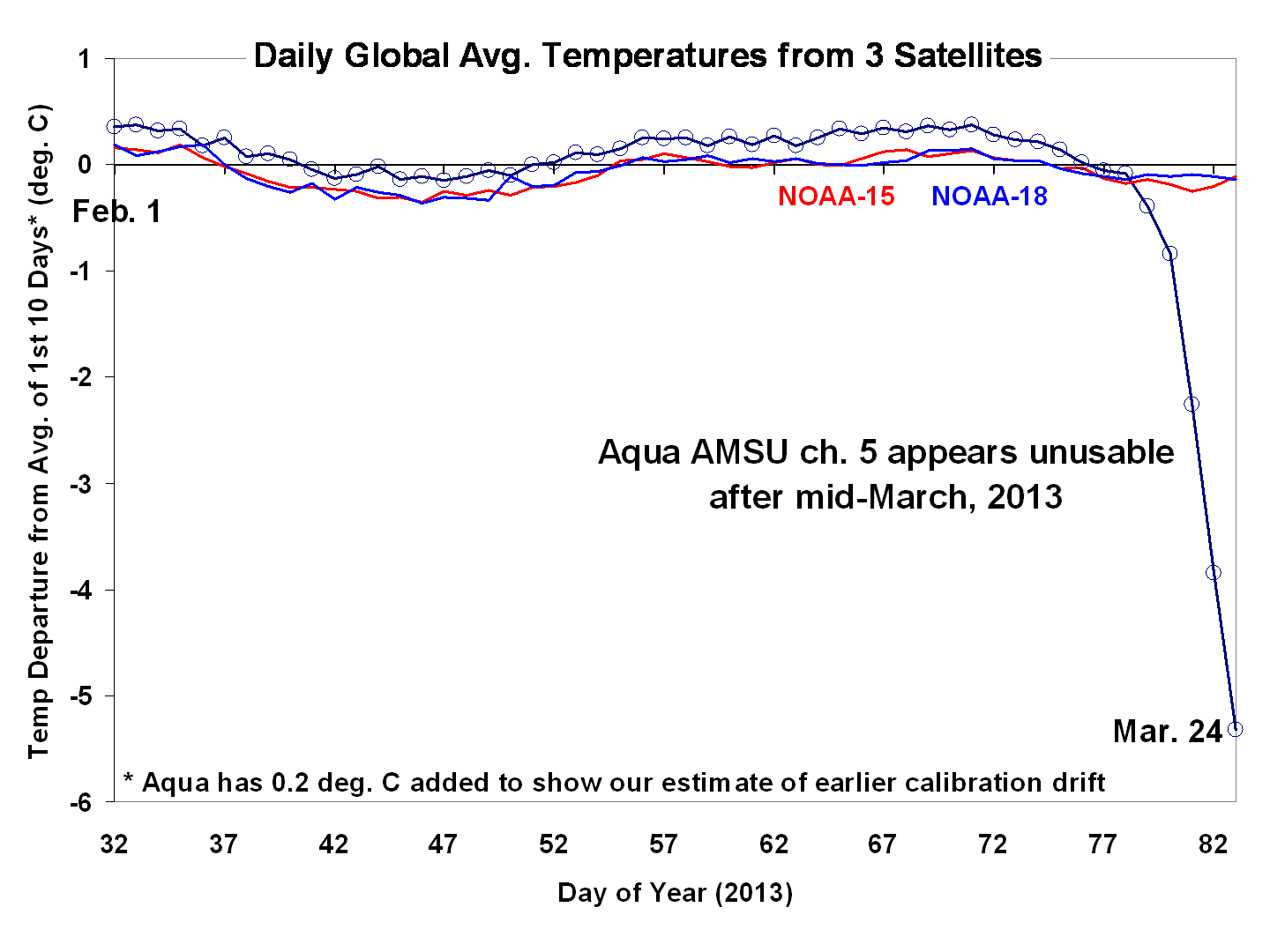

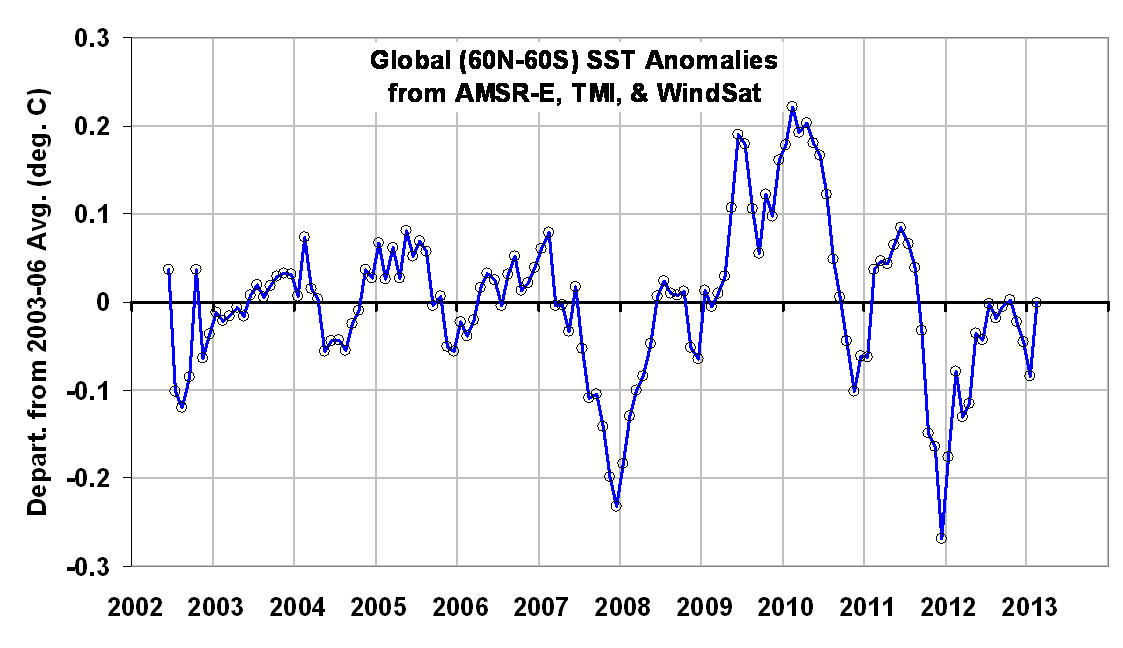

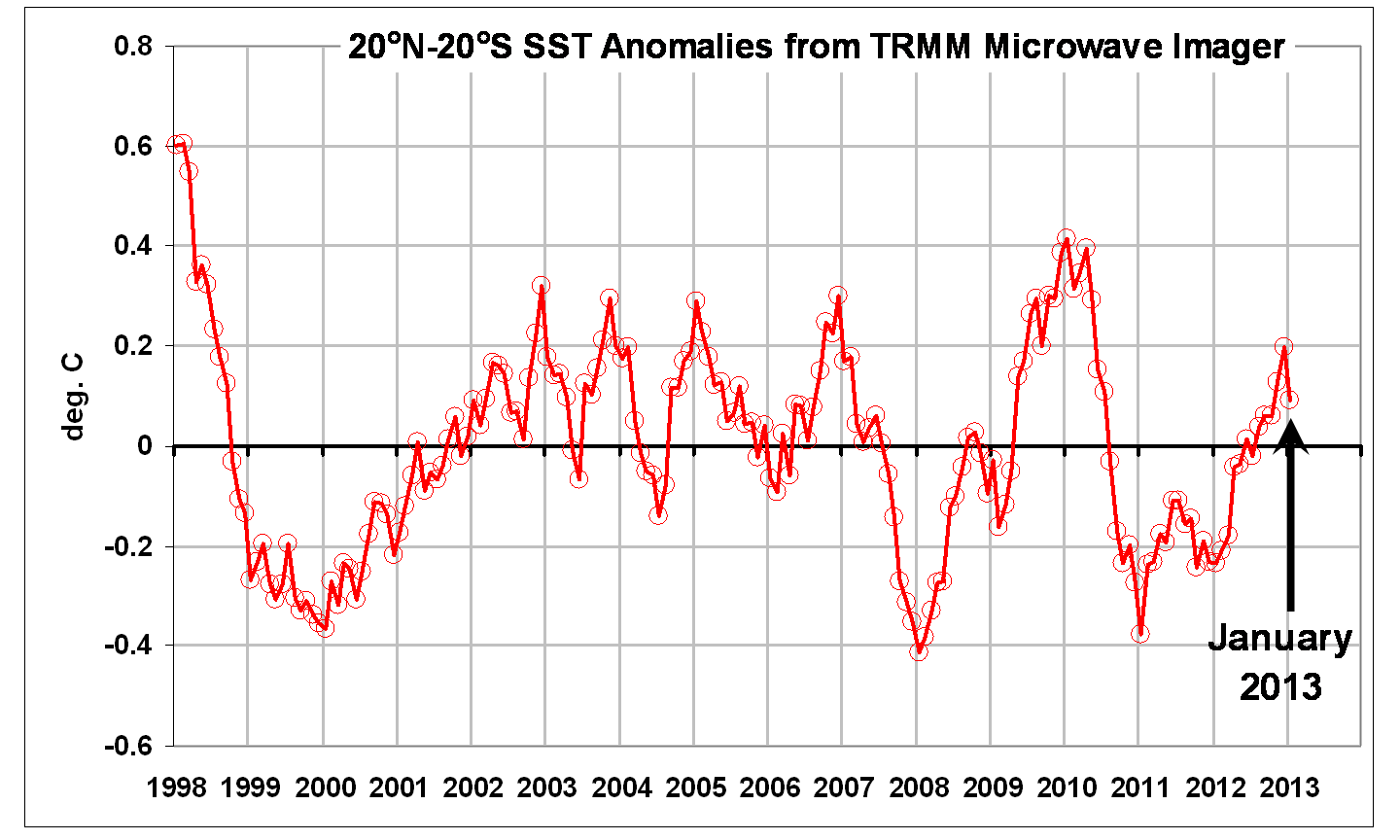

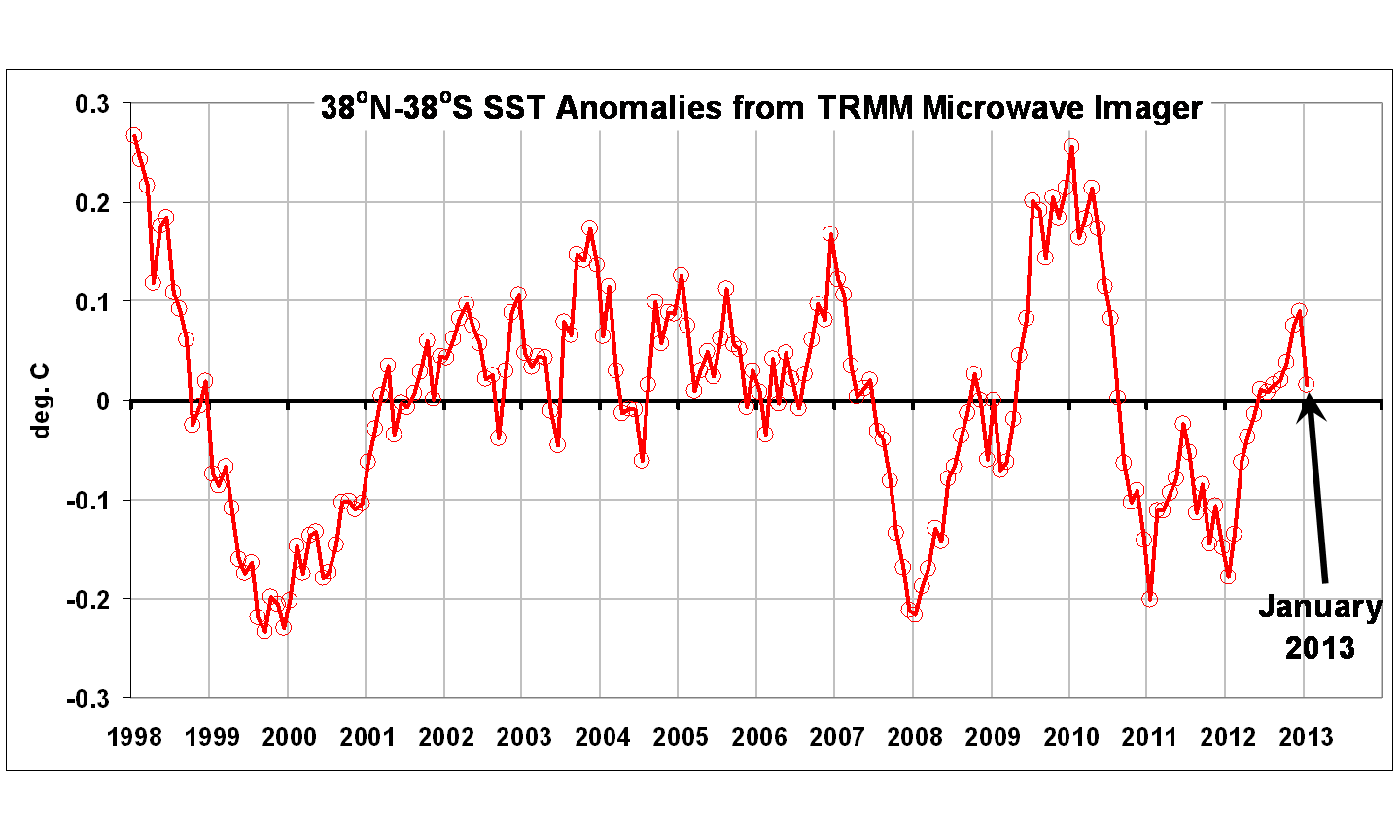

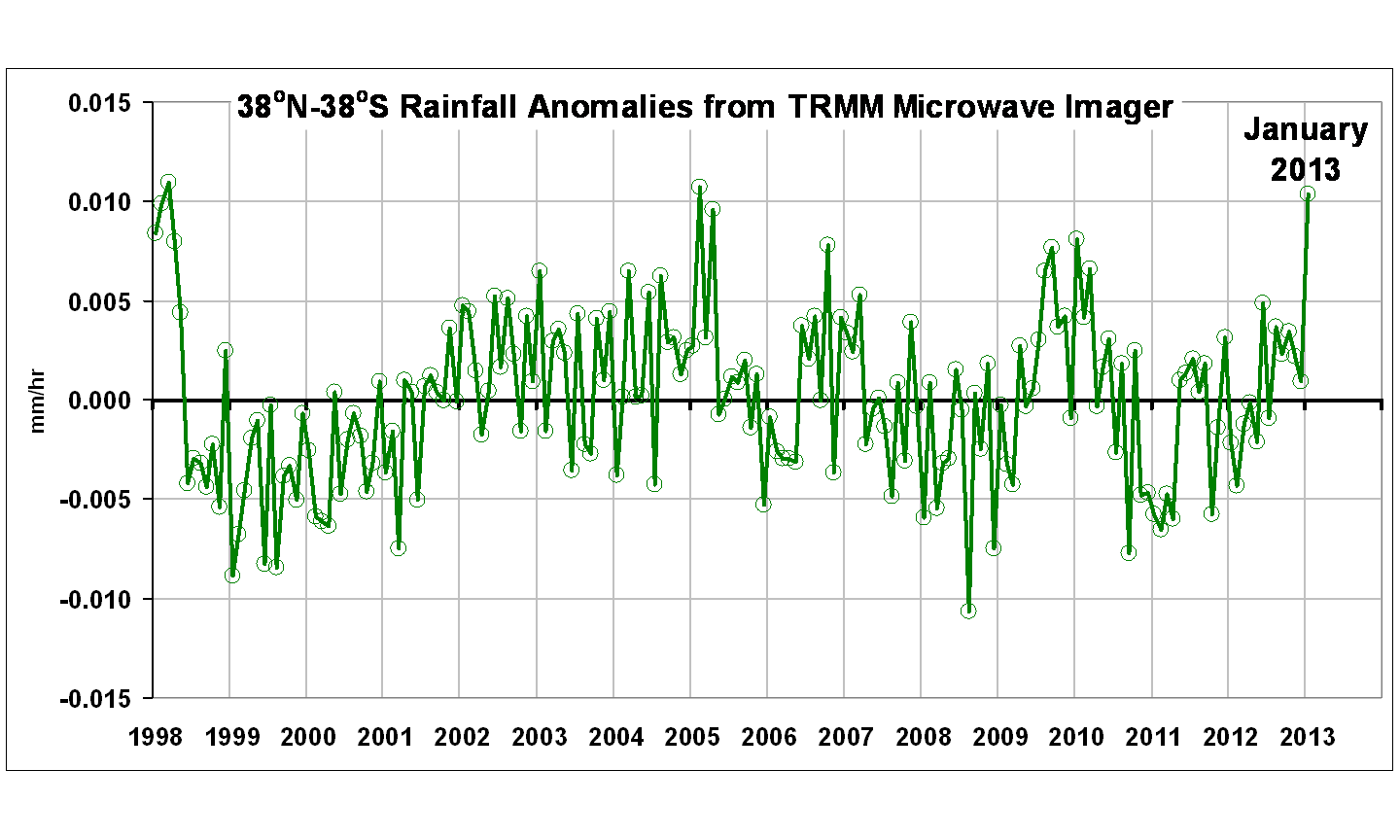

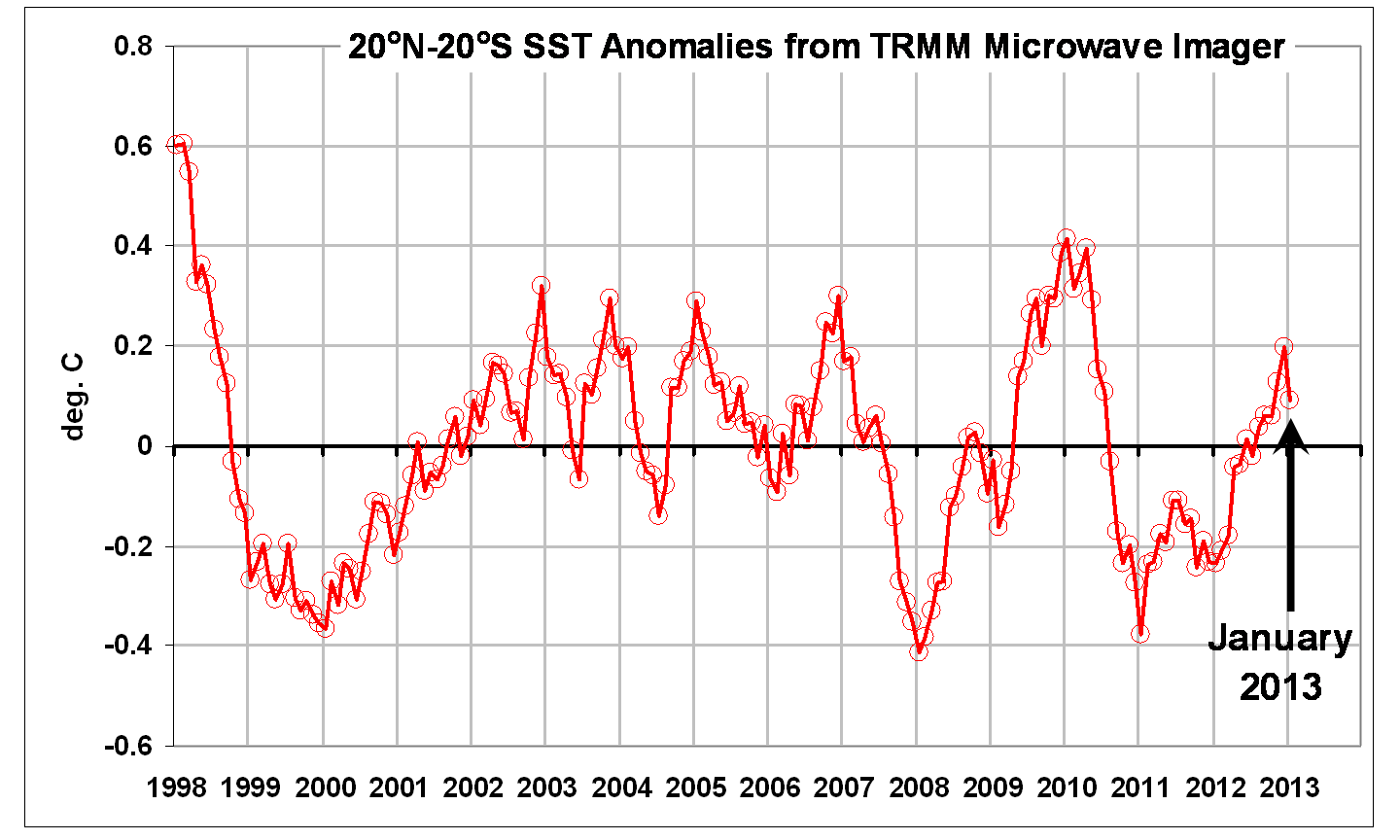

The resulting TRMM TMI SST anomalies since January 1998 through last month look like this:

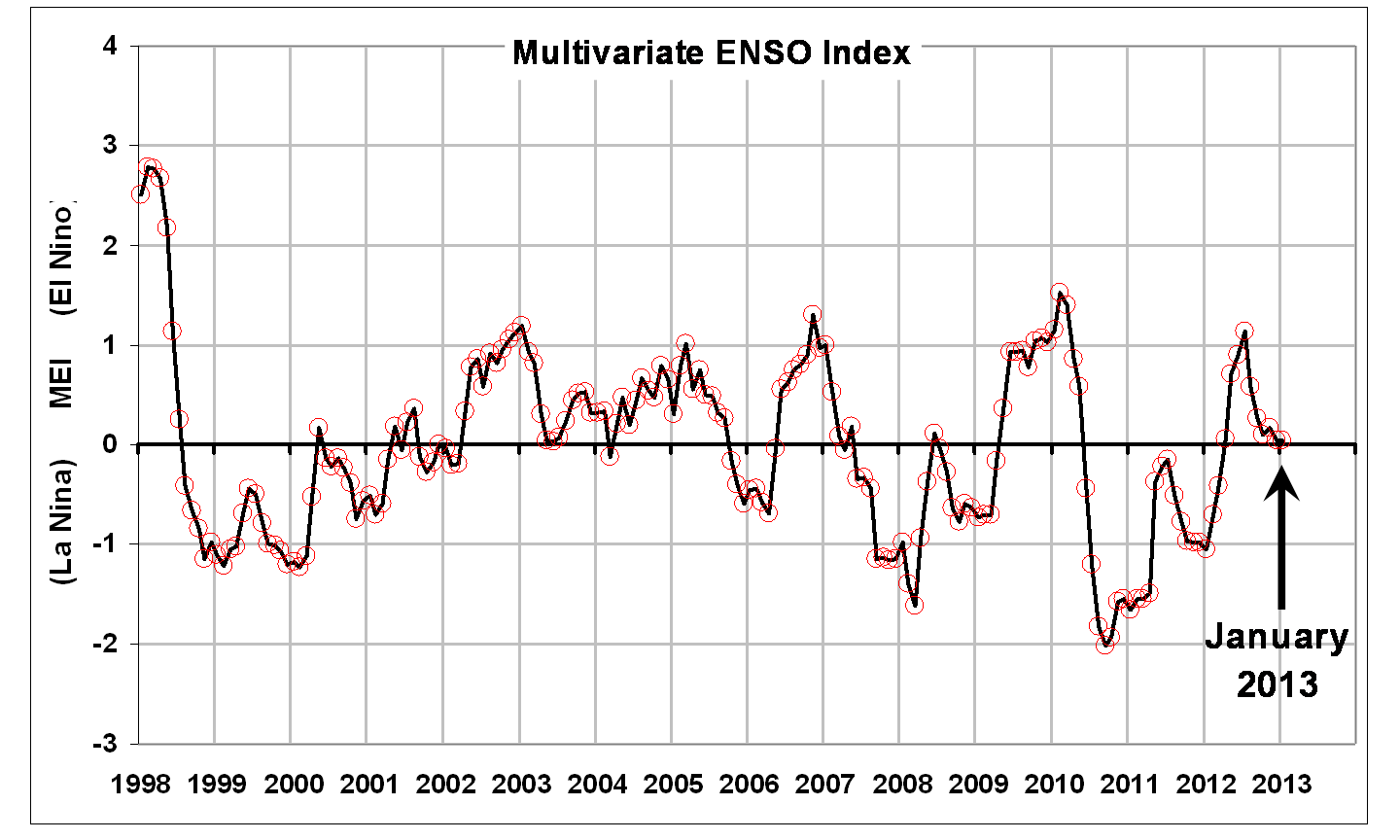

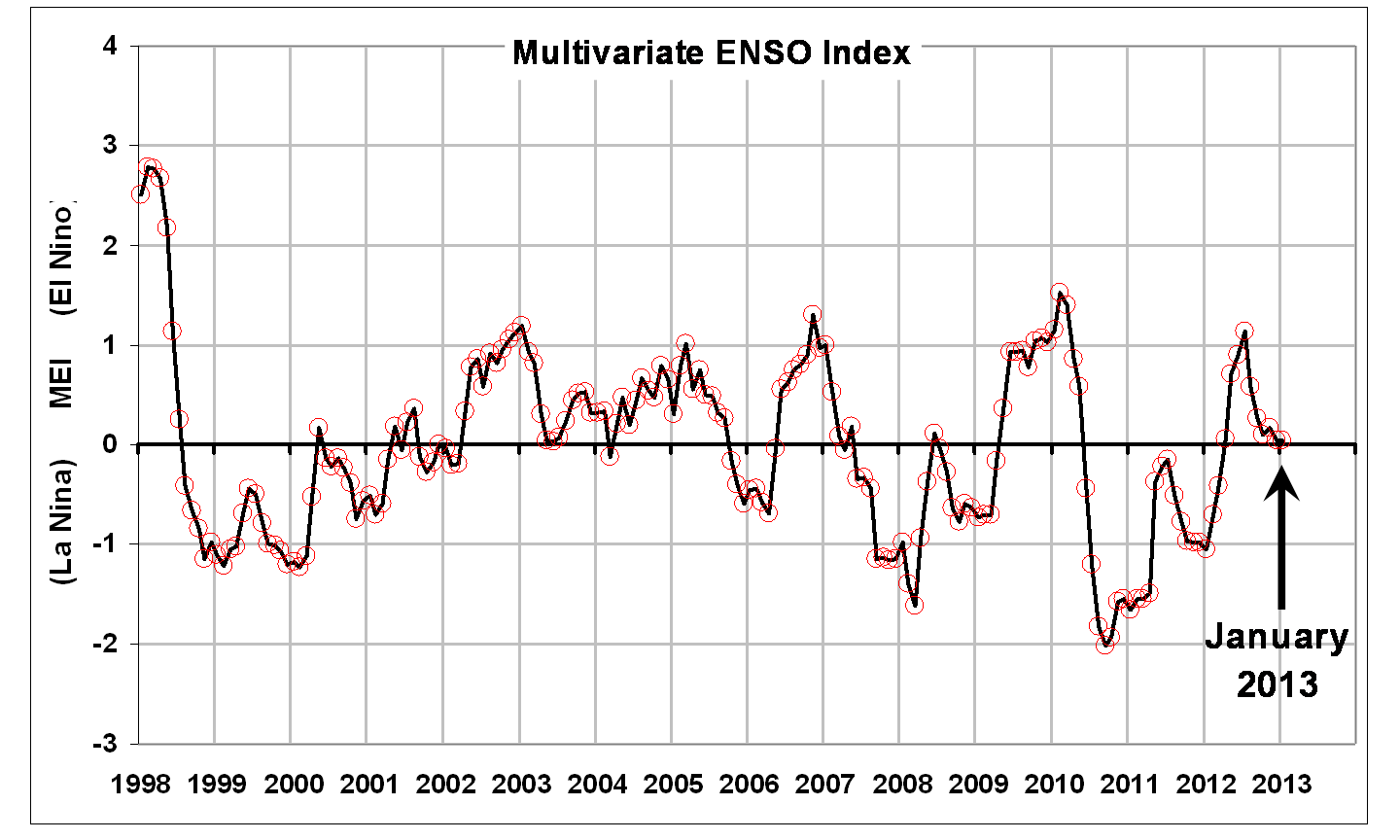

The up and down variations are clearly related to El Nino and La Nina activity, as evidenced by this plot of the Multivariate ENSO Index (MEI):

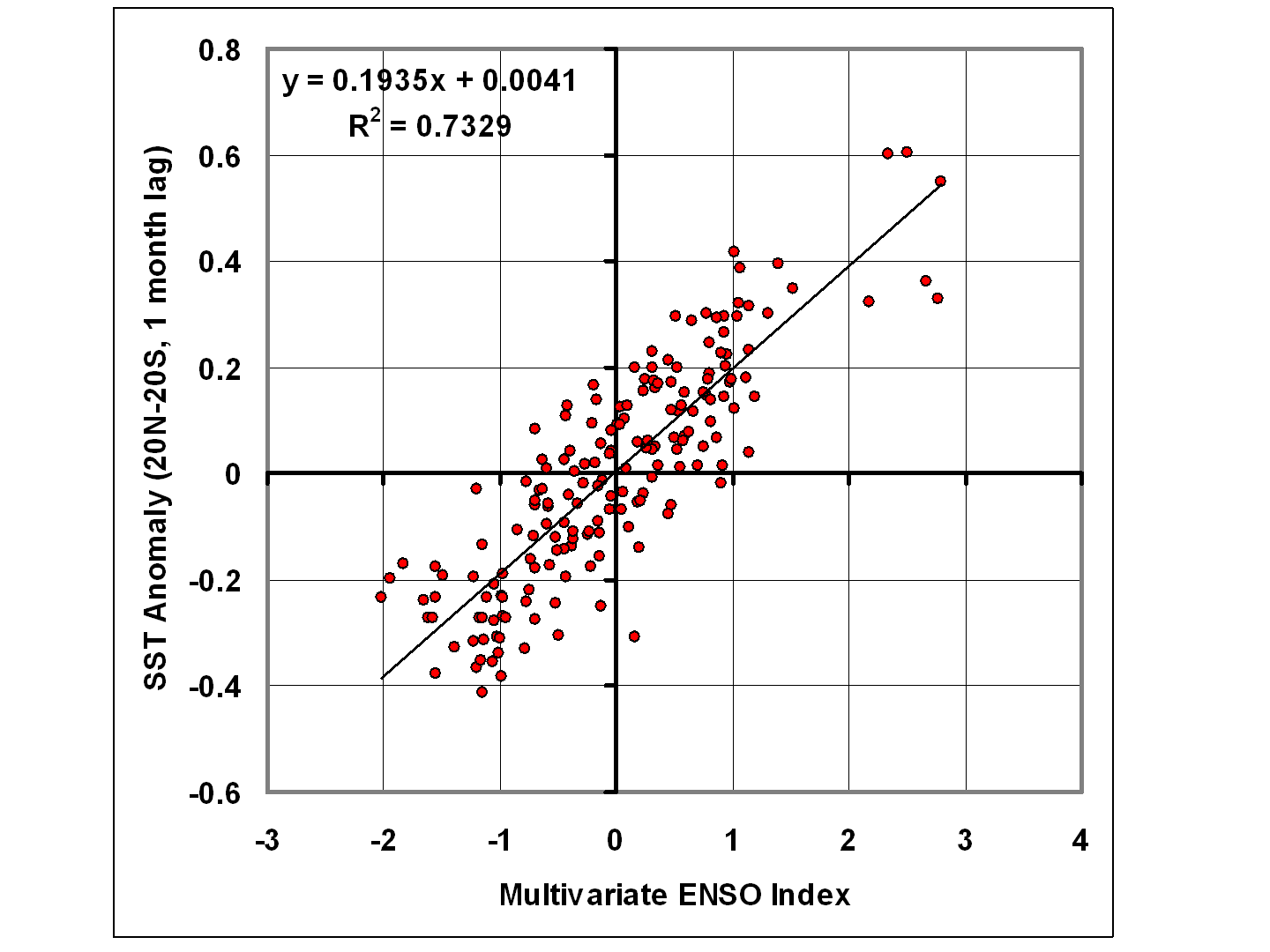

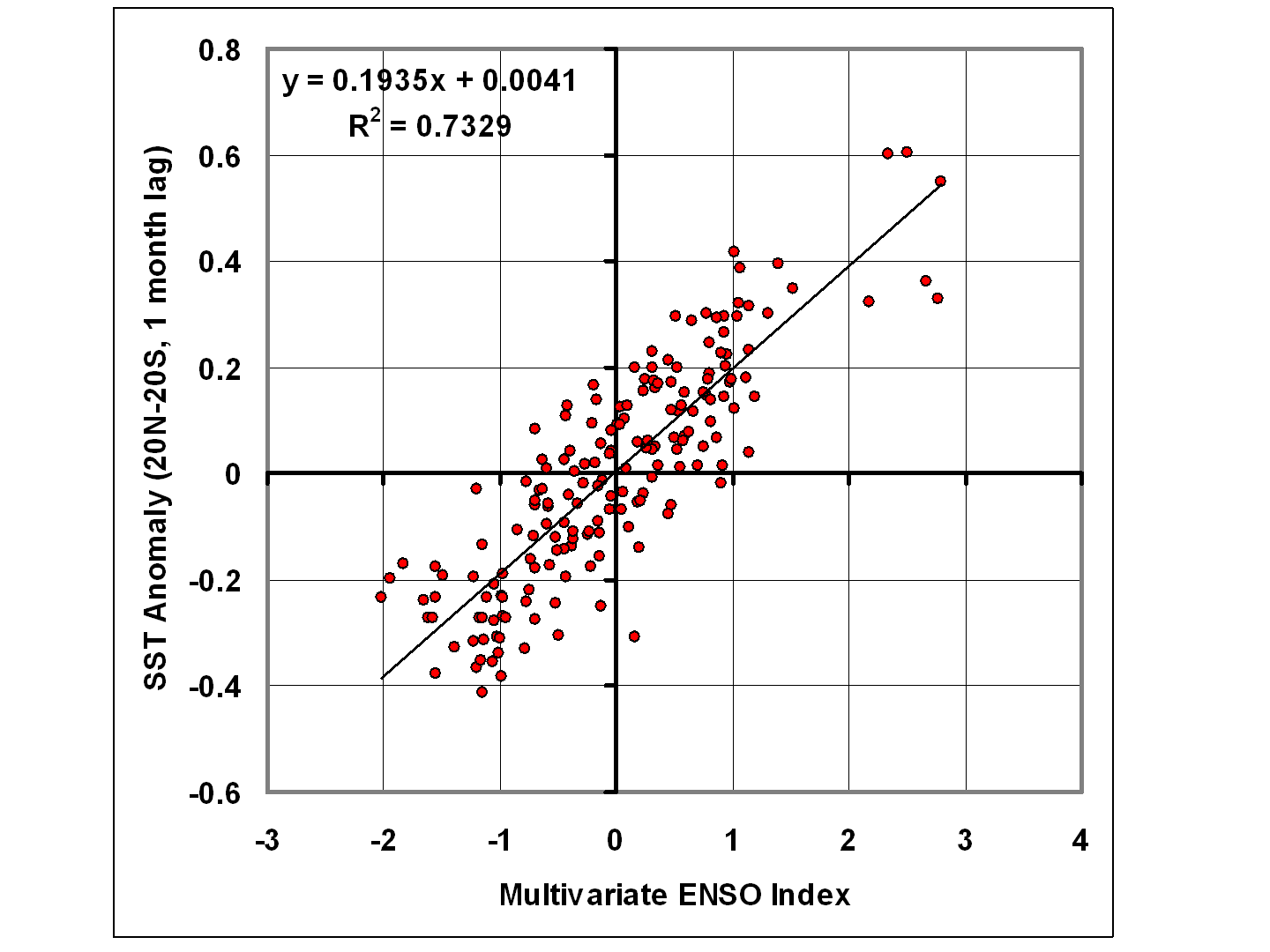

We can then plot these SST and MEI data against each other…

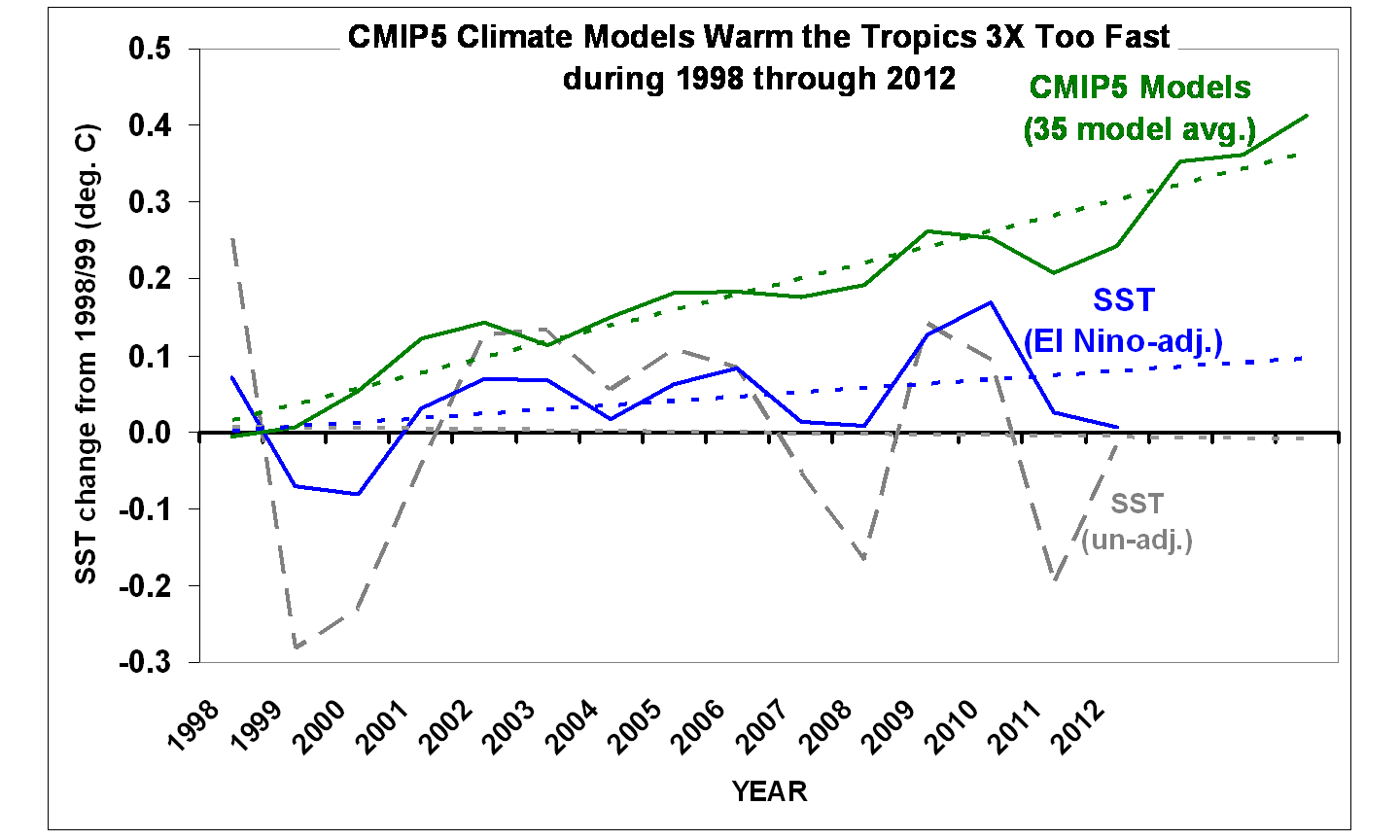

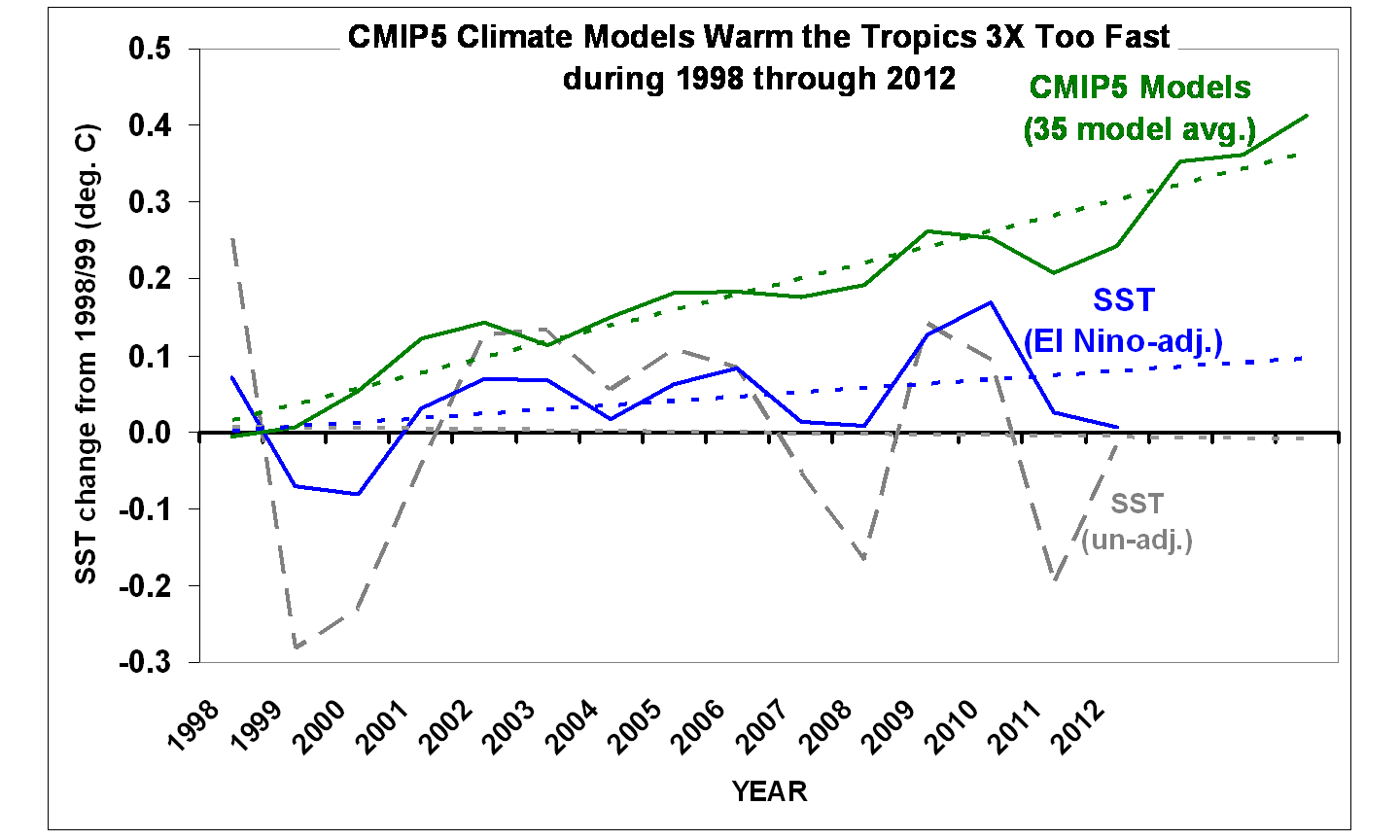

…and use this statistical relationship to estimate SST from MEI, and then subtract that from the original SST data to get an estimate (however crude) of how the SSTs might have behaved without the presence of El Nino and La Nina activity (the blue line):

Note that I have now averaged the monthly data to yearly, and this last plot also shows the average SST from 35 CMIP5 climate models during 1998-2012 for the same (tropical) latitude band, courtesy of John Christy and the KNMI climate explorer website. Also note I have plotted all three time series as departures from their respective 1998/99 2-year average.
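For readers who want to try the adjustment themselves, here is a minimal Python sketch of the steps described above: regress monthly SST anomalies on the MEI, subtract the fitted values, average to yearly, and express the result as departures from the first two years. It is an illustration only, not the code used for the plots. The function names are hypothetical, the data are synthetic, and a simple same-month (no lag) linear fit is an assumption.

```python
import numpy as np

def remove_enso(sst, mei):
    """Subtract the SST estimated from MEI (simple linear fit, no lag assumed)."""
    sst = np.asarray(sst, dtype=float)
    mei = np.asarray(mei, dtype=float)
    slope, intercept = np.polyfit(mei, sst, 1)   # SST ~ slope * MEI + intercept
    estimated = slope * mei + intercept
    return sst - estimated, slope

def annual_means(monthly):
    """Average complete 12-month blocks; trailing partial years are dropped."""
    monthly = np.asarray(monthly, dtype=float)
    n_years = monthly.size // 12
    return monthly[: n_years * 12].reshape(n_years, 12).mean(axis=1)

def rebaseline(annual, n_base=2):
    """Departures from the mean of the first n_base years (1998/99 in the post)."""
    annual = np.asarray(annual, dtype=float)
    return annual - annual[:n_base].mean()

# Synthetic stand-ins for 15 years of monthly data (NOT the TMI or MEI records).
rng = np.random.default_rng(0)
months = np.arange(180)
mei = np.sin(2 * np.pi * months / 45.0)                  # fake ENSO-like index
sst = 0.1 * mei + 0.0005 * months + rng.normal(0, 0.02, months.size)

adjusted, slope = remove_enso(sst, mei)
adj_annual = rebaseline(annual_means(adjusted))
```

Because the fitted values are subtracted in full, the adjusted monthly series has zero mean and is uncorrelated with the index by construction; the re-baselining step then sets the common reference period.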

The decadal linear temperature trends are:

un-adj. SST: -0.010 C/decade

MEI-adj. SST: +0.056 C/decade

CMIP5 SST: +0.172 C/decade
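A decadal linear trend like those listed above is typically an ordinary least-squares slope scaled to ten years. The sketch below shows that calculation on a made-up yearly series; the function name is hypothetical, and whether the trends above were fitted to yearly or monthly values is not stated here, so treat the yearly fit as an assumption.

```python
import numpy as np

def decadal_trend(years, values):
    """Least-squares slope of values vs. years, expressed per decade."""
    years = np.asarray(years, dtype=float)
    values = np.asarray(values, dtype=float)
    centered = years - years.mean()          # centering keeps the fit well conditioned
    slope_per_year = np.polyfit(centered, values, 1)[0]
    return 10.0 * slope_per_year

# Made-up series that warms at exactly 0.0172 C per year (illustration only).
years = np.arange(1998, 2013)
synthetic = 0.0172 * (years - 1998)
trend = decadal_trend(years, synthetic)
print(f"{trend:+.3f} C/decade")
```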

So, even after adjusting for El Nino and La Nina activity, the last 15 years in the tropics have seen (adjusted) warming at only 1/3 the rate which the CMIP5 models create when they are forced with anthropogenic greenhouse gases.
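The "1/3" figure is just the ratio of the two trends quoted above, which a couple of lines of Python can confirm (the dictionary is only a convenient container for the numbers already listed):

```python
# Decadal trends as quoted in the post, in C/decade.
trends = {"un-adj. SST": -0.010, "MEI-adj. SST": 0.056, "CMIP5 SST": 0.172}

ratio = trends["MEI-adj. SST"] / trends["CMIP5 SST"]
print(f"adjusted / model trend = {ratio:.2f}")
```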

Now, one might object that you really can’t adjust SSTs by subtracting out an ENSO component. OK, then, don’t adjust them. Since the observed SST warming without adjustments is essentially zero, then the models warm infinitely faster than the observations. There. 😉

Why Have the Models Warmed Too Fast?

My personal opinion is that the models have cloud feedbacks (and maybe other feedbacks) wrong, and that the real climate system is simply not as sensitive to increasing CO2 as the modelers have programmed the models to be.

But there are other possibilities, all theoretical:

1) Ocean mixing: a recent increase in ocean vertical mixing would cause the surface to warm more slowly than expected, and the cold, deep ocean to very slowly warm. But it is debatable whether the ARGO float deep-ocean temperature data are sufficiently accurate to monitor deep ocean warming to the levels we are talking about (hundredths of a degree).

2) Increasing atmospheric aerosols: This has been the modelers’ traditional favorite fudge factor to keep climate models from warming at an unrealistic rate…a manmade aerosol cooling effect “must be” cancelling out the manmade CO2 warming effect. Possible? I suppose. But blaming a LACK of warming on humans seems a little bizarre. The simpler explanation is feedbacks: the climate system simply doesn’t care that much if we put aerosols *OR* CO2 in the atmosphere.

3) Increasing CO2 doesn’t cause a radiative warming influence (radiative forcing) of the surface and lower atmosphere.

I’m only including that last one because, in science, just about anything is possible. But my current opinion is that the science on radiative forcing by increasing CO2 is pretty sound. The big uncertainty is how the system responds (feedbacks).
