…and Why Dessler, Trenberth, and the IPCC are Wrong

After the resignation of the Editor-in-Chief at Remote Sensing over the publication of our paper in that journal, I thought it would be good to summarize as simply as I can what the controversy is all about. [I am also including Trenberth in this discussion because there is a misperception that the paper by Trenberth et al. (2010), which only dealt with the tropics, was ignored in our analysis. Believe it or not, it’s quite common to ignore previous papers that are not relevant to your own paper. 🙂 Also, Trenberth sat next to me during congressional testimony where he confidently asserted (as I recall) “clouds don’t cause climate change”.]

Are Clouds Capable of Causing Temperature Changes?

At the heart of this debate is whether cloud changes, through their ability to alter how much sunlight is allowed in to warm the Earth, can cause temperature change.

We claim they can, and have demonstrated it with phase space plots of observed temperature versus Earth radiative budget variations here, with lag-regression plots of the same data here, and with a forcing-feedback model of the average climate system in both of those publications. (The model we used was suggested to us by Isaac Held, Princeton-GFDL, who is hardly a global warming “skeptic”.)

The Dessler and Trenberth contrary view – as near as I can tell – is that clouds cannot cause temperature change, unless those cloud changes were themselves caused by some previous temperature change. In other words, they believe cloud changes can always be traced to some prior temperature change. This temperature-forcing-clouds direction of causation is “cloud feedback”.

Put more simply, Dessler and Trenberth believe causation between temperature and clouds only flows in one direction:

Temperature Change => Cloud Change,

whereas we and others believe (and have demonstrated) it flows in both directions,

Temperature Change <=> Cloud Change.

Why is this Important?

Because it affects our ability to find the Holy Grail of climate research: cloud feedback. Even the IPCC admits the biggest uncertainty in how much human-caused climate change we will see is the degree to which cloud feedback [temperature change => cloud change] will magnify (or reduce) the weak direct warming tendency from more CO2 in the atmosphere.

The IPCC claim is that clouds will change in response to warming in ways which magnify that warming (positive cloud feedback), but by an unknown amount. All of the 20+ climate models tracked by the IPCC exhibit cloud feedbacks ranging from weakly to strongly positive.

But we claim (and have demonstrated) that causation in the opposite direction [cloud change => temperature change] gives the illusion of positive cloud feedback, even if negative cloud feedback really exists. Thus, any attempt to estimate feedback in the real climate system must also address this source of “contamination” of the feedback signal.

It would be difficult for me to overstate the importance of this issue to global warming theory. Sufficiently positive cloud feedback could cause a global warming Armageddon. Sufficiently negative cloud feedback could more than cancel out any other positive feedbacks in the climate system, and relegate manmade global warming to the realm of just an academic curiosity.

So, How Can We Know the Difference in these Two Directions of Causation?

There is one big difference between clouds-causing-temperature change (our view of what happens), and temperature-causing-cloud change (which is cloud feedback).

Cloud feedback happens rapidly, in a matter of days to a few weeks at the very most, due to the rapidity with which the atmosphere adjusts to a surface temperature change. In this paper, we even showed evidence that the peak net radiative feedback (from clouds + temperature + water vapor) occurs within a couple of days of peak temperature.

I have more extensive evidence now that the lag is closer to zero days.

In contrast, causation in the opposite direction (clouds forcing temperature change) involves a time lag of many months, due to the time it takes for the immense thermal inertia of the ocean to allow a temperature response to a change in absorbed sunlight.
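As a rough illustration of why that response is slow, a well-mixed ocean layer can be treated as a single box whose e-folding response time is its heat capacity per unit area divided by the net feedback parameter. The sketch below is not taken from any of the papers discussed here; the function name, the layer depths, the seawater properties, and the feedback value of 3 W m^-2 K^-1 are all assumed, illustrative numbers.

```python
# One-box estimate of how long an ocean mixed layer takes to respond
# to a change in absorbed sunlight. All constants are assumptions.
SEAWATER_DENSITY = 1025.0         # kg m^-3 (assumed typical value)
SEAWATER_SPECIFIC_HEAT = 3990.0   # J kg^-1 K^-1 (assumed typical value)
SECONDS_PER_MONTH = 86400.0 * 365.25 / 12.0


def response_time_months(depth_m, feedback_w_m2_k):
    """E-folding time (months) of a well-mixed layer of the given depth.

    depth_m         -- assumed mixed-layer depth in metres
    feedback_w_m2_k -- assumed net feedback parameter in W m^-2 K^-1
    """
    heat_capacity = SEAWATER_DENSITY * SEAWATER_SPECIFIC_HEAT * depth_m  # J m^-2 K^-1
    return heat_capacity / feedback_w_m2_k / SECONDS_PER_MONTH


if __name__ == "__main__":
    for depth in (25.0, 50.0):  # hypothetical mixed-layer depths
        months = response_time_months(depth, 3.0)
        print(f"{depth:.0f} m layer: about {months:.0f} months")
```

With these assumed numbers the time scale comes out at roughly one to two years, compared with the days-to-weeks adjustment time of the atmosphere. A deeper layer or a smaller feedback parameter lengthens it further.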

As mentioned above, the large time lag involved in clouds-causing-temperature change can be demonstrated with either lag regression, or phase space plots of the data. There is no other explanation for the behavior shown in our published results.

We even see this behavior in the IPCC climate models themselves…every one of them.

But Why Does it Even Matter Which Direction the Causation Takes?

What we have shown repeatedly is that if clouds-forcing-temperature is present, it will always decorrelate the data (because of the inherent time lag involved between a cloud change and the ocean temperature response), which then confounds the estimation of feedback in statistical comparisons of the two kinds of data.
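The effect can be sketched with a toy one-box forcing-feedback model. This is a minimal illustration, not the configuration used in the papers: the equation form C dT/dt = N + S - lam*T, the 25 m mixed layer, the 30-day red-noise forcings, the value lam = 3 W m^-2 K^-1, and all function names are assumptions made for the example. Here N is a radiative (cloud) forcing, S is a non-radiative forcing, and R = lam*T - N stands in for the net radiative loss a satellite would measure. A regression slope of R on T below lam would be read as a more positive feedback than the one actually specified.

```python
import numpy as np

SECONDS_PER_DAY = 86400.0


def ar1_noise(n, timescale_days, rng):
    """Unit-variance red noise (AR(1)) with the given e-folding time in days."""
    phi = np.exp(-1.0 / timescale_days)
    white = rng.standard_normal(n) * np.sqrt(1.0 - phi**2)
    x = np.empty(n)
    x[0] = rng.standard_normal()
    for i in range(1, n):
        x[i] = phi * x[i - 1] + white[i]
    return x


def simulate(n_days, lam, radiative_amp, nonradiative_amp,
             seed=0, depth_m=25.0, spinup_days=2000):
    """Integrate the assumed one-box model with daily Euler steps.

    Returns temperature anomaly T (K) and net radiative loss R (W m^-2),
    with the first `spinup_days` discarded.
    """
    rng = np.random.default_rng(seed)
    heat_capacity = 1025.0 * 3990.0 * depth_m          # J m^-2 K^-1 (assumed)
    N = radiative_amp * ar1_noise(n_days, 30.0, rng)     # radiative forcing
    S = nonradiative_amp * ar1_noise(n_days, 30.0, rng)  # non-radiative forcing
    T = np.zeros(n_days)
    step = SECONDS_PER_DAY / heat_capacity
    for i in range(n_days - 1):
        T[i + 1] = T[i] + step * (N[i] + S[i] - lam * T[i])
    R = lam * T - N  # feedback response minus the radiative forcing itself
    return T[spinup_days:], R[spinup_days:]


def slope_and_corr(T, R):
    """Zero-lag regression slope of R on T, and their correlation."""
    slope = np.polyfit(T, R, 1)[0]
    corr = np.corrcoef(T, R)[0, 1]
    return slope, corr


if __name__ == "__main__":
    lam = 3.0  # specified feedback parameter (illustrative)
    for label, rad in (("non-radiative forcing only", 0.0),
                       ("radiative + non-radiative", 1.0)):
        T, R = simulate(73000, lam, rad, 1.0, seed=1)
        slope, corr = slope_and_corr(T, R)
        print(f"{label}: slope={slope:.2f} (specified {lam}), r={corr:.2f}")
```

In this toy setup, the run with only non-radiative forcing returns the specified feedback parameter with a correlation near 1, because R is then exactly lam*T. Adding the radiative forcing lowers both the correlation and the diagnosed slope, even though lam itself was never changed.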

The existence of very low statistical correlation coefficients in all of the previous studies attempting to diagnose feedback in the traditional manner is, by itself, evidence of this effect. For example, the data Dessler analyzed had a correlation coefficient of about 0.1 (as far as I can tell, anyway…for some reason he chose not to list this very basic statistic in his paper. Why did the peer reviewers not catch such an obvious omission?).

But Couldn’t the Cloud Changes Have Been Produced by Some Previous Temperature Change?

This is a question I hear repeatedly. I will address it in 2 ways.

First, I believe the simple answer is “no”, because temperature-causing-cloud changes (cloud feedback) occur very rapidly, with little time lag. This is because the atmosphere responds rapidly to a surface temperature change, in a matter of days to weeks at the most.

Secondly, just for the sake of argument, let’s assume our critics are right, and there IS a substantial time lag in the cloud feedback response to a temperature change. As I have challenged Dessler to do, if he really believes that is happening, then he should do LAGGED regression to estimate feedback…that is, adjust for the time lag in his regression analysis.

And when he does that, his weak positive cloud feedback diagnosis will suddenly turn into a negative feedback diagnosis. I’ve done it, and it is what Lindzen and Choi did in their recently published paper, which resulted in a diagnosis of strongly negative feedback.
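For readers unfamiliar with the mechanics, a lagged regression simply pairs each value of one series with the value of the other series some fixed number of steps later before fitting the slope. The sketch below shows only those mechanics on synthetic data. The function names, the built-in lag of 3 steps, and the slope of 2 are hypothetical choices for the example; in this context x would play the role of temperature and y the radiative flux, and nothing here says what the real satellite data show.

```python
import numpy as np


def lagged_slope(x, y, lag):
    """Slope of y regressed on x, with y taken `lag` samples after x.

    A negative lag pairs each x with an earlier y (y leads x).
    """
    if lag > 0:
        x_seg, y_seg = x[:-lag], y[lag:]
    elif lag < 0:
        x_seg, y_seg = x[-lag:], y[:lag]
    else:
        x_seg, y_seg = x, y
    return np.polyfit(x_seg, y_seg, 1)[0]


def make_demo_series(n=5000, true_slope=2.0, true_lag=3, seed=0):
    """Synthetic pair where y responds to x after `true_lag` steps (assumed)."""
    rng = np.random.default_rng(seed)
    white = rng.standard_normal(n + 4)
    # 5-point running mean gives x some persistence, like a climate series
    x = np.convolve(white, np.ones(5) / 5.0, mode="valid")  # length n
    y = 0.1 * rng.standard_normal(n)                        # measurement noise
    y[true_lag:] += true_slope * x[:-true_lag]              # delayed response
    return x, y


if __name__ == "__main__":
    x, y = make_demo_series()
    for lag in range(-2, 7):
        print(f"lag {lag:+d}: slope = {lagged_slope(x, y, lag):.2f}")
```

In this synthetic case the zero-lag slope understates the built-in relationship, and the slope recovers to about the built-in value only when the regression lag matches the lag used to generate the data. That is why the choice of lag matters when diagnosing a response that is not simultaneous.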

We will see when Dessler’s new paper appears, reportedly being published this coming week in GRL, whether he will include time lags in his analysis.

But What Else Could Cause Clouds to Change, Besides Temperature?

Any “expert” who asks such a naive question obviously has little training in meteorology. Unfortunately, this is indeed the case for many climate scientists.

Cloud formation is influenced by countless processes…the presence of cloud condensation nuclei, the temperature lapse rate and temperature inversions, wind shear, the presence of fronts, changes in ocean upwelling, to name a few.

The climate system is a non-linear dynamical system, and it is constantly changing. Chaos is not just a short term phenomenon affecting weather. I think that long-time scale quasi-chaotic changes in ocean circulation, like those associated with the Pacific Decadal Oscillation, are capable of causing climate change. The great climate shift of 1977 is evidence of that.

Even the IPCC and the climate modelers know that the huge reflective regions of marine stratocumulus over the eastern ocean basins have a dramatic effect on climate, and so any changes in upwelling of cool water in these regions can then indirectly cause global warming or cooling.

Of course, there is also the Svensmark et al. theory of cosmic rays indirectly forcing cloud cover, and I suspect there are effects on cloud formation we have not even discovered yet.

Just because we do not understand these things well enough to put them in a climate model does not mean they don’t exist.

What it All Means

This cloud issue has become very contentious because, if we (or those working on the cosmic ray effect on clouds) are correct, it means Mother Nature is perfectly capable of causing her own climate change.

And this possibility cannot be permitted by the IPCC, because it then raises the question of whether climate change, both past and future, is more natural than anthropogenic. What is particularly discouraging is that the vast majority of scientists contacted by reporters to comment on our paper clearly had not even read the paper. They just repeated what other scientists had said. And I doubt even those original scientists read it. All they know is that it dissed the climate models, and so it must be wrong.

[We have even had papers rejected by peer reviewers who we KNOW didn’t read the paper. They objected to “claims” we never even made in our paper. This is the sad state of peer review when a scientific discipline is so politicized.]

Unfortunately, the cloud feedback holy grail, as important as it is to knowing how much impact humans have on the climate system, still cannot be reliably diagnosed from our observations of the climate system. We have shown clear evidence here and here that the dominant influence in the satellite observations and in the models is clouds-causing-temperature change. And we have shown theoretically that in such a situation, one cannot diagnose a feedback; it is lost in the noise.

And if you try to diagnose feedback from satellite data like Dessler has, it will usually give the illusion of positive feedback — even if negative feedback is present.

Any agreement between models and observations found by studies like Dessler’s in such statistics probably just means they have similar levels of cloud-causing-temperature change, not similar feedbacks.

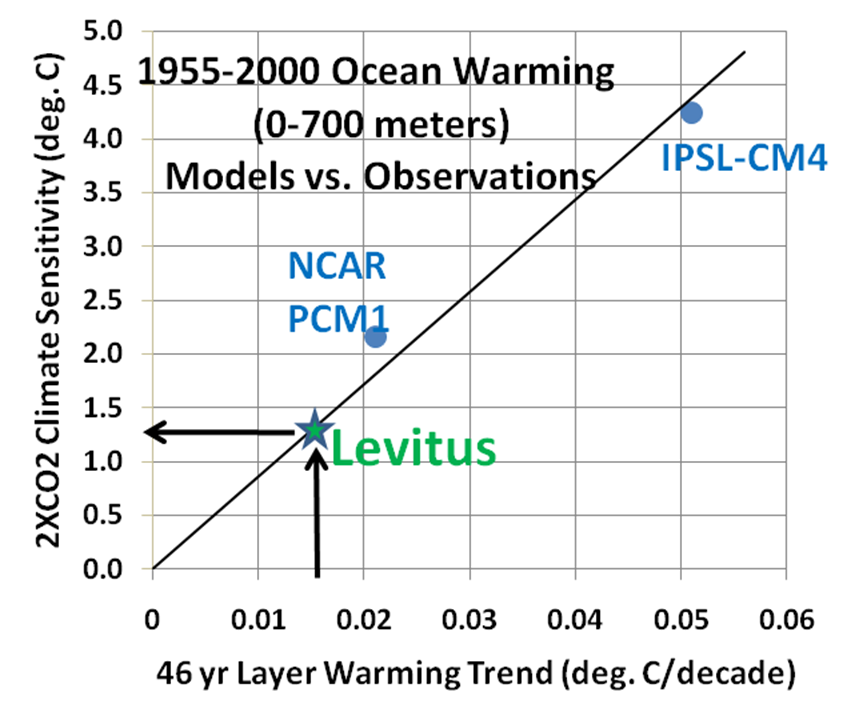

At the end of the day, the dirty little secret is that there is still no way to test the IPCC climate models for their feedback behavior, which means there is no way to know which (if any of them) is even close to being correct in its predictions for the future.

The very fact that the 20+ climate models the IPCC tracks still span just as wide a range of feedbacks as climate models did 20 years ago is evidence by itself that the climate community still can’t demonstrate what the real cloud feedbacks in the climate system are. Otherwise, they would tune their models accordingly.

The disconcerting conclusion is that global warming-related policy decisions are being guided by models which still have no way to be tested in their long-term predictions.

Finally, the fact that the media and pundits like Al Gore have been so successful at convincing the public that the climate models are reliable for forecasting the future shows that IPCC scientists have a much, much bigger problem with the media misrepresenting their work than I do.

And I don’t see those scientists trying to set that record straight.
