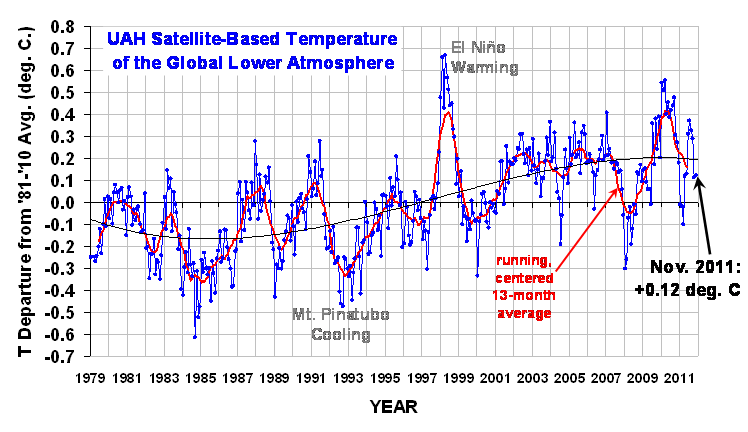

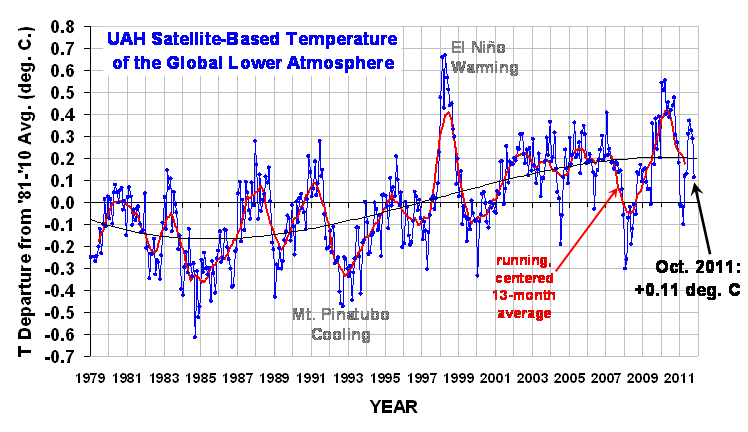

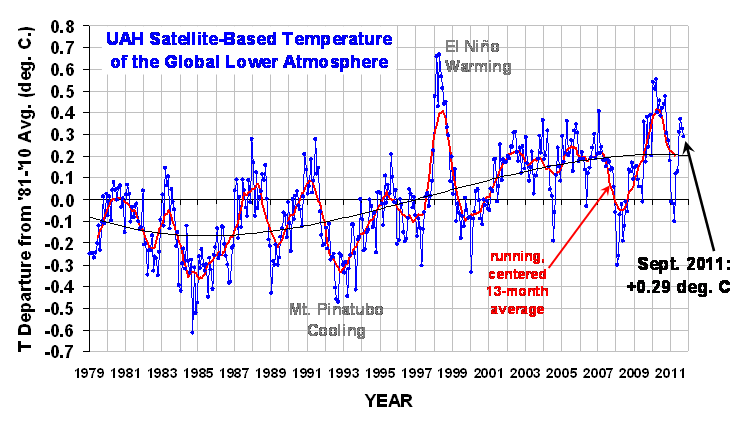

The UAH satellite-based global temperature dataset has reached 1/3 of a century in length, a milestone we marked with a press release last week (e.g. covered here).

As a result of that press release, a Capital Weather Gang blog post by Andrew Freedman was dutifully dispatched as damage control, since we had inconveniently noted the continuing disagreement between climate models used to predict global warming and the satellite observations.

What follows is a response by John Christy, who has been producing these datasets with me for the last 20 years:

Many of you are aware that as a matter of preference I do not use the blogosphere to report information about climate or to correct the considerable amount of misinformation that appears out there related to our work. My general rule is never to get in a fight with someone who owns an obnoxious website, because you are simply a tool of the gatekeeper at that point.

However, I thought I would do so here because a number of folks have requested an explanation of a blog post connected to the Washington Post that appeared on 20 Dec. Unfortunately, some of the issues are complicated, so the comments here will probably not satisfy those who want the details, and I don’t have time to address all of the post’s errors.

Earlier this week we reported on the latest monthly global temperature update, as we do every month; the update is distributed to dozens of news outlets. With 33 years of satellite data now in the hopper (essentially a third of a century), we decided to comment on the long-term character of the record, noting that the overall temperature trend of the bulk troposphere is less than that of the IPCC AR4 climate model projections for the same period. This has been noted in several publications and, to us, is not a new or unusual statement.

Suggesting that the actual climate is at odds with model projections does not sit well with those who desire that climate model output be granted high credibility. I was alerted to this blog post, which contains what I can only call “myths” about the UAH lower tropospheric dataset and model simulations. I’m unfamiliar with the author (Andrew Freedman), but the piece was clearly designed to present a series of assertions about the UAH data and model evaluation, to which we were not asked to respond. Without a knowledgeable response from the expert creators of the UAH dataset, the mythology of the post may be preserved.

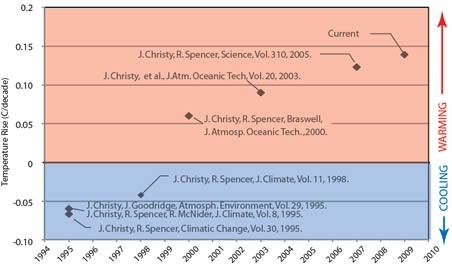

The first issue I want to address deals with the relationship between temperature trends in observations and those in model output. I often see such posts refer to an old CCSP document (2006) which, as I’ve reported in congressional testimony, was not very accurate to begin with, and which has since been superseded and contradicted by several more recent publications.

These publications specifically document the fact that bulk atmospheric temperatures in the climate system are warming at only 1/2 to 1/4 the rate of the IPCC AR4 model trends. Indeed, actual upper-air temperatures are warming at the same rate as, or less than, the observed surface temperatures (most obviously in the tropics). This is in clear and significant contradiction to model projections, which suggest that warming should be amplified with altitude.

The blog post even indicates that one of its quoted scientists, Ben Santer, agrees that the upper air is warming less than the surface, a result with which no model agrees. So the model vs. observational issue was not presented accurately in the post. This has been addressed in the peer-reviewed literature by us and others (Christy et al. 2007, 2010, 2011; McKitrick et al. 2010; Klotzbach et al. 2009, 2010).

Then, some people find comfort in simply denigrating the uncooperative UAH data (which have been the subject of many validation studies). We were the first to develop a microwave-based global temperature product. We have sought to produce the most accurate representation of the real world possible with these data; there is no premium in generating problematic data. When problems with various instruments or processes are discovered, we characterize, fix and publish the information. That adjustments are required over time should be obvious, since no one can predict when an instrument might run into problems, and the development of such a dataset from satellites was uncharted territory before we developed the first methods.

The Freedman blog post is completely wrong when it states that “when the problems are fixed, the trend always goes up.” Indeed, there have been a number of corrections that adjusted for spurious warming, leading to a reduction in the warming trend. That the scientists quoted in the post didn’t mention this says something about their bias.

The most significant of these problems, which we discovered in the late 1990s, was that the calibration of the radiometer was influenced by the temperature of the instrument itself (due to variable solar shadowing effects on a drifting polar-orbiting spacecraft). Both positive and negative adjustments were listed in the CCSP report mentioned above.

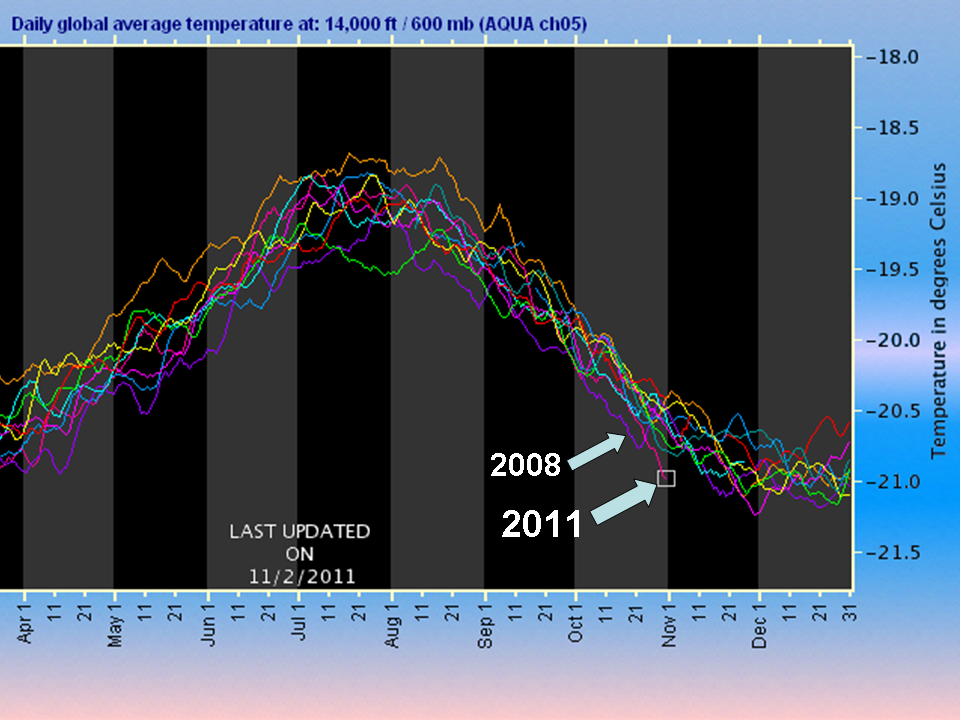

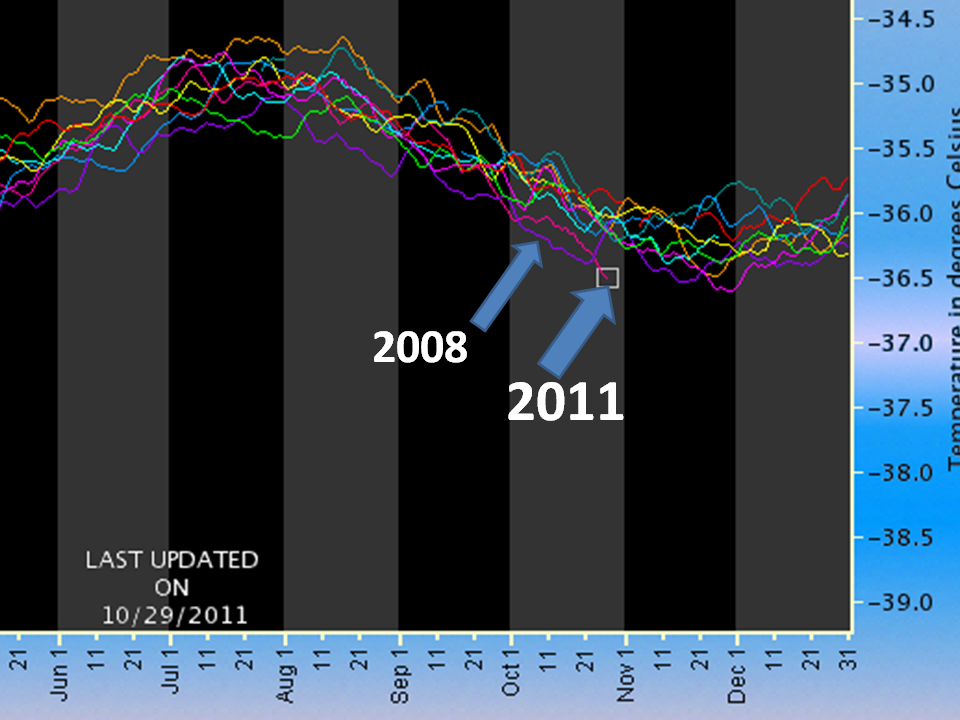

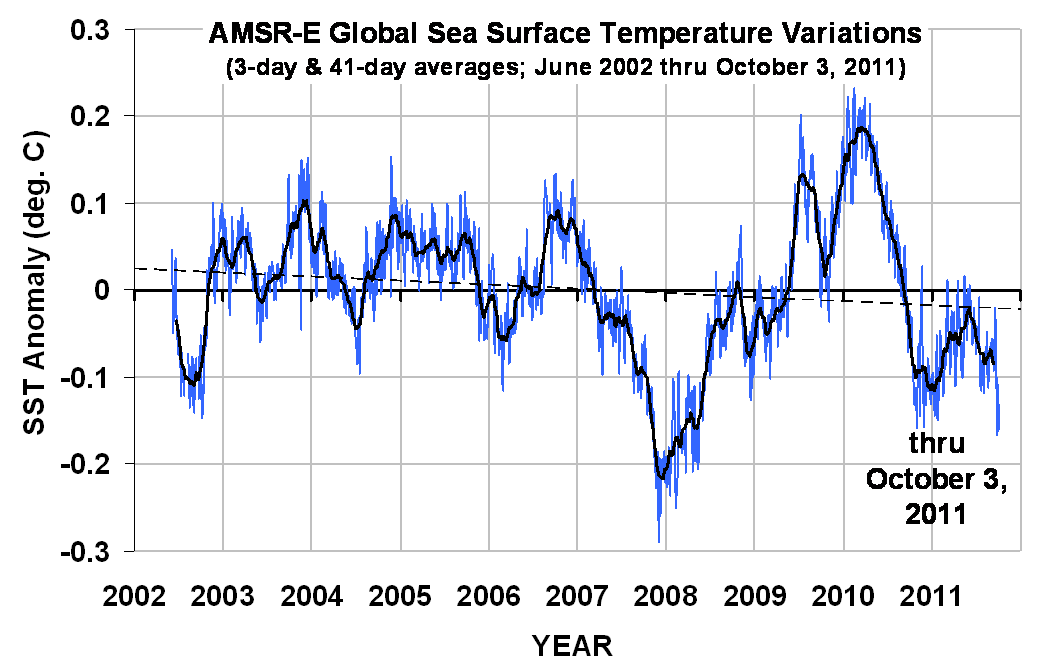

We are always working to provide the best products, and we may soon make another adjustment to account for an apparent spurious warming in the last few years of the aging Aqua AMSU (see operational notes here). We know the data are not perfect (no data are), but we have documented the relatively small error bounds of the reported trends using internal and external evidence (Christy et al. 2011).

A further misunderstanding in the blog post is promoted by the embedded figure (below, with credit given to a John Abraham, no affiliation). The figure is not, as claimed in the caption, a listing of “corrections”:

The major result of this diagram is simply how the trend of the data, which began in 1979, changed as time progressed (with minor satellite adjustments included). The largest effect one sees here is due to the spike in warming from the super El Nino of 1998, which tilted the trend to be much more positive after that date. (Note that the diamonds are incorrectly placed on the publication dates rather than on the last year of the trend reported in the corresponding paper, so they should be shifted to the left by about a year. The 33-year trend through 2011 is +0.14 °C/decade.)

The notion in the blog post that surface temperature datasets are somehow robust and pristine is remarkable. I encourage readers to check out papers such as my examinations of the Central California and East African temperature records. There I show, by using 10 times as many stations as are used in the popular surface temperature datasets, that recent surface temperature trends are highly overstated in these regions (Christy et al. 2006; 2009). We also document how surface development disrupts the formation of the nocturnal boundary layer in many ways, leading to warmer nighttime temperatures.
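Why a single warm year such as 1998 can tilt a trend that starts in 1979 can be shown with a short, hedged sketch. It uses entirely synthetic annual anomalies (not UAH data and not the UAH processing code): a steady rise of 0.10 °C/decade plus a hypothetical one-year +0.5 °C spike placed in 1998. The helper name `decadal_trend` is illustrative only. It fits an ordinary least-squares line with NumPy's `polyfit` and converts the slope to °C/decade.

```python
import numpy as np

def decadal_trend(years, anomalies):
    """OLS slope of anomalies vs. year, converted from °C/year to °C/decade."""
    slope, _intercept = np.polyfit(years, anomalies, 1)
    return slope * 10.0

# Synthetic annual anomalies (hypothetical, NOT UAH data):
# a steady 0.01 °C/year (0.10 °C/decade) rise...
years = np.arange(1979, 2012)
base = 0.01 * (years - 1979)

# ...plus a one-year +0.5 °C spike standing in for a 1998-style El Nino.
spiked = base.copy()
spiked[years == 1998] += 0.5

# Trend of each series from 1979 through successive end years.
for end in (1997, 1998, 1999, 2005, 2011):
    mask = years <= end
    print(end,
          round(decadal_trend(years[mask], base[mask]), 3),
          round(decadal_trend(years[mask], spiked[mask]), 3))
```

With these assumed numbers, the spiked series shows a trend of roughly 0.17 °C/decade when the record ends in 1998, and the excess shrinks toward the underlying 0.10 °C/decade as later years are added. A point near the end of a record has more leverage on an OLS slope than one near its middle. This toy series only illustrates that leverage effect; it is not meant to reproduce the shape of the figure discussed above.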

That’s enough for now. The Washington Post blogger, in my view, is writing as a convinced advocate, not as a curious scientist or impartial journalist. But, you already knew that.

In addition to the above, I (Roy) would like to address comments made by Ben Santer in the Washington Post blog:

A second misleading claim the (UAH) press release makes is that it’s simply not possible to identify the human contribution to global warming, despite the publication of studies that have done just that. “While many scientists believe it [warming] is almost entirely due to humans, that view cannot be proved scientifically,” Spencer states.

Ben Santer, a climate researcher at Lawrence Livermore National Laboratory in California, said Spencer and Christy are mistaken. “People who claim (like Roy Spencer did) that it is “impossible” to separate human from natural influences on climate are seriously misinformed,” he wrote via email. “They are ignoring several decades of relevant research and literature. They are embracing ignorance.” “Many dozens of scientific studies have identified a human “fingerprint” in observations of surface and lower tropospheric temperature change,” Santer stated.

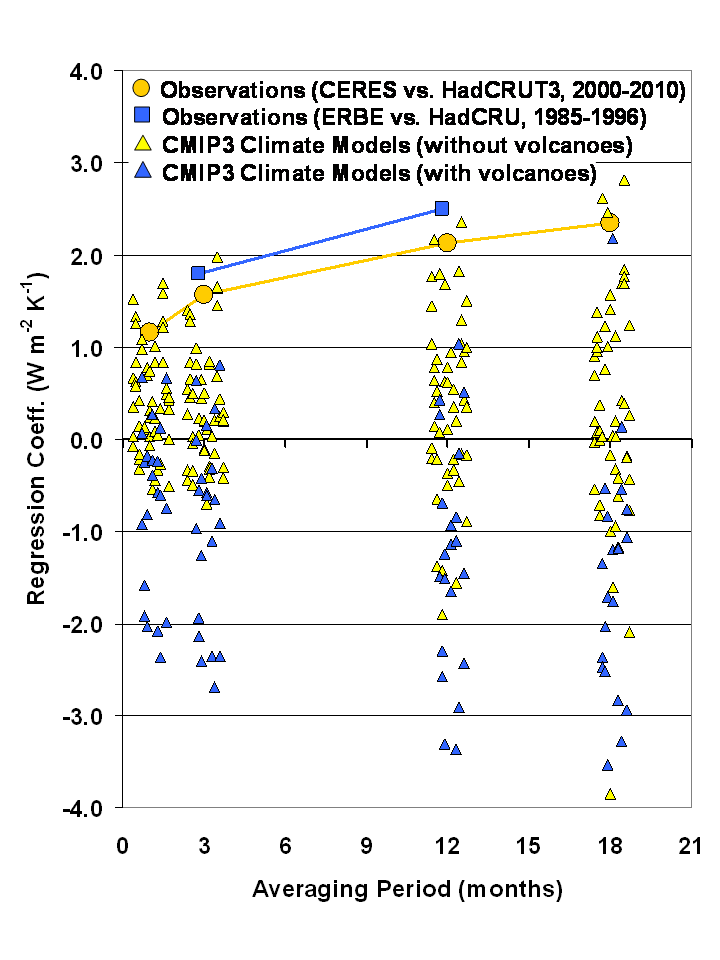

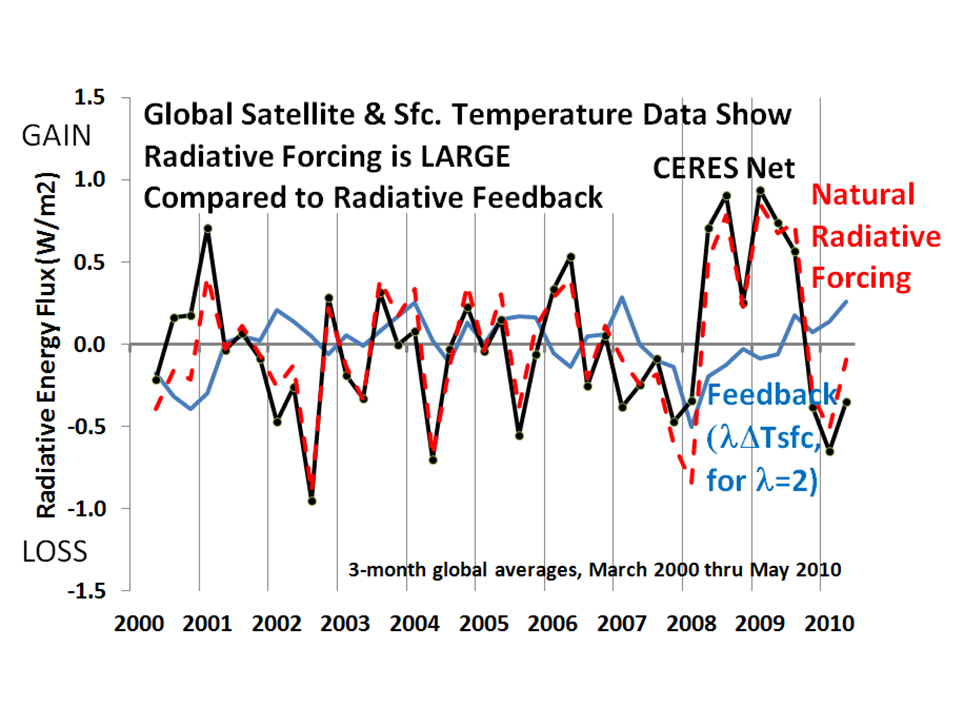

In my opinion, the supposed “fingerprint” evidence of human-caused warming continues to be one of the great pseudo-scientific frauds of the global warming debate. There is no way to distinguish warming caused by increasing carbon dioxide from warming caused by a more humid atmosphere responding to, say, naturally warming oceans, which could in turn be responding to a slight decrease in maritime cloud cover (see, for example, “Oceanic Influences on Recent Continental Warming”).

Many papers have indeed claimed to find a human “fingerprint”, but upon close examination the evidence is simply consistent with human-caused warming, and the authors conveniently neglect to point out that the evidence would also be consistent with naturally caused warming. This disingenuous sleight-of-hand is just one more example of why the public is increasingly distrustful of the climate scientists they support with their tax dollars.
