UPDATE: I have been contacted by Andy Dessler, who is now examining my calculations, and we are working to resolve a remaining difference there. Also, apparently his paper has not been officially published, and so he says he will change the galley proofs as a result of my blog post; here is his message:

“I’m happy to change the introductory paragraph of my paper when I get the galley proofs to better represent your views. My apologies for any misunderstanding. Also, I’ll be changing the sentence “over the decades or centuries relevant for long-term climate change, on the other hand, clouds can indeed cause significant warming” to make it clear that I’m talking about cloud feedbacks doing the action here, not cloud forcing.”

Update #2 (Sept. 8, 2011): I have made several updates as a result of correspondence with Dessler, which will appear underlined, below. I will leave it to the reader to decide whether it was our Remote Sensing paper that should not have passed peer review (as Trenberth has alleged), or Dessler’s paper meant to refute our paper.

NOTE: This post is important, so I’m going to sticky it at the top for quite a while.

While we have had only one day to examine Andy Dessler’s new paper in GRL, I do have some initial reaction and calculations to share. At this point, it looks quite likely we will be responding to it with our own journal submission… although I doubt we will get the fast-track, red carpet treatment he got.

There are a few positive things in this new paper which make me feel like we are at least beginning to talk the same language in this debate (part of The Good). But, I believe I can already demonstrate some of The Bad, for example, showing Dessler is off by about a factor of 10 in one of his central calculations.

Finally, Dessler must be called out on The Ugly things he put in the paper (which he has now agreed to change).

1. THE GOOD

Estimating the Errors in Climate Feedback Diagnosis from Satellite Data

We are pleased that Dessler now accepts that there is at least the *potential* of a problem in diagnosing radiative feedbacks in the climate system *if* non-feedback cloud variations were to cause temperature variations. It looks like he understands the simple forcing-feedback equation we used to address the issue (some quibbles over the equation terms aside), as well as the ratio we introduced to estimate the level of contamination of feedback estimates. This is indeed progress.

He adds a new way to estimate that ratio, and gets a number which — if accurate — would indeed suggest little contamination of feedback estimates from satellite data. This is very useful, because we can now talk about numbers and how good various estimates are, rather than responding to hand waving arguments over whether “clouds cause El Nino” or other red herrings.

I have what I believe to be good evidence, though, that his calculation is off by a factor of 10 or so. More on that under THE BAD, below.

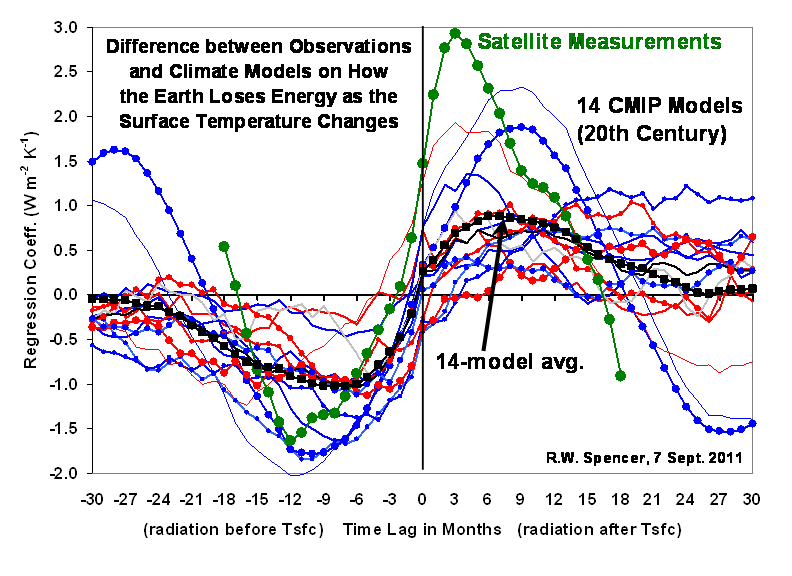

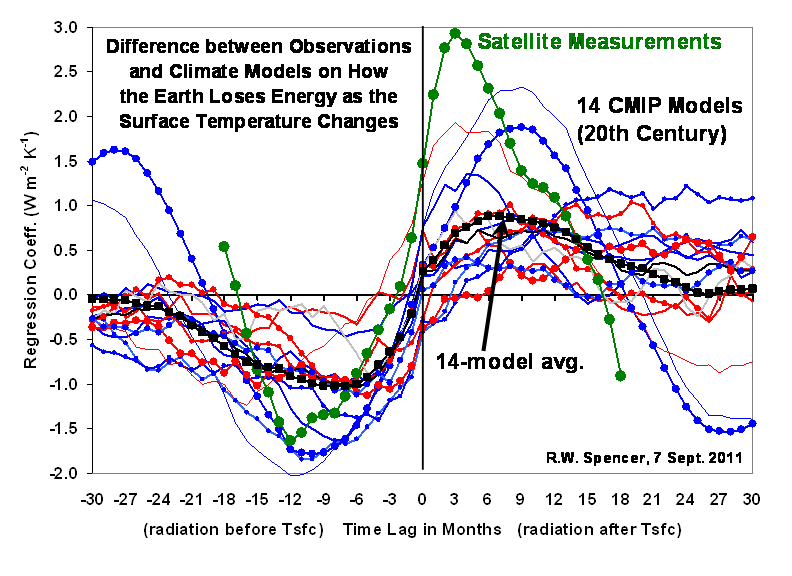

Comparisons of Satellite Measurements to Climate Models

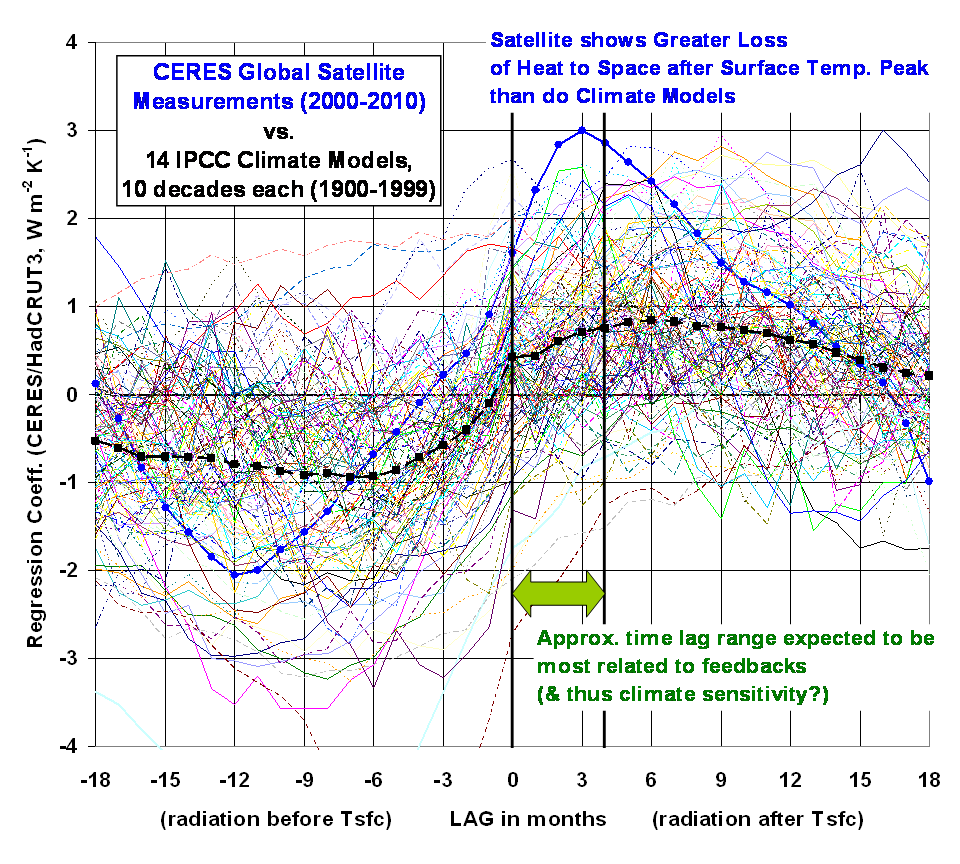

Figure 2 in his paper, we believe, helps make our point for us: there is a substantial difference between the satellite measurements and the climate models. He tries to minimize the discrepancy by putting 2-sigma error bounds on the plots and claiming the satellite data are not necessarily inconsistent with the models.

But this is NOT the same as saying the satellite data SUPPORT the models. After all, the IPCC’s best-estimate projection of future warming from a doubling of CO2 (3 deg. C) is almost exactly the average of all of the models’ sensitivities! So, when the satellite observations do depart substantially from the average behavior of the models, this raises an obvious red flag.

Massive changes in the global economy based upon energy policy are not going to happen, if the best the modelers can do is claim that our observations of the climate system are not necessarily inconsistent with the models.

(BTW, a plot of all of the models, which so many people have been clamoring for, will be provided in The Ugly, below.)

2. THE BAD

The Energy Budget Estimate of How Much Clouds Cause Temperature Change

While I believe he gets a “bad” number, this is the most interesting and most useful part of Dessler’s paper. He basically uses the terms in the forcing-feedback equation we use (which is based upon basic energy budget considerations) to claim that the energy required to cause the observed changes in the global-average ocean mixed layer temperature is far too large to be supplied by satellite-observed variations in the radiative input into the ocean brought about by cloud variations (my wording).

He gets a ratio of about 20:1 for non-radiatively forced (i.e. non-cloud) temperature changes versus radiatively (mostly cloud) forced variations. If that 20:1 number is indeed good, then we would have to agree this is strong evidence against our view that a significant part of temperature variations is radiatively forced. (It looks like Andy will be revising this downward, although it’s not clear by how much because his paper is ambiguous about how he computed and then combined the radiative terms in the equation, below.)

The numbers he uses to do this, however, are quite suspect. Dessler uses NONE of the 3 most direct estimates that most researchers would use for the various terms. (A clarification on this appears below.) Why? I know we won’t be so crass as to claim in our next peer-reviewed publication (as he did in his, see The Ugly, below) that he picked certain datasets because they best supported his hypothesis.

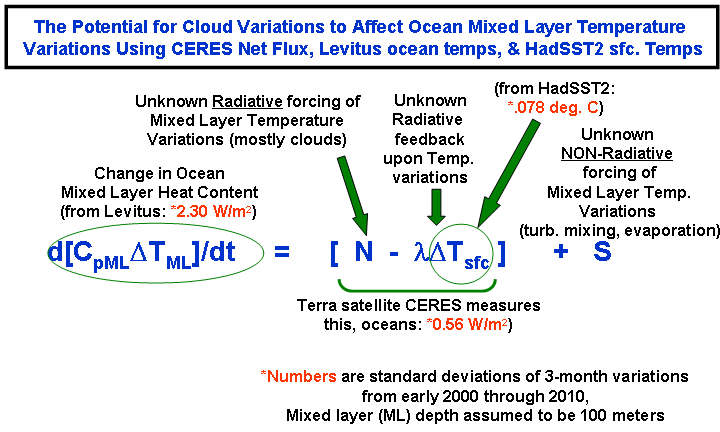

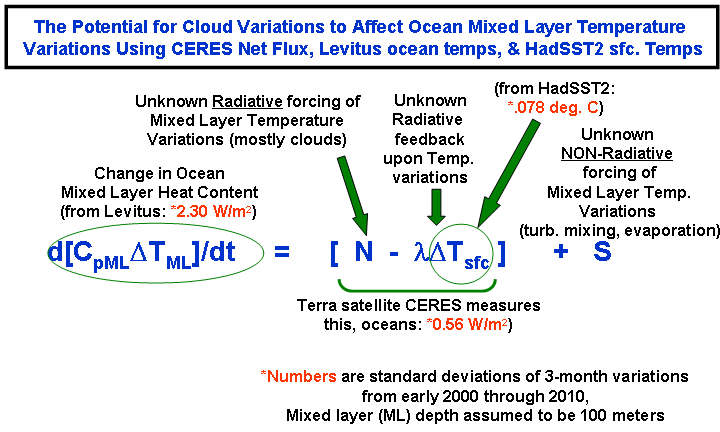

The following graphic shows the relevant equation, and the numbers he should have used since they are the best and most direct observational estimates we have of the pertinent quantities. I invite the more technically inclined to examine this. For those geeks with calculators following along at home, you can run the numbers yourself:

Here I went ahead and used Dessler’s assumed 100 meter depth for the ocean mixed layer, rather than the 25 meter depth we used in our last paper. (It now appears that Dessler will be using a 700 m depth, a number which was not mentioned in his preprint. I invite you to read his preprint and decide whether he is now changing from 100 m to 700 m as a result of issues I have raised here. It really is not obvious from his paper what he used).
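To get a feel for why the assumed depth matters so much, here is a minimal sketch of how the heat capacity of the layer, and therefore the heat-storage term in an energy budget, scales with depth. The seawater density and specific heat are assumed typical values, and the 0.1 deg. C per year warming rate is purely hypothetical; none of these numbers come from either paper, and this is not the calculation either of us performed.

```python
# Assumed typical seawater properties (not taken from either paper).
SEAWATER_DENSITY = 1025.0        # kg per cubic meter
SEAWATER_SPECIFIC_HEAT = 3990.0  # joules per kg per degree
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def mixed_layer_heat_capacity(depth_m):
    """Heat capacity per unit area (J per sq. meter per degree) of a water layer."""
    return SEAWATER_DENSITY * SEAWATER_SPECIFIC_HEAT * depth_m


def storage_flux(depth_m, warming_rate_deg_per_year):
    """Flux (Watts per sq. meter) needed to warm the layer at the given rate."""
    rate_per_second = warming_rate_deg_per_year / SECONDS_PER_YEAR
    return mixed_layer_heat_capacity(depth_m) * rate_per_second


if __name__ == "__main__":
    hypothetical_rate = 0.1  # deg. C per year, for illustration only
    for depth in (25.0, 100.0, 700.0):
        capacity = mixed_layer_heat_capacity(depth)
        flux = storage_flux(depth, hypothetical_rate)
        print(f"{depth:5.0f} m: {capacity:.3e} J/m^2/deg, {flux:.2f} W/m^2")
```

For a fixed rate of temperature change, the required flux grows in direct proportion to the depth, so going from 25 m to 100 m to 700 m changes the storage term by factors of 4 and 7. In practice a deeper layer also shows smaller temperature variations, so the depth and the temperature dataset have to be chosen consistently.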

Using the above equation, if I assume a feedback parameter λ=3 Watts per sq. meter per degree, that 20:1 ratio Dessler gets becomes 2.2:1. If I use a feedback parameter of λ=6, then the ratio becomes 1.7:1. This is basically an order of magnitude difference from his calculation.

Again I ask: why did Dessler choose NOT to use the 3 most obvious and best sources of data to evaluate the terms in the above equation?

(1) Levitus for observed changes in the ocean mixed layer temperature. (It now appears he will be using a number consistent with the Levitus 0-700 m layer.)

(2) CERES Net radiative flux for the total of the 2 radiative terms in the above equation. (This looks like it could be a minor source of difference, except it appears he put all of his Rcld variability in the radiative forcing term, which he claims helps our position. Running the numbers will reveal the opposite is true, since his Rcld actually contains both forcing and feedback components which partially offset each other.)

(3) HadSST for sea surface temperature variations. (This will likely be the smallest source of difference.)

The Use of AMIP Models to Claim our Lag Correlations Were Spurious

I will admit, this was pretty clever…but at this early stage I believe it is a red herring.

Dessler’s Fig. 1 shows lag correlation coefficients that, I admit, do look kind of like the ones we got from satellite (and CMIP climate model) data. The claim is that since the AMIP model runs do not allow clouds to cause surface temperature changes, this means the lag correlation structures we published are not evidence of clouds causing temperature change.

Following are the first two objections which immediately come to my mind:

1) Imagine (I’m again talking mostly to you geeks out there) a time series of temperature represented by a sine wave, and then a lagged feedback response represented by another sine wave. If you then calculate regression coefficients between those 2 time series at different time leads and lags (try this in Excel if you want), you will indeed get a lag correlation structure like the one we see in the satellite data.

But look at what Dessler has done: he has used models which DO NOT ALLOW cloud changes to affect temperature, in order to support his case that cloud changes do not affect temperature! While I will have to think about this some more, it smacks of circular reasoning. He could have more easily demonstrated it with my 2 sine waves example.

Assuming there is causation in only one direction to produce evidence there is causation in only one direction seems, at best, a little weak.

2) In the process, though, what does his Fig. 1 show that is significant to feedback diagnosis, if we accept that all of the radiative variations are, as Dessler claims, feedback-induced? Exactly what the new paper by Lindzen and Choi (2011) explores: that there is some evidence of a lagged response of radiative feedback to a temperature change.

And, if this is the case, then why isn’t Dr. Dessler doing his regression-based estimates of feedback at the time lag of maximum response, as Lindzen now advocates?

Steve McIntyre, to whom I have provided the data so he can explore it, is also examining this as one of several statistical issues. So, Dessler’s Fig. 1 actually raises a critical issue in feedback diagnosis he has yet to address.
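For those who would rather not use Excel, the two-sine-wave thought experiment from objection 1) can be sketched in a few lines of Python. The 48-month period, the 4-month delay, and the 240-month record length are arbitrary assumptions chosen for illustration, and the sketch uses correlation coefficients rather than regression coefficients (for two sine waves of equal amplitude the lag structure has the same shape). It involves no satellite or model data.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)


def lag_correlation(x, y, max_lag):
    """Correlate x(t) with y(t + lag) for every lag from -max_lag to +max_lag."""
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = x[:len(x) - lag], y[lag:]
        else:
            a, b = x[-lag:], y[:len(y) + lag]
        result[lag] = pearson(a, b)
    return result


# Assumed, purely illustrative parameters (in months).
PERIOD = 48
DELAY = 4
N_MONTHS = 240

# Temperature is a sine wave; the response is the same wave delayed by DELAY.
temperature = [math.sin(2 * math.pi * t / PERIOD) for t in range(N_MONTHS)]
response = [math.sin(2 * math.pi * (t - DELAY) / PERIOD) for t in range(N_MONTHS)]

corr = lag_correlation(temperature, response, max_lag=12)
best_lag = max(corr, key=corr.get)

if __name__ == "__main__":
    for lag in sorted(corr):
        print(f"lag {lag:+3d} months: r = {corr[lag]:+.3f}")
    print("lag of maximum correlation:", best_lag)
```

The correlation peaks at the lag equal to the delay and falls off smoothly on either side, with causation running in one direction only by construction. The zero-lag value is noticeably lower than the peak, which is the point behind objection 2): an estimate made at zero lag does not capture the full strength of a lagged response.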

3. THE UGLY

(MOST, IF NOT ALL, OF THESE OBJECTIONS WILL BE ADDRESSED IN DESSLER’S UPDATE OF HIS PAPER BEFORE PUBLICATION)

The new paper contains a few statements which the reviewers should not have allowed to be published because they either completely misrepresent our position, or accuse us of cherry picking (which is easy to disprove).

Misrepresentation of Our Position

Quoting Dessler’s paper, from the Introduction:

“Introduction

The usual way to think about clouds in the climate system is that they are a feedback… …In recent papers, Lindzen and Choi [2011] and Spencer and Braswell [2011] have argued that reality is reversed: clouds are the cause of, and not a feedback on, changes in surface temperature. If this claim is correct, then significant revisions to climate science may be required.”

But we have never claimed anything like “clouds are the cause of, and not a feedback on, changes in surface temperature”! We claim causation works in BOTH directions, not just one direction (feedback) as he claims. Dr. Dessler knows this very well, and I would like to know:

1) what he was trying to accomplish by such a blatant misrepresentation of our position, and

2) how did all of the peer reviewers of the paper, who (if they are competent) should be familiar with our work, allow such a statement to stand?

Cherry picking of the Climate Models We Used for Comparison

This claim has been floating around the blogosphere ever since our paper was published. To quote Dessler:

“SB11 analyzed 14 models, but they plotted only six models and the particular observational data set that provided maximum support for their hypothesis.”

How is picking the 3 most sensitive models AND the 3 least sensitive models going to “provide maximum support for (our) hypothesis”? If I had picked ONLY the 3 most sensitive, or ONLY the 3 least sensitive, that might be cherry picking…depending upon what was being demonstrated.

And where is the evidence those 6 models produce the best support for our hypothesis? I would have had to run hundreds of combinations of the 14 models to accomplish that. Is that what Dr. Dessler is accusing us of?

Instead, the point of using the 3 most sensitive and 3 least sensitive models was to emphasize that not only are the most sensitive climate models inconsistent with the observations, so are the least sensitive models.

Remember, the IPCC’s best estimate of 3 deg. C warming is almost exactly the warming produced by averaging the full range of its models’ sensitivities together. The satellite data depart substantially from that. I think inspection of Dessler’s Fig. 2 supports my point.

But, since so many people are wondering about the 8 models I left out, here are all 14 of the models’ separate results, in their full, individual glory:

I STILL claim there is a large discrepancy between the satellite observations and the behavior of the models.

CONCLUSION

These are my comments and views after having had only 1 day with the new paper. It will take weeks, at a minimum, to further explore all of the issues raised by Dessler (2011).

Based upon the evidence above, I would say we are indeed going to respond with a journal submission to answer Dessler’s claims. I hope that GRL will offer us as rapid a turnaround as Dessler got in the peer review process. Feel free to take bets on that. 🙂

And, to end on a little lighter note, we were quite surprised to see this statement in Dessler’s paper in the Conclusions (italics are mine):

“These calculations show that clouds did not cause significant climate change over the last decade (over the decades or centuries relevant for long-term climate change, on the other hand, clouds can indeed cause significant warming).”

Long term climate change can be caused by clouds??! Well, maybe Andy is finally seeing the light! 😉 (Nope. It turns out he meant “*RADIATIVE FEEDBACK DUE TO* clouds can indeed cause significant warming”. An obvious, minor typo. My bad.)
